Tokenization is the process of breaking down a piece of text into small units called tokens. A token may be a word, part of a word or just characters like punctuation.

It is one of the most foundational NLP tasks, and a difficult one, because every language has its own grammatical constructs, which are often difficult to write down as rules.

Introduction

Tokenization is one of the least glamorous parts of NLP: how do we split our text so that we can do interesting things with it?

Despite its lack of glamour, it’s super important.

Tokenization defines what our NLP models can express. Even so, it’s not always top of mind.

In the rest of this article, I’d like to give you a high-level overview of tokenization, where it came from, what forms it takes, and when and how tokenization is important.

Why Is It Called Tokenization?

Let’s look at the history of tokenization before we dive deep into its everyday use. The first thing I want to know is why it’s called tokenization anyway.

Natural language processing goes hand in hand with “formal languages,” a field between linguistics and computer science that, among other things, studies the linguistic aspects of programming languages.

Just as in natural language, formal languages have distinct strings that carry meaning. We often call such strings words, but to avoid confusion, formal-language researchers called them tokens.

In other words, a token is a string with a known meaning.

The easiest place to see this is in your code editor.

When you write def in Python, the editor colors it because it recognizes def as a token with special meaning (a keyword). If you instead write “def” inside quotes, it is colored differently, because the editor recognizes it as a token whose meaning is “arbitrary string.”

```python
def foo():
    pass

"def foo"
```
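Python’s standard library exposes the same idea through its `tokenize` module. The sketch below (the `classify` helper is a hypothetical name of our own) runs both snippets through it: `def` comes back as a `NAME` token that is also a keyword, while the quoted version is a single `STRING` token. An editor’s highlighter may use its own lexer, so treat this as an illustration of the concept rather than of how any particular editor works.

```python
import io
import keyword
import token
import tokenize

# Token types that only describe layout, not content; skipped for readability.
LAYOUT = {token.NEWLINE, token.NL, token.INDENT, token.DEDENT, token.ENDMARKER}


def classify(source: str) -> list[tuple[str, str]]:
    """Return (kind, text) pairs for each token the Python tokenizer finds."""
    pairs = []
    for tok in tokenize.generate_tokens(io.StringIO(source).readline):
        if tok.type in LAYOUT:
            continue
        kind = token.tok_name[tok.type]
        # The tokenizer reports keywords as NAME; flag them the way an editor would.
        if tok.type == token.NAME and keyword.iskeyword(tok.string):
            kind = "KEYWORD"
        pairs.append((kind, tok.string))
    return pairs


print(classify("def foo():\n    pass\n"))
# [('KEYWORD', 'def'), ('NAME', 'foo'), ('OP', '('), ('OP', ')'), ('OP', ':'), ('KEYWORD', 'pass')]
print(classify('"def foo"\n'))
# [('STRING', '"def foo"')]
```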

Modern Natural Language Processing thinks about the meaning of tokens a little differently. As we’ll see below, modern tokenization is less concerned with a token’s meaning.

Why Do We Tokenize?

This brings us to the question: why do we need to tokenize at all when we do NLP?

At first glance, it seems almost silly. We have a bunch of text, and we want the computer to work on all of it, so why break it into small tokens?

Programming languages work by breaking raw code into tokens and then combining them according to some logic (the language’s grammar). Natural language processing follows the same idea.

By breaking up the text into small, known fragments, we can apply a small(ish) set of rules to combine them into some larger meaning. In programming languages, tokens are connected via formal grammars.
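To make “tokens plus grammar” concrete, here is a minimal sketch for a hypothetical toy arithmetic language: a lexer turns raw text into tokens drawn from a small, finite set of kinds, and a recursive-descent parser combines them according to a three-rule grammar. The grammar and the helper names (`lex`, `Parser`, `evaluate`) are illustrative assumptions, not taken from any real language.

```python
import re

# Hypothetical toy language: integers, + - * /, and parentheses.
TOKEN_RE = re.compile(r"\s*(\d+|[-+*/()])")


def lex(text: str) -> list[str]:
    """Break raw text into tokens drawn from a small, known set of kinds."""
    tokens, pos, text = [], 0, text.rstrip()
    while pos < len(text):
        match = TOKEN_RE.match(text, pos)
        if match is None:
            raise SyntaxError(f"unexpected character at position {pos}")
        tokens.append(match.group(1))
        pos = match.end()
    return tokens


class Parser:
    """Recursive-descent parser that combines tokens using this grammar:

    expr   := term (("+" | "-") term)*
    term   := factor (("*" | "/") factor)*
    factor := NUMBER | "(" expr ")"
    """

    def __init__(self, tokens: list[str]):
        self.tokens, self.pos = tokens, 0

    def peek(self):
        return self.tokens[self.pos] if self.pos < len(self.tokens) else None

    def take(self):
        tok = self.peek()
        self.pos += 1
        return tok

    def expr(self):
        value = self.term()
        while self.peek() in ("+", "-"):
            op, rhs = self.take(), self.term()
            value = value + rhs if op == "+" else value - rhs
        return value

    def term(self):
        value = self.factor()
        while self.peek() in ("*", "/"):
            op, rhs = self.take(), self.factor()
            value = value * rhs if op == "*" else value / rhs
        return value

    def factor(self):
        tok = self.take()
        if tok == "(":
            value = self.expr()
            if self.take() != ")":
                raise SyntaxError("expected ')'")
            return value
        if tok is not None and tok.isdigit():
            return int(tok)
        raise SyntaxError(f"unexpected token {tok!r}")


def evaluate(text: str):
    parser = Parser(lex(text))
    value = parser.expr()
    if parser.peek() is not None:
        raise SyntaxError("unexpected trailing tokens")
    return value


print(lex("2 * (3 + 4)"))       # ['2', '*', '(', '3', '+', '4', ')']
print(evaluate("2 * (3 + 4)"))  # 14
```

The lexer knows nothing about precedence or nesting; all of that lives in the grammar, which only ever sees the finite set of token kinds.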

In natural language processing, by contrast, different ways of combining tokens have evolved over the years, alongside an array of tokenization methods. The motivation behind tokenization, however, has stayed the same: to present the computer with a finite set of symbols that it can combine to produce the desired result.

Do Tokens Have Meaning?

While a token like def has a well-defined meaning in a programming language, natural language is more subtle, and whether a token has meaning at all becomes a profound philosophical question.

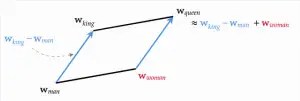

Algorithms like Word2Vec or GloVe, which assign a vector to each token, have gotten us used to the idea that tokens have meaning. You can compute “king - man + woman” and get a vector whose closest neighbor is the vector for “queen.” That seems pretty meaningful.

It’s important to remember that Word2Vec and its variants are essentially a map from tokens (that is, symbols) to vectors (lists of numbers). The implication is that what “meaning” gets captured, and what can have meaning at all, depends on how you tokenized the text in the first place. We’ll see a few examples of that below.
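A minimal sketch of both points, assuming hand-made three-dimensional vectors (these are not real Word2Vec or GloVe embeddings): the “model” is literally a dictionary keyed by token strings, and the analogy answer is whichever token’s vector has the highest cosine similarity to king - man + woman, excluding the three input words. Only tokens that exist as keys can ever be returned.

```python
import math

# Toy, hand-made vectors (roughly: royalty, maleness, femaleness).
# NOT trained embeddings; they only illustrate the token -> vector map.
EMB = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.1, 0.8],
    "man":   [0.1, 0.9, 0.1],
    "woman": [0.1, 0.1, 0.9],
    "apple": [0.0, 0.3, 0.3],
}


def cosine(u, v):
    dot = sum(x * y for x, y in zip(u, v))
    return dot / (math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v)))


def analogy(a, b, c, emb=EMB):
    """Return the token whose vector is closest to emb[a] - emb[b] + emb[c]."""
    target = [x - y + z for x, y, z in zip(emb[a], emb[b], emb[c])]
    candidates = (w for w in emb if w not in {a, b, c})
    return max(candidates, key=lambda w: cosine(emb[w], target))


print(analogy("king", "man", "woman"))  # queen
```

If the tokenizer had never produced “queen” as a token, there would be no row for it, and no amount of vector arithmetic could return it.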

Why Is Tokenization Hard?

I’m a native Hebrew speaker, and Hebrew is a funny language. The word הספר could mean the book, the barber, the far-off land, or the story.

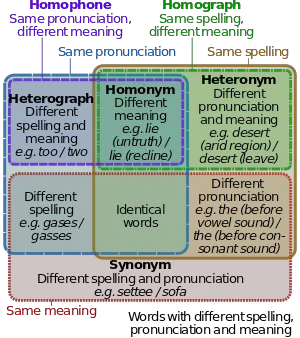

These are called heteronyms: words that are spelled the same but have different meanings and pronunciations. Heteronyms can be problematic in natural language processing because, if we assign all of the different meanings to the same token, our NLP algorithms might not be able to distinguish them.
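English has heteronyms too, such as “lead” (to guide) and “lead” (the metal). This sketch, using a hypothetical two-sentence corpus, shows why a word-level vocabulary cannot tell the senses apart: both occurrences map to the same ID, so any per-token vector looked up from that ID is identical as well.

```python
# Hypothetical two-sentence corpus; "lead" means "to guide" in the first
# sentence and the metal in the second.
sentences = ["they lead the team", "the pipe is made of lead"]

# A word-level vocabulary assigns one ID per distinct string, whatever its sense.
vocab: dict[str, int] = {}
for sentence in sentences:
    for word in sentence.split():
        vocab.setdefault(word, len(vocab))

ids = [[vocab[w] for w in s.split()] for s in sentences]
print(ids)  # [[0, 1, 2, 3], [2, 4, 5, 6, 7, 1]]
```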

One of my favorite examples of tricky tokenization is the following:

“What has four letters never has five letters but sometimes has nine letters.”

If you notice the period at the end, you’ll see that it’s not a question.

This example raises three distinct tricky issues for natural language processing.

First, does your tokenization process preserve punctuation? Many don’t because punctuation is often not part of a word.

Second, we encounter a variant of the heteronym problem: “what,” “never,” and “sometimes” aren’t used here in their usual sense but as arbitrary strings.

Finally, we see again that the meaning of a token often depends on the context in which it appears. As Firth put it, “You shall know a word by the company it keeps.”
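Here is a short sketch of the first two issues, using Python’s `re` module. A naive whitespace split glues the period onto the last word, while a simple punctuation-aware pattern (one heuristic among many, chosen here for illustration) keeps it as a separate token. Stripping punctuation entirely would lose the very character that tells us the sentence is not a question. Counting letters shows that “what,” “never,” and “sometimes” behave as plain strings here.

```python
import re

RIDDLE = "What has four letters never has five letters but sometimes has nine letters."

# Naive whitespace split: the period stays glued to the last word.
naive = RIDDLE.split()

# Punctuation-aware split: runs of word characters, or single punctuation marks.
punct_aware = re.findall(r"\w+|[^\w\s]", RIDDLE)

print(naive[-1])         # letters.
print(punct_aware[-2:])  # ['letters', '.']

# Read literally, these words are just strings with a letter count.
for word in ("What", "never", "sometimes"):
    print(word, len(word))  # What 4, never 5, sometimes 9
```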

Another consideration with tokenization is vocabulary size: the number of distinct tokens our system recognizes. In almost all NLP systems, the vocabulary is fixed up front and can’t easily be expanded or updated later.

At first glance, it’s natural to want to capture “all the tokens” so that we never miss anything.

However, this choice often comes with a cost. Having a large number of rare tokens makes NLP systems prone to overfitting, because each rare token appears in only a handful of training examples. Also, for systems that generate text, the computational cost of training and inference grows with the vocabulary size and quickly becomes a barrier. See the paper [Strategies for Training Large Vocabulary Neural Language Models][5-strategies-for-large-vocab] for more details and methods to overcome that problem.
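The trade-off can be sketched in a few lines. One common strategy (an assumption here, not the only option) is a frequency cutoff: tokens seen fewer than `min_count` times are mapped to a shared `<unk>` token. The parameter count assumes a plain dense softmax output layer with one weight row and one bias per vocabulary entry; the hidden size of 512 and vocabulary of 50,000 are illustrative numbers, not taken from any specific model.

```python
from collections import Counter

UNK = "<unk>"


def build_vocab(tokens: list[str], min_count: int = 2) -> dict[str, int]:
    """Keep tokens seen at least `min_count` times; all others will map to <unk>."""
    counts = Counter(tokens)
    kept = sorted(tok for tok, n in counts.items() if n >= min_count)
    return {tok: i for i, tok in enumerate([UNK] + kept)}


def encode(tokens: list[str], vocab: dict[str, int]) -> list[int]:
    return [vocab.get(tok, vocab[UNK]) for tok in tokens]


def softmax_params(hidden_dim: int, vocab_size: int) -> int:
    """Weights plus biases of a plain dense output layer over the vocabulary."""
    return vocab_size * (hidden_dim + 1)


corpus = "the cat sat on the mat the dog sat".split()
vocab = build_vocab(corpus)
print(vocab)                                 # {'<unk>': 0, 'sat': 1, 'the': 2}
print(encode("the cat sat".split(), vocab))  # [2, 0, 1]
print(softmax_params(512, 50_000))           # 25650000
```

Raising `min_count` shrinks the output layer but sends more words to `<unk>`; lowering it keeps more words but leaves each rare one with very little training signal.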

What Are The Different Kinds Of Tokenization Used In NLP?

Recent NLP methods, powered by deep learning, interpret tokens within the context in which they appear, including very long contexts.

That ability mitigates the heteronym problem we saw above and also makes NLP systems more robust to rare tokens, because the system can infer their “meaning” from their context.

These relatively new capabilities in the world of NLP have changed the way we tokenize text. Non-deep-learning systems typically tokenize with a pipeline approach.

First, the text is split into candidate tokens (naively, by splitting on whitespace, or with more sophisticated heuristics). Then similar tokens are merged (walked and walking would both be merged into walk), and noisy tokens, often called stop words (such as an, the, I), are removed.
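A toy version of such a pipeline might look like the sketch below. The stop-word list and the suffix-stripping `crude_stem` function are hypothetical stand-ins for real resources such as a full stop-word list or the Porter stemmer. It removes stop words before stemming, a small reordering of the steps above, so the stemmer never mangles a stop word (for example, turning “this” into “thi”).

```python
import re

# Hypothetical, tiny stop-word list; real pipelines use much longer ones.
STOP_WORDS = {"a", "an", "the", "i", "and", "she", "is", "to", "this"}


def crude_stem(word: str) -> str:
    """Strip one common English suffix: a toy stand-in for a real stemmer."""
    for suffix in ("ing", "ed", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def pipeline_tokenize(text: str) -> list[str]:
    candidates = re.findall(r"[a-z]+", text.lower())          # 1. split into candidates
    content = [w for w in candidates if w not in STOP_WORDS]  # 2. drop noisy tokens
    return [crude_stem(w) for w in content]                   # 3. merge similar tokens


print(pipeline_tokenize("I walked the dog and she is walking to the park."))
# ['walk', 'dog', 'walk', 'park']
```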

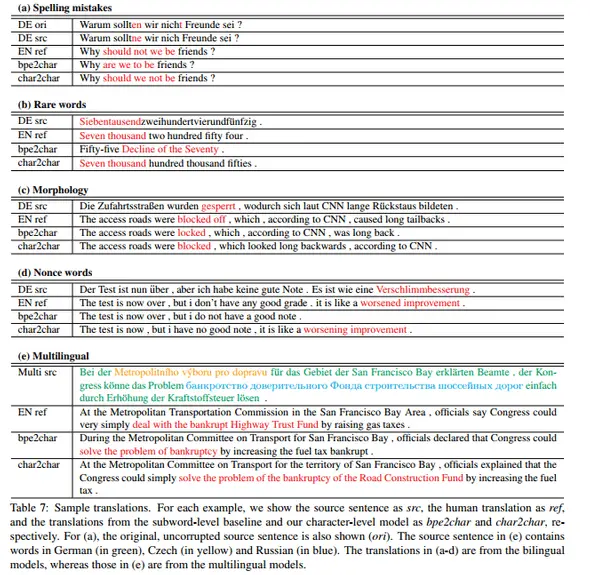

More modern tokenization schemes, often called subword tokenizers, such as SentencePiece or byte-pair encoding (BPE), split and merge tokens into more complicated forms.

For example, BPE represents an open-ended set of words with a fixed, finite vocabulary by expressing some things we’d perceive as a single token as two or more tokens. Sometimes you’ll even see a single character that BPE splits into two or even three tokens; this happens with byte-level BPE, which works on the UTF-8 bytes of the text rather than on characters.
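The core of BPE fits in a short sketch, following the merge procedure popularized for NLP by Sennrich et al. (2016): start from characters plus an end-of-word marker, repeatedly merge the most frequent adjacent pair, then apply the learned merges in order to segment new words. The toy word counts are hypothetical and ties are broken by first occurrence; production tokenizers such as SentencePiece differ in many details. The last lines show why a single visible character can become several base tokens in byte-level BPE.

```python
from collections import Counter


def pair_counts(vocab):
    """Count adjacent symbol pairs, weighted by word frequency."""
    pairs = Counter()
    for symbols, freq in vocab.items():
        for a, b in zip(symbols, symbols[1:]):
            pairs[(a, b)] += freq
    return pairs


def merge_pair(pair, symbols):
    """Replace each occurrence of `pair` in a symbol tuple with one merged symbol."""
    merged, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return tuple(merged)


def learn_bpe(word_counts, num_merges):
    """Learn merge rules, starting from characters plus an end-of-word marker."""
    vocab = {tuple(word) + ("</w>",): n for word, n in word_counts.items()}
    merges = []
    for _ in range(num_merges):
        pairs = pair_counts(vocab)
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # ties: first pair seen wins
        merges.append(best)
        vocab = {merge_pair(best, s): n for s, n in vocab.items()}
    return merges


def segment(word, merges):
    """Apply learned merges, in order, to split a (possibly unseen) word."""
    symbols = tuple(word) + ("</w>",)
    for pair in merges:
        symbols = merge_pair(pair, symbols)
    return list(symbols)


# Hypothetical toy corpus: word -> frequency.
counts = {"low": 5, "lower": 2, "newest": 6, "widest": 3}
merges = learn_bpe(counts, num_merges=5)
print(merges)
# [('e', 's'), ('es', 't'), ('est', '</w>'), ('l', 'o'), ('lo', 'w')]
print(segment("lowest", merges))  # ['low', 'est</w>']

# Byte-level BPE starts from UTF-8 bytes, so one Hebrew letter is two base tokens.
print(list("ס".encode("utf-8")))  # [215, 161]
```

“lowest” never appears in the toy corpus, so a word-level vocabulary would have no entry for it, yet BPE still segments it into known pieces.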

One emerging trend is to forgo tokenization nearly entirely and run NLP algorithms at the character level.

That means our models process raw characters, must learn their meaning implicitly, and have to deal with much longer sequences. Character-level NLP lets us skip the subtleties of tokenization and avoid the mistakes it may introduce, sometimes with remarkable results.
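A minimal sketch of the character-level idea, assuming each character’s Unicode code point serves directly as its token ID (real character-level models may instead learn a character vocabulary or work on raw UTF-8 bytes). Any string can be encoded and decoded losslessly, but the sequence is several times longer than a word-level one.

```python
def char_encode(text: str) -> list[int]:
    # One token per character; its ID is simply the Unicode code point,
    # so no string is ever out of vocabulary.
    return [ord(ch) for ch in text]


def char_decode(ids: list[int]) -> str:
    return "".join(chr(i) for i in ids)


sentence = "Tokenization defines what our NLP models can express."
ids = char_encode(sentence)
print(len(sentence.split()), "word tokens vs", len(ids), "character tokens")
# 8 word tokens vs 53 character tokens
print(char_decode(char_encode("הספר")) == "הספר")  # True: lossless, even for Hebrew
```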

Often at the start of an NLP project, the tokenization scheme’s nuances aren’t the most crucial issue to be dealt with. However, as projects evolve, understanding and adjusting the tokenization scheme is often one of the easiest ways to improve model performance.

Tokenization And Annotation

Modern NLP is fueled by supervised learning. We annotate documents to create training data for our ML models. When dealing with token classification tasks, also known as sequence labeling, it’s crucial that our annotations align with the tokenization scheme or that we know how to align them downstream.
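A minimal sketch of aligning annotations downstream, assuming annotations are stored as character offsets (end-exclusive) and the model expects BIO tags (B- marks the first token of an entity, I- the rest, O everything else). The helper names and regex tokenizers are hypothetical, not any particular tool’s API. A span is tagged onto every token it overlaps, and any span whose boundaries fall inside a token is reported as misaligned.

```python
import re


def tokenize_with_offsets(text: str, pattern: str = r"\w+|[^\w\s]"):
    """Return (token, start, end) triples; end is exclusive, like Python slices."""
    return [(m.group(), m.start(), m.end()) for m in re.finditer(pattern, text)]


def spans_to_bio(text, spans, pattern=r"\w+|[^\w\s]"):
    """Project character-offset annotations (start, end, label) onto BIO token tags.

    Every token that overlaps a span is tagged. Spans whose boundaries fall inside
    a token cannot be represented exactly, so they are also returned as misaligned.
    """
    tokens = tokenize_with_offsets(text, pattern)
    tags = ["O"] * len(tokens)
    misaligned = []
    for start, end, label in spans:
        hits = [i for i, (_, s, e) in enumerate(tokens) if s < end and e > start]
        if not hits or tokens[hits[0]][1] != start or tokens[hits[-1]][2] != end:
            misaligned.append((start, end, label))
        for k, i in enumerate(hits):
            tags[i] = ("B-" if k == 0 else "I-") + label
    return [tok for tok, _, _ in tokens], tags, misaligned


text = "She joined a New York-based startup."
spans = [(13, 21, "LOC")]  # text[13:21] == "New York"

print(spans_to_bio(text, spans))
print(spans_to_bio(text, spans, pattern=r"\S+"))  # whitespace tokenizer
```

With the punctuation-aware pattern, “New York” lines up exactly with two tokens. With a whitespace tokenizer, “York-based” is a single token, so the entity can only be represented by over-extending it, which is exactly the kind of mismatch worth catching before training.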

LightTag

My company, LightTag, makes text annotation tools, and our customers build NLP models with the resulting annotations. We’ve seen time and time again that, for complex texts and annotation schemes, getting the tokenization right is the highest-ROI activity after annotation itself.

That’s because the downstream model can only “see” and output tokens according to the tokenization scheme, and when that scheme doesn’t perfectly align with the modelers’ goal, the results are less than perfect.

Today, many companies start their annotation work after selecting a model and try to annotate according to its tokenization constraints. As a parting piece of advice, do the opposite. When you annotate text, do so according to your desired outcomes, and later select models and tokenization schemes that align with that outcome.