Text classification is the process of categorizing texts into different groups. spaCy makes custom text classification structured and convenient through its textcat component.

Text classification is often used for tasks such as sorting movie or hotel reviews by sentiment, grouping news articles, identifying the primary topic of a text, and classifying customer support emails by complaint type. In many real-life cases, a custom text classification model trained on your own data proves more accurate than a generic one. This article shows you how to build a custom text classifier using the spaCy library.

Training Custom Text Classification Model in spaCy. Photo by Jesse Dodds.

Contents

- What is a custom Text Classifier model?

- Getting started with custom text classification in spaCy

- How to prepare training data in the desired format

- How to write the evaluation function?

- Training the model and printing evaluation scores

- Test the model with new examples

Introduction

Let’s look at a typical use case for text classification.

I’m sure each of us has filled in a feedback form after buying a book from Amazon or ordering food from Swiggy. These reviews help companies analyze problems and improve their service. But let’s take a peek into this process. Customers fill out millions of reviews. Is it possible to go through each of them manually and see whether it’s an appreciation or a complaint?

Of course not! The first step would be to classify all the reviews into positive and negative categories. Then, you can easily analyze how many people were not satisfied and why. This process of categorizing texts into different groups/labels is called text classification.

Text classification can be performed in different ways. Here, we’ll use the spaCy package to classify texts. spaCy has become a very popular library for NLP and provides state-of-the-art components. In practice, a custom model trained on your own data is usually preferred for classification. In the next section, let me first introduce what a custom model is and why we need it.

What is a custom Text Classifier model?

Let’s say you have a bunch of movie or customer reviews and you wish to classify each review as positive or negative. If you rely on a generic classifier that was not trained on this kind of text, the results are not likely to be great. Instead, what if you collect a labeled dataset of movie/customer reviews and train your model on that?

The results will be far more accurate! You can do this by training a custom text classifier. You first train it on relevant labeled data, and it is then ready to use on similar text. This is especially helpful when the amount of data is huge.

In the next sections, I’ll guide you step-by-step on how to train your text classification model in spaCy.

Getting started with custom text classification in spaCy

spaCy is an advanced library for performing NLP tasks like classification. One significant reason why spaCy is so widely preferred is that it lets you easily build or extend a text classification model. We shall be using this feature.

Now, I’ll demonstrate how to train the text classifier using a real example. Suppose you have text data containing customer reviews of a company’s clothes. The task is to train our model on this data. Finally, the model should be able to classify a new, unseen review as positive or negative.

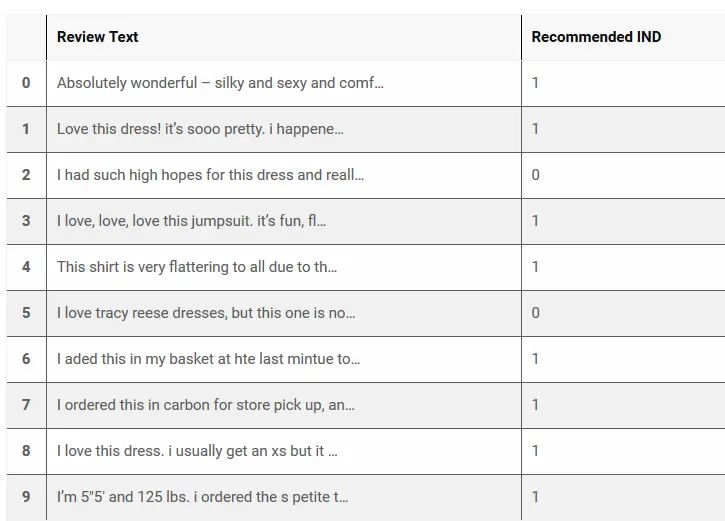

You can download the dataset containing reviews on clothes here. Read the CSV file into a pandas data frame and view the contents. For our task, we require only 2 columns: the "Review Text" column containing product reviews and the "Recommended IND" column storing labels. Let’s extract these columns into a pandas data frame named reviews.

# Import pandas & read csv file

import pandas as pd

reviews=pd.read_csv("https://raw.githubusercontent.com/hanzhang0420/Women-Clothing-E-commerce/master/Womens%20Clothing%20E-Commerce%20Reviews.csv")

# Extract desired columns and view the dataframe

reviews = reviews[['Review Text','Recommended IND']].dropna()

reviews.head(10)

You can observe that the label is 1 for positive reviews and 0 for negative reviews. We need to train a model that can categorize new, unseen reviews.

This is the raw data we have. Since we are using spaCy to train our model, let’s import the package. After importing, you can load a pre-trained model like "en_core_web_sm". We will add the text classifier to this model later. For any spaCy model, you can view the components present in the current pipeline through the pipe_names attribute. Note that the code in this article uses the spaCy v2 API.

# Import spaCy, load model

import spacy

nlp=spacy.load("en_core_web_sm")

nlp.pipe_names

Output:

['tagger', 'parser', 'ner']

You can see that the pipeline has tagger, parser and NER. It doesn’t have a text classifier. So, let’s just add the built-in textcat pipeline component of spaCy for text classification to our pipeline. The add_pipe() method can be used for this.

# Adding the built-in textcat component to the pipeline.

textcat = nlp.create_pipe("textcat", config={"exclusive_classes": True, "architecture": "simple_cnn"})

nlp.add_pipe(textcat, last=True)

nlp.pipe_names

Output:

['tagger', 'parser', 'ner', 'textcat']

Now, we are going to train the textcat with our reviews dataset using the simple_cnn architecture.

First, you need to add the desired labels to the pipeline component. In this case, it is POSITIVE and NEGATIVE as per the reviews. Add these labels to textcat using add_label function.

# Adding the labels to textcat

textcat.add_label("POSITIVE")

textcat.add_label("NEGATIVE")

Output:

1

How to prepare training data in the desired format?

Your default model with textcat is ready. You just need to prepare the data in the required format.

You can write a function load_data() that works on a list of tuples, where each tuple contains the text and its label value (0 or 1). The code below demonstrates how to convert our reviews dataset into this format.

# Converting the dataframe into a list of tuples

reviews['tuples'] = reviews.apply(lambda row: (row['Review Text'],row['Recommended IND']), axis=1)

train =reviews['tuples'].tolist()

train[:10]

Output:

[('Absolutely wonderful - silky and sexy and comfortable', 1),

('Love this dress! it\'s sooo pretty. i happened to find it in a store, and i\'m glad i did bc i never would have ordered it online bc it\'s petite. i bought a petite and am 5\'8". i love the length on me- hits just a little below the knee. would definitely be a true midi on someone who is truly petite.',

1),

('I had such high hopes for this dress and really wanted it to work for me. i initially ordered the petite small (my usual size) but i found this to be outrageously small. so small in fact that i could not zip it up! i reordered it in petite medium, which was just ok. overall, the top half was comfortable and fit nicely, but the bottom half had a very tight under layer and several somewhat cheap (net) over layers. imo, a major design flaw was the net over layer sewn directly into the zipper - it c',

0),

("I love, love, love this jumpsuit. it's fun, flirty, and fabulous! every time i wear it, i get nothing but great compliments!",

1),

('This shirt is very flattering to all due to the adjustable front tie. it is the perfect length to wear with leggings and it is sleeveless so it pairs well with any cardigan. love this shirt!!!',

1),

('I love tracy reese dresses, but this one is not for the very petite. i am just under 5 feet tall and usually wear a 0p in this brand. this dress was very pretty out of the package but its a lot of dress. the skirt is long and very full so it overwhelmed my small frame. not a stranger to alterations, shortening and narrowing the skirt would take away from the embellishment of the garment. i love the color and the idea of the style but it just did not work on me. i returned this dress.',

0),

('I aded this in my basket at hte last mintue to see what it would look like in person. (store pick up). i went with teh darkler color only because i am so pale :-) hte color is really gorgeous, and turns out it mathced everythiing i was trying on with it prefectly. it is a little baggy on me and hte xs is hte msallet size (bummer, no petite). i decided to jkeep it though, because as i said, it matvehd everything. my ejans, pants, and the 3 skirts i waas trying on (of which i ]kept all ) oops.',

1),

("I ordered this in carbon for store pick up, and had a ton of stuff (as always) to try on and used this top to pair (skirts and pants). everything went with it. the color is really nice charcoal with shimmer, and went well with pencil skirts, flare pants, etc. my only compaint is it is a bit big, sleeves are long and it doesn't go in petite. also a bit loose for me, but no xxs... so i kept it and will decide later since the light color is already sold out in the smallest size...",

1),

('I love this dress. i usually get an xs but it runs a little snug in bust so i ordered up a size. very flattering and feminine with the usual retailer flair for style.',

1),

('I\'m 5"5\' and 125 lbs. i ordered the s petite to make sure the length wasn\'t too long. i typically wear an xs regular in retailer dresses. if you\'re less busty (34b cup or smaller), a s petite will fit you perfectly (snug, but not tight). i love that i could dress it up for a party, or down for work. i love that the tulle is longer then the fabric underneath.',

1)]
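Before going further, it can help to check how balanced the two labels are, since a skewed dataset affects how precision and recall should be read later. A minimal sketch using only the ten labels printed above (`sample_labels` is a hand-copied, illustrative list, not the full dataset):

```python
from collections import Counter

# Labels of the ten sample rows shown above (1 = recommended, 0 = not recommended)
sample_labels = [1, 1, 0, 1, 1, 0, 1, 1, 1, 1]

counts = Counter(sample_labels)
positive_share = counts[1] / len(sample_labels)

print(counts)          # how many reviews carry each label
print(positive_share)  # fraction of positive reviews in this sample
```

On the full list, the same idea would be `Counter(label for _, label in train)`.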

Next, the load_data() function defined below processes the train list.

The load_data() function performs the following steps:

– Shuffle the data using the random.shuffle() function. This prevents any training based on the order of examples.

– For each tuple in the input data, assign the category ‘POSITIVE’ or ‘NEGATIVE’ based on the label value and store it in cats.

– Use 80% of the input data for training and 20% for evaluation. You can change this proportion using the split parameter.

After defining the function, call it to get the texts and cats for both training and evaluation. You can use these to build the final training data as shown in the code below.

import random

def load_data(limit=0, split=0.8):
    train_data = train
    # Shuffle the data
    random.shuffle(train_data)
    # Keep at most `limit` examples (limit=0 keeps everything)
    train_data = train_data[-limit:]
    texts, labels = zip(*train_data)
    # get the categories for each review
    cats = [{"POSITIVE": bool(y), "NEGATIVE": not bool(y)} for y in labels]
    # Splitting the training and evaluation data
    split = int(len(train_data) * split)
    return (texts[:split], cats[:split]), (texts[split:], cats[split:])

n_texts=23486

# Calling the load_data() function

(train_texts, train_cats), (dev_texts, dev_cats) = load_data(limit=n_texts)

# Processing the final format of training data

train_data = list(zip(train_texts,[{'cats': cats} for cats in train_cats]))

train_data[:10]

Output:

[('I ordered this shirt in both white and coral. i absolutely love the design and coral color. the white was a bit see through, so i returned it. it would be cute with a cami under, but i just wanted something that did not require an extra layer. overall, it is a flattering fit and i love the detail on the back.',

{'cats': {'NEGATIVE': False, 'POSITIVE': True}}),

("I bought the extra small and although i love the style and unique color combo, it's pretty large in the collar area and without the right blouse/shirt underneath it makes my neck look really skinny. the rest of the coat is true to size, longer and straight. the design just doesn't have the right balance for me so i'm sending it back.",

{'cats': {'NEGATIVE': False, 'POSITIVE': True}}),

('At 5\'4", i guess i am just too short to pull this dress off! the colors are so pretty for summer, but there is just too much fabric... i felt like i was wearing a giant doily! however, there is not a doubt in my mind that taller girls would look fabulous in this!',

{'cats': {'NEGATIVE': True, 'POSITIVE': False}}),

("I love this top and wear it all the time. it's a gorgeous pale blue and feels amazing....it is a tighter fitting shirt so if you like looser clothes, size up",

{'cats': {'NEGATIVE': False, 'POSITIVE': True}}),

('Love the cotton knit, for the comfort, but from a distance this blouse looks dressier. the embroidery adds a nice flare. personally, i was happy that this is long enough to cover my bottom, as it gives it a nice lean look with skinny jeans and pants. i found that it ran a little bit, but choose to keep the size i ordered anyway.',

{'cats': {'NEGATIVE': False, 'POSITIVE': True}}),

("Beautiful suit. i had been eyeing this one for weeks and finally decided to just buy the darn thing. \r\nit is tiny, just beware. as in it doesn't cover much, especially on the booty. not a bad thing, but buyer beware :) if you are looking for more coverage, size up. i wear a 32d and ordered a medium top. good thing i didn't get a small. i have to get the band taken in a bit, but i need the coverage in the cup. \r\nfor the bottoms i also got a medium and usually wear a small or medium in swimwear bo",

{'cats': {'NEGATIVE': False, 'POSITIVE': True}}),

('Love these tights. fabric feels great, soft and comfy. took large so they fit perfectly at true waist for 5\'6 height. some retailer tights i have bought in large, but they are too short for 5\'6" person.\nthe color of this paid is a subtle green. looks great with black skirt and green sweater.',

{'cats': {'NEGATIVE': False, 'POSITIVE': True}}),

('I would give it a 3.5 if half star possible. like other reviews said, the material is a little cheap and back zip got stuck when i tried to unzip it before trying it on. overall, nice color and flattering look that could be dress up or down. i am 5\'8", 155lbs, 36b and m is a little snug for me on the top.',

{'cats': {'NEGATIVE': False, 'POSITIVE': True}}),

('So cute! rompers are hard because they are so cute but rarely work for all body types. this one is so super cute. the material is so soft and comfy, feels silky. i got a small and fits perfect. i\'m 5\'4" and 117lbs so it runs very true to size when most clothes from retailer run big. i love rompers but only have one other one. this is by far my favorite! i can\'t wait until it\'s much warmer and i can wear it all the time.',

{'cats': {'NEGATIVE': False, 'POSITIVE': True}}),

('I like that the top is lightweight and very comfortable and is flattering. i can see wearing this over the holidays for entertaining. it looks festive and pretty - something easy to pop on and look pulled together in a flash.',

{'cats': {'NEGATIVE': False, 'POSITIVE': True}})]

The above output shows you the format of the final desired training data.
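To see the shuffle, label mapping, and split on a small scale, here is a minimal sketch on made-up data. The helper `split_reviews`, its `seed` parameter, and the toy reviews are hypothetical and only for illustration; unlike load_data() above, it takes the data as an argument and shuffles a copy.

```python
import random

def split_reviews(pairs, split=0.8, seed=0):
    """Shuffle (text, label) pairs and split them into train and dev sets."""
    data = list(pairs)                  # copy so the caller's list keeps its order
    random.Random(seed).shuffle(data)   # seeded shuffle for repeatable splits
    texts, labels = zip(*data)
    # Map each 0/1 label to the dictionary format used for textcat
    cats = [{"POSITIVE": bool(y), "NEGATIVE": not bool(y)} for y in labels]
    cut = int(len(data) * split)
    train_set = list(zip(texts[:cut], [{"cats": c} for c in cats[:cut]]))
    dev_set = (texts[cut:], cats[cut:])
    return train_set, dev_set

# Toy data (hypothetical reviews)
toy = [("love it", 1), ("too small", 0), ("great fit", 1),
       ("poor fabric", 0), ("so comfy", 1)]
toy_train, (toy_dev_texts, toy_dev_cats) = split_reviews(toy)

print(len(toy_train), len(toy_dev_texts))  # 4 training examples, 1 held out
print(toy_train[0])
```

Passing a fixed seed makes the split repeatable, which is convenient when comparing runs.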

How to write the evaluation function?

So far, we have prepared the training data in the desired format and stored it in the train_data variable. We also have the text classifier component of the model in textcat. So, we can proceed to train textcat on our train_data. But isn’t there something we are missing?

For any model we train, it’s important to check whether it performs as expected. This is called evaluating the model. It is an optional step, but highly recommended because it tells you how well the model works on data it has not seen. If you recall, the load_data() function set aside about 20% of the original data for evaluation. We’ll use this to test how good the training was.

So, let me guide you through writing a function evaluate() that performs this evaluation. We will call this evaluate() function later during training to see the performance.

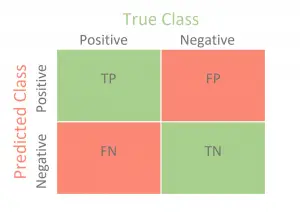

This function takes the tokenizer, the textcat component, and the evaluation texts and categories as input. For each text in the evaluation data, it reads the score from the prediction made. Based on this, it counts the true positives, true negatives, false positives and false negatives.

What do the above terms mean?

- True Positive (tp) : If the actual label is 1 and the prediction is also 1

- True Negative (tn) : If the actual label is 0 and the prediction is also 0

- False Positive (fp) : If the actual label is 0, but the prediction is 1

- False Negative (fn) : If the actual label is 1, but the prediction is 0
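As a small illustration of these four terms, the sketch below counts them for a handful of made-up labels. The lists `actual` and `predicted` are hypothetical.

```python
# Hypothetical true labels and model predictions (1 = positive, 0 = negative)
actual    = [1, 1, 1, 0, 0, 1, 0, 1]
predicted = [1, 0, 1, 0, 1, 1, 0, 1]

tp = tn = fp = fn = 0
for a, p in zip(actual, predicted):
    if a == 1 and p == 1:
        tp += 1
    elif a == 0 and p == 0:
        tn += 1
    elif a == 0 and p == 1:
        fp += 1
    else:  # a == 1 and p == 0
        fn += 1

print(tp, tn, fp, fn)  # 4 2 1 1
```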

How to assign a score based on these terms?

Once you get the above values from the model’s predictions, you can compute the evaluation metrics. These scores tell you how good the model is.

Precision is the percentage of the model’s positive (1) predictions that are correct, that is, tp / (tp + fp). Even for a model with high precision, you also want to know what percentage of ALL actual 1’s were covered. This is called recall, that is, tp / (tp + fn).

A good model should have high precision as well as high recall. So ideally, you want a measure that combines both aspects in a single metric: the F1 score.

F1 Score = (2 * Precision * Recall) / (Precision + Recall)
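As a worked example, the sketch below computes precision, recall, and F1 from raw counts. The counts are made up for illustration, and `prf` is a hypothetical helper name. The last line also applies the formula to the precision (0.906) and recall (0.962) reported for the first training iteration later in this article.

```python
def prf(tp, fp, fn):
    """Return (precision, recall, f1) from tp, fp and fn counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1

# Made-up counts: 8 true positives, 2 false positives, 1 false negative
precision, recall, f1 = prf(tp=8, fp=2, fn=1)
print(round(precision, 3), round(recall, 3), round(f1, 3))  # 0.8 0.889 0.842

# F1 from a precision of 0.906 and a recall of 0.962
print(round(2 * 0.906 * 0.962 / (0.906 + 0.962), 3))  # 0.933
```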

If you want to obtain more clarity on the evaluation metric, check out various evaluation metrics for classification.

The code below defines a function evaluate() that performs the evaluation discussed above when called.

def evaluate(tokenizer, textcat, texts, cats):
    docs = (tokenizer(text) for text in texts)
    tp = 0.0   # True positives
    fp = 1e-8  # False positives (tiny value avoids division by zero)
    fn = 1e-8  # False negatives (tiny value avoids division by zero)
    tn = 0.0   # True negatives
    for i, doc in enumerate(textcat.pipe(docs)):
        gold = cats[i]
        for label, score in doc.cats.items():
            if label not in gold:
                continue
            # Score only the POSITIVE label; NEGATIVE is its complement
            if label == "NEGATIVE":
                continue
            if score >= 0.5 and gold[label] >= 0.5:
                tp += 1.0
            elif score >= 0.5 and gold[label] < 0.5:
                fp += 1.0
            elif score < 0.5 and gold[label] < 0.5:
                tn += 1
            elif score < 0.5 and gold[label] >= 0.5:
                fn += 1
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    if (precision + recall) == 0:
        f_score = 0.0
    else:
        f_score = 2 * (precision * recall) / (precision + recall)
    return {"textcat_p": precision, "textcat_r": recall, "textcat_f": f_score}

# Number of training iterations

n_iter=10

The evaluation function is ready too! Time to get to the final training.

Training the model and printing evaluation scores

The data is stored in the train_data variable and the component in textcat.

Before training, you need to disable all pipeline components except textcat. This prevents the other components from being affected during training. It can be accomplished through the disable_pipes() method. Next, call the begin_training() function, which returns an optimizer.

You can also fix the number of times the model iterates over the examples using the n_iter variable. Too few iterations leave the model undertrained, while too many cost time and can lead to overfitting. In each iteration, we loop over the training examples and partition them into batches using spaCy’s minibatch and compounding helpers.

The minibatch() function of spaCy returns the training data in batches. It takes the size parameter to denote the batch size. You can use the utility function compounding() to generate an infinite series of compounding values.

The compounding() function takes three inputs: start (the first value), stop (the maximum value that can be generated), and compound, the factor by which each value is multiplied to get the next one. If this is not clear, check out this link for understanding.
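To make the batching behaviour concrete, here is a plain-Python sketch that mimics what the text above describes. `compounding_sketch` and `minibatch_sketch` are hypothetical stand-ins written for illustration, not spaCy's own code, and the factor 1.5 is chosen only so that the growth is easy to see (the training code in this article uses 1.001).

```python
from itertools import islice

def compounding_sketch(start, stop, compound):
    """Yield start, start*compound, start*compound**2, ... capped at stop."""
    value = float(start)
    while True:
        yield min(value, stop)
        value *= compound

def minibatch_sketch(items, sizes):
    """Cut items into consecutive batches whose sizes come from the sizes iterator."""
    items = iter(items)
    while True:
        batch = list(islice(items, int(next(sizes))))
        if not batch:
            break
        yield batch

sizes = list(islice(compounding_sketch(4.0, 32.0, 1.5), 8))
print(sizes)  # [4.0, 6.0, 9.0, 13.5, 20.25, 30.375, 32.0, 32.0]

batches = list(minibatch_sketch(range(100), compounding_sketch(4.0, 32.0, 1.5)))
print([len(b) for b in batches])  # [4, 6, 9, 13, 20, 30, 18]
```

Starting with small batches and growing them gradually is the idea behind passing compounding values as the batch size.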

For each batch, the model is updated through the nlp.update() command. The parameters of nlp.update() are:

- docs: This expects a batch of texts as input. You can pass each batch to the zip function, which separates it into texts and annotations.

- golds: The annotations obtained from zip go here.

- sgd: The optimizer returned by begin_training().

- drop: This represents the dropout rate.

- losses: A dictionary to hold the losses for each pipeline component. Create an empty dictionary and pass it here.

At the end of each iteration, you can evaluate the predictions made by the model by calling the evaluate() function we defined in the previous section.

from spacy.util import minibatch, compounding

# Disabling other components
other_pipes = [pipe for pipe in nlp.pipe_names if pipe != 'textcat']

with nlp.disable_pipes(*other_pipes):  # only train textcat
    optimizer = nlp.begin_training()
    print("Training the model...")
    print('{:^5}\t{:^5}\t{:^5}\t{:^5}'.format('LOSS', 'P', 'R', 'F'))

    # Performing training
    for i in range(n_iter):
        losses = {}
        batches = minibatch(train_data, size=compounding(4., 32., 1.001))
        for batch in batches:
            texts, annotations = zip(*batch)
            nlp.update(texts, annotations, sgd=optimizer, drop=0.2,
                       losses=losses)

        # Calling the evaluate() function and printing the scores
        with textcat.model.use_params(optimizer.averages):
            scores = evaluate(nlp.tokenizer, textcat, dev_texts, dev_cats)
        print('{0:.3f}\t{1:.3f}\t{2:.3f}\t{3:.3f}'
              .format(losses['textcat'], scores['textcat_p'],
                      scores['textcat_r'], scores['textcat_f']))

Output:

Training the model…

LOSS P R F

8.673 0.906 0.962 0.933

5.705 0.911 0.961 0.935

4.112 0.918 0.957 0.937

3.117 0.920 0.954 0.937

2.515 0.923 0.950 0.937

2.048 0.922 0.948 0.935

1.886 0.926 0.945 0.936

1.496 0.922 0.946 0.934

1.416 0.923 0.945 0.934

1.094 0.924 0.949 0.936

You can observe the evaluation scores: the loss falls with every iteration, while the F-score stays between 0.933 and 0.937. Both training and evaluation are complete!

Test the model with new examples

Finally, here we are! The model is ready to use now.

You can create a spaCy Doc from any new, unseen review of clothes. Its classification is stored in the doc.cats attribute. The Doc.cats attribute stores a dictionary that maps each label to its score for the document.

# Testing the model

test_text="I hate this dress"

doc=nlp(test_text)

doc.cats

Output:

{'NEGATIVE': 0.9998667240142822, 'POSITIVE': 0.0001332318497588858}

You can see that the 'NEGATIVE' score is high, which classifies this as a negative review. This output is as expected. Yay!
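If you need a single label rather than the full score dictionary, one simple option is to take the highest-scoring key. A minimal sketch, using the scores printed above as a plain dictionary (`example_cats` and `top_label` are illustrative names):

```python
# Scores copied from the output above
example_cats = {'NEGATIVE': 0.9998667240142822, 'POSITIVE': 0.0001332318497588858}

# Pick the label with the highest score
top_label = max(example_cats, key=example_cats.get)
print(top_label)  # NEGATIVE
```

With a real document, the same expression would be applied to doc.cats.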

Conclusion

I hope you have understood how to train a custom text classification model with spaCy. This has wide applications in every field. Similarly, spaCy also provides an option to train your own custom NER model. You can check out the post here. We are coming up with more such juicy articles, so stay tuned!
