We have covered the basic concepts about linear regression. Besides these, you need to understand that linear regression is based on certain underlying assumptions that must be taken care especially when working with multiple Xs. Once you are familiar with that, the advanced regression models will show you around the various special cases where a different form of regression would be more suitable.

2. Example Problem

For this analysis, we will use the cars dataset that comes with R by default.

cars is a standard built-in dataset, that makes it convenient to show linear regression in a simple and easy to understand fashion.

You can access this dataset by typing in cars in your R console.

You will find that it consists of 50 observations(rows) and 2 variables (columns) dist and speed. Lets print out the first six observations here.

head(cars) # display the first 6 observations

#> speed dist

#> 1 4 2

#> 2 4 10

#> 3 7 4

#> 4 7 22

#> 5 8 16

#> 6 9 10The goal here is to establish a mathematical equation for dist as a function of speed, so you can use it to predict dist when only the speed of the car is known.

So it is desirable to build a linear regression model with the response variable as dist and the predictor as speed.

Before we begin building the regression model, it is a good practice to analyse and understand the variables.

The graphical analysis and correlation study below will help with this.

3. Graphical Analysis

The aim of this exercise is to build a simple regression model that you can use to predict Distance (dist).

This is possible by establishing a mathematical formula between Distance (dist) and Speed (speed).

But before jumping in to the syntax, lets try to understand these variables graphically.

Typically, for each of the predictors, the following plots help visualise the patterns:

- Scatter plot: Visualise the linear relationship between the predictor and response

- Box plot: To spot any outlier observations in the variable. Having outliers in your predictor can drastically affect the predictions as they can affect the direction/slope of the line of best fit.

- Density plot: To see the distribution of the predictor variable. Ideally, a close to normal distribution (a bell shaped curve), without being skewed to the left or right is preferred.

Let us see how to make each one of them.

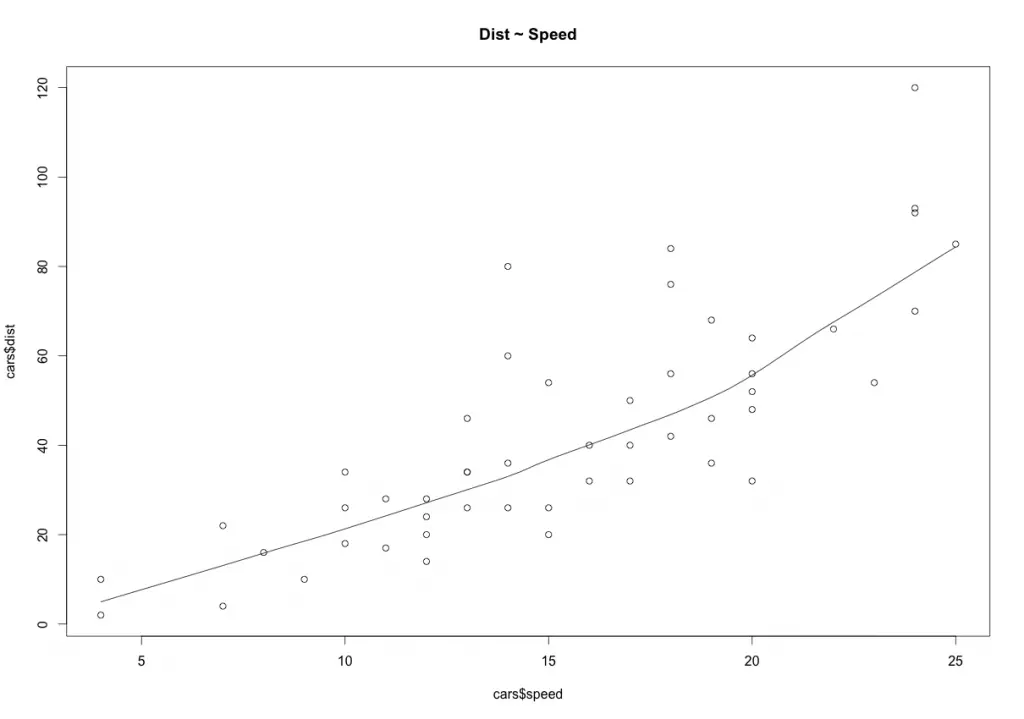

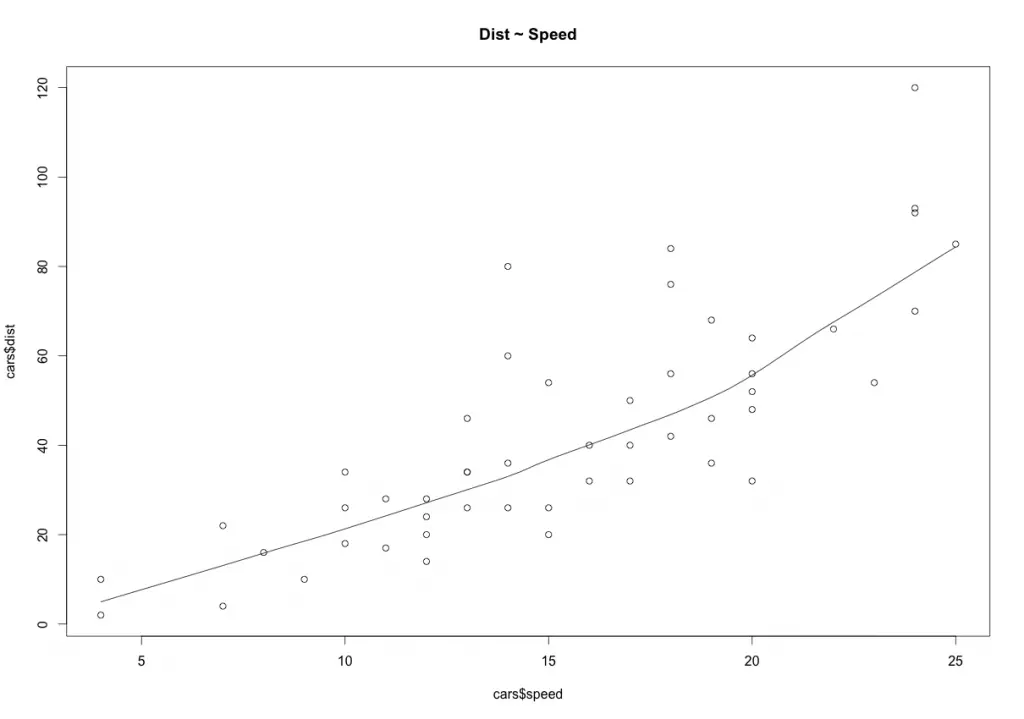

Using Scatter Plot To Visualise The Relationship

Scatter plots can help visualise linear relationships between the response and predictor variables.

Ideally, if you have many predictor variables, a scatter plot is drawn for each one of them against the response, along with the line of best fit as seen below.

scatter.smooth(x=cars$speed, y=cars$dist, main="Dist ~ Speed") # scatterplot

The scatter plot along with the smoothing line above suggests a linear and positive relationship between the dist and speed.

This is a good thing.

Because, one of the underlying assumptions of linear regression is, the relationship between the response and predictor variables is linear and additive.

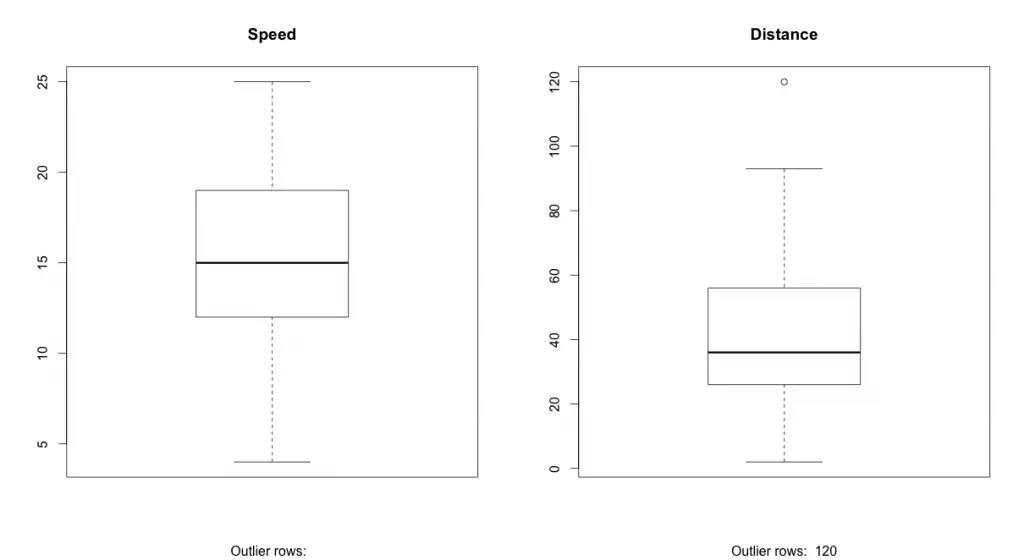

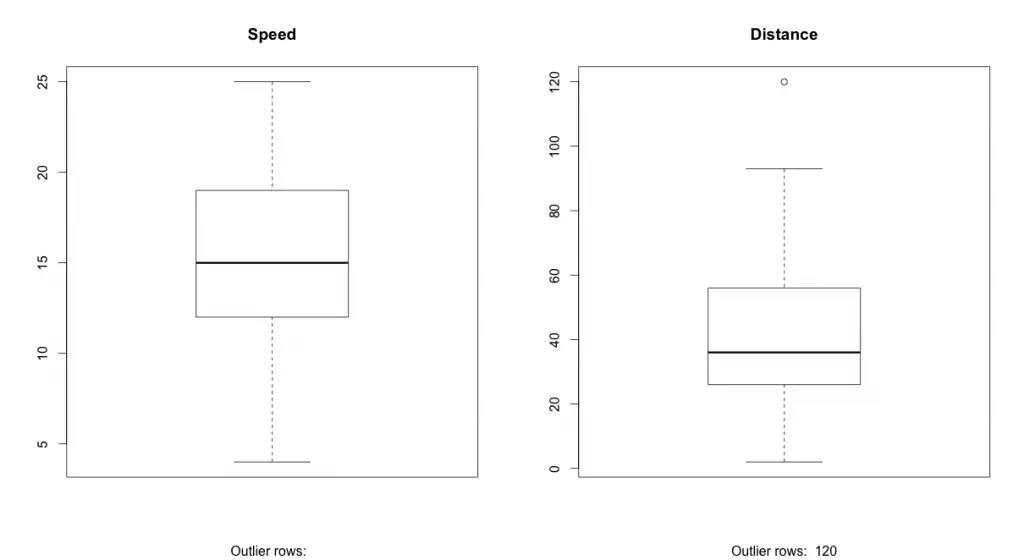

Using BoxPlot To Check For Outliers

Generally, an outlier is any datapoint that lies outside the 1.5 * inter quartile range (IQR).

IQR is calculated as the distance between the 25th percentile and 75th percentile values for that variable.

par(mfrow=c(1, 2)) # divide graph area in 2 columns

boxplot(cars$speed, main="Speed", sub=paste("Outlier rows: ", boxplot.stats(cars$speed)$out)) # box plot for 'speed'

boxplot(cars$dist, main="Distance", sub=paste("Outlier rows: ", boxplot.stats(cars$dist)$out)) # box plot for 'distance'

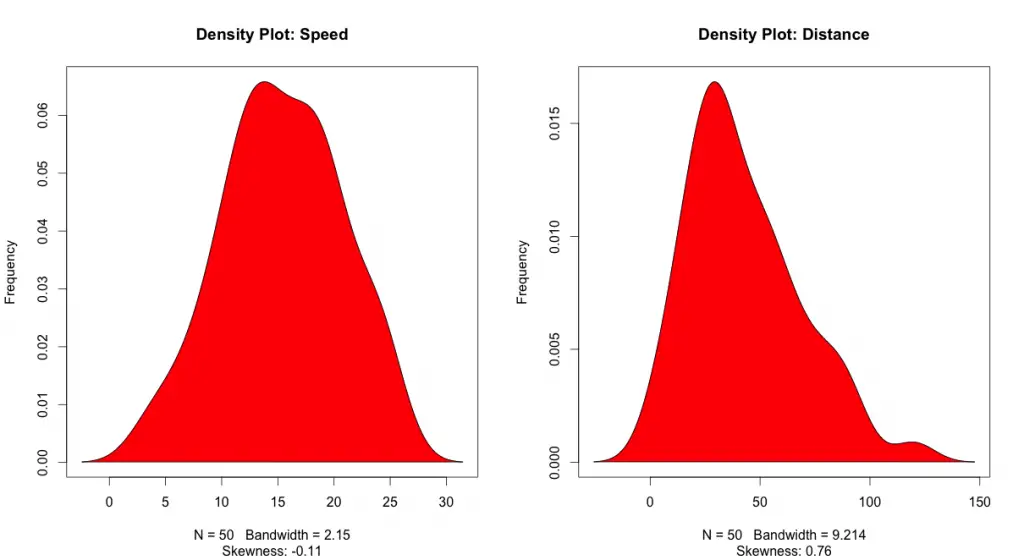

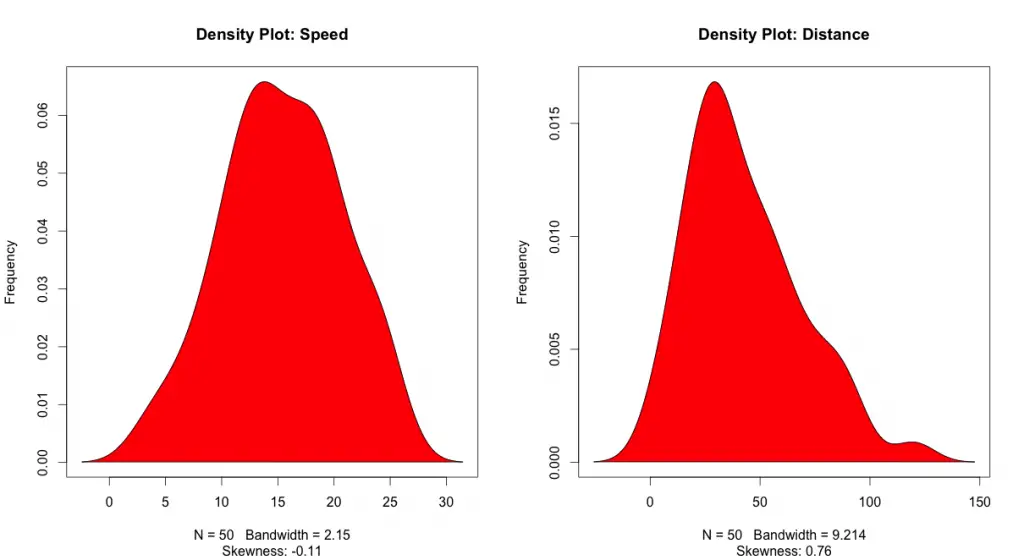

Using Density Plot To Check If Response Variable Is Close To Normal

library(e1071) # for skewness function

par(mfrow=c(1, 2)) # divide graph area in 2 columns

plot(density(cars$speed), main="Density Plot: Speed", ylab="Frequency", sub=paste("Skewness:", round(e1071::skewness(cars$speed), 2))) # density plot for 'speed'

polygon(density(cars$speed), col="red")

plot(density(cars$dist), main="Density Plot: Distance", ylab="Frequency", sub=paste("Skewness:", round(e1071::skewness(cars$dist), 2))) # density plot for 'dist'

polygon(density(cars$dist), col="red")

4. What is Correlation Analysis?

Correlation analysis studies the strength of relationship between two continuous variables. It involves computing the correlation coefficient between the the two variables.

So what is correlation? And how is it helpful in linear regression?

Correlation is a statistical measure that shows the degree of linear dependence between two variables.

In order to compute correlation, the two variables must occur in pairs, just like what we have here with speed and dist.

Correlation can take values between -1 to +1.

If one variables consistently increases with increasing value of the other, then they have a strong positive correlation (value close to +1).

Similarly, if one consistently decreases when the other increase, they have a strong negative correlation (value close to -1).

A value closer to 0 suggests a weak relationship between the variables.

A low correlation (-0.2 < x < 0.2) probably suggests that much of variation of the response variable (Y) is unexplained by the predictor (X). In that case, you should probably look for better explanatory variables.

If you observe the cars dataset in the R console, for every instance where speed increases, the distance also increases along with it.

That means, there is a strong positive relationship between them. So, the correlation between them will be closer to 1.

However, correlation doesn’t imply causation.

In other words, if two variables have high correlation, it does not mean one variable ’causes’ the value of the other variable to increase.

Correlation is only an aid to understand the relationship. You can only rely on logic and business reasoning to make that judgement.

So, how to compute correlation in R?

Simply use the cor() function with the two numeric variables as arguments.

cor(cars$speed, cars$dist) # calculate correlation between speed and distance

#> [1] 0.80689495. Building the Linear Regression Model

Now that you have seen the linear relationship pictorially in the scatter plot and through correlation, let’s try building the linear regression model.

The function used for building linear models is lm().

The lm() function takes in two main arguments:

- Formula

- Data

The data is typically a data.frame object and the formula is a object of class formula.

But the most common convention is to write out the formula directly as written below.

linearMod <- lm(dist ~ speed, data=cars) # build linear regression model on full data

print(linearMod)

#> Call:

#> lm(formula = dist ~ speed, data = cars)

#>

#> Coefficients:

#> (Intercept) speed

#> -17.579 3.932By building the linear regression model, we have established the relationship between the predictor and response in the form of a mathematical formula.

That is Distance (dist) as a function for speed.

For the above output, you can notice the �Coefficients� part having two components: Intercept: -17.579, speed: 3.932.

These are also called the beta coefficients. In other words,

dist?=?Intercept?+?(????speed)

=> dist = ?17.579 + 3.932?speed

6. Linear Regression Diagnostics

Now the linear model is built and you have a formula that you can use to predict the dist value if a corresponding speed is known.

Is this enough to actually use this model? NO!

Because, before using a regression model to make predictions, you need to ensure that it is statistically significant. But How do you ensure this?

Lets begin by printing the summary statistics for linearMod.

summary(linearMod) # model summary

#> Call:

#> lm(formula = dist ~ speed, data = cars)

#>

#> Residuals:

#> Min 1Q Median 3Q Max

#> -29.069 -9.525 -2.272 9.215 43.201

#>

#> Coefficients:

#> Estimate Std. Error t value Pr(>|t|)

#> (Intercept) -17.5791 6.7584 -2.601 0.0123 *

#> speed 3.9324 0.4155 9.464 1.49e-12 ***

#> ---

#> Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

#>

#> Residual standard error: 15.38 on 48 degrees of freedom

#> Multiple R-squared: 0.6511, Adjusted R-squared: 0.6438

#> F-statistic: 89.57 on 1 and 48 DF, p-value: 1.49e-12Using p Value To Check For Statistical Significance

The summary statistics above tells us a number of things.

One of them is the model’s p-Value (in last line) and the p-Value of individual predictor variables (extreme right column under �Coefficients�).

The p-Values are very important.

Because, we can consider a linear model to be statistically significant only when both these p-Values are less than the pre-determined statistical significance level of 0.05.

This can visually interpreted by the significance stars at the end of the row against each X variable.

The more the stars beside the variable�s p-Value, the more significant the variable.

What is the Null and Alternate Hypothesis?

Whenever there is a p-value, there is always a Null and Alternate Hypothesis associated.

So what is the null hypothesis in this case?

In Linear Regression, the Null Hypothesis (H0) is that the beta coefficients associated with the variables is equal to zero.

The alternate hypothesis (H1) is that the coefficients are not equal to zero. (i.e. there exists a relationship between the independent variable in question and the dependent variable).

What is t-value?

We can interpret the t-value something like this. A larger t-value indicates that it is less likely that the coefficient is not equal to zero purely by chance. So, higher the t-value, the better.

Pr(>|t|) or p-value is the probability that you get a t-value as high or higher than the observed value when the Null Hypothesis (the ? coefficient is equal to zero or that there is no relationship) is true.

So if the Pr(>|t|) is low, the coefficients are significant (significantly different from zero). If the Pr(>|t|) is high, the coefficients are not significant.

What this means to us?

When p Value is less than significance level (< 0.05), you can safely reject the null hypothesis that the co-efficient ? of the predictor is zero.

In our case, linearMod, both these p-Values are well below the 0.05 threshold.

So, you can reject the null hypothesis and conclude the model is indeed statistically significant.

It is very important for the model to be statistically significant before you can go ahead and use it to predict the dependent variable. Otherwise, the confidence in predicted values from that model reduces and may be construed as an event of chance.

How to calculate the t Statistic and p-Values?

When the model co-efficients and standard error are known, the formula for calculating t Statistic and p-Value is as follows:

t?Statistic=??coefficientStd.Error

In case you want to compute some of the statistics by manual code, the below snippet shows how.

# capture model summary as an object

modelSummary <- summary(linearMod)

# model coefficients

modelCoeffs <- modelSummary$coefficients

# get beta estimate for speed

beta.estimate <- modelCoeffs["speed", "Estimate"]

# get std.error for speed

std.error <- modelCoeffs["speed", "Std. Error"]

# calc t statistic

t_value <- beta.estimate/std.error

# calc p Value

p_value <- 2*pt(-abs(t_value), df=nrow(cars)-ncol(cars))

# fstatistic

f_statistic <- linearMod$fstatistic[1]

# parameters for model p-value calc

f <- summary(linearMod)$fstatistic

model_p <- pf(f[1], f[2], f[3], lower=FALSE)

#> t Value: 9.46399

#> p Value: 1.489836e-12

#> Model F Statistic: 89.56711 1 48

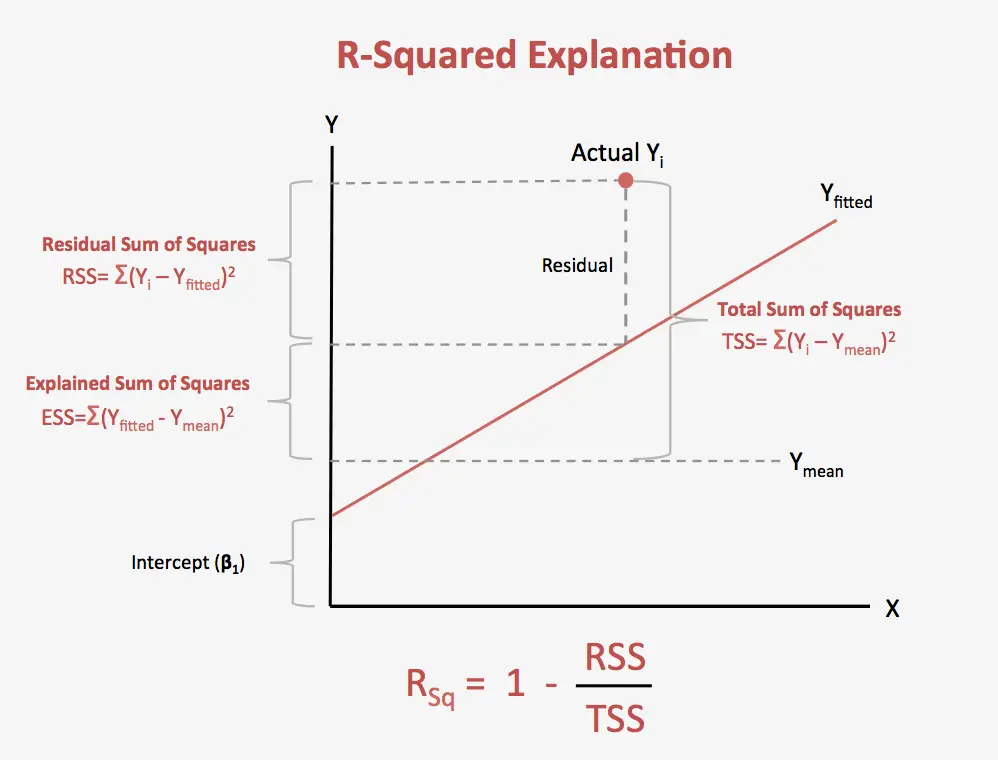

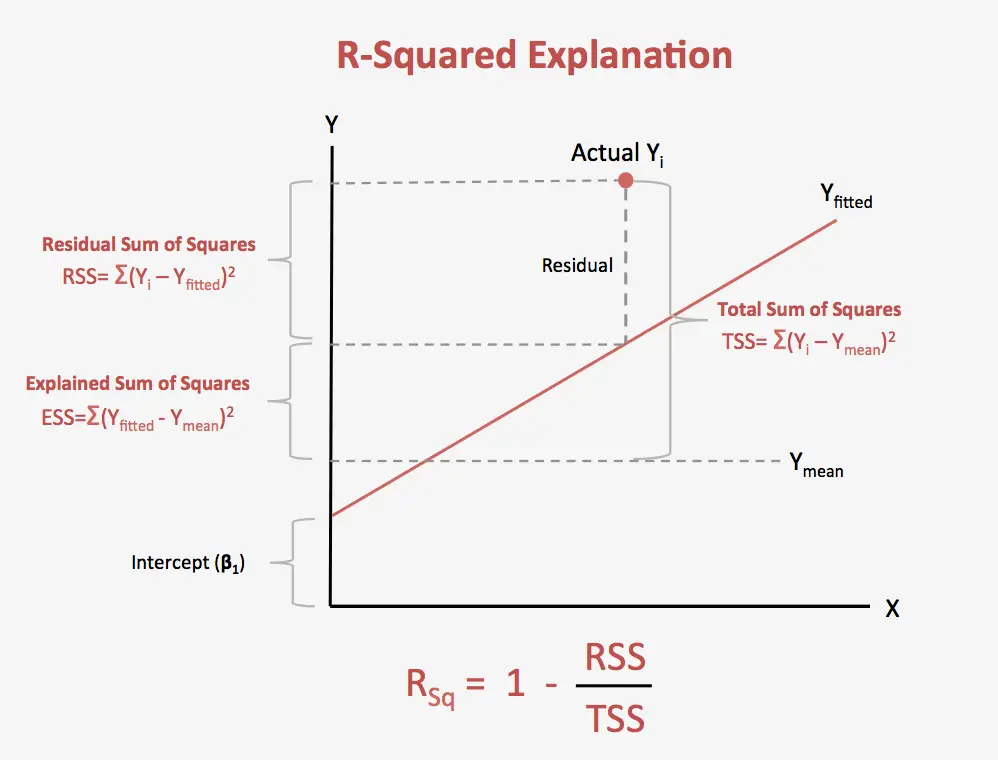

#> Model p-Value: 1.489836e-12R-Squared and Adj R-Squared

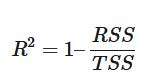

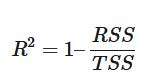

The actual information in a data is the total variation it contains, remember?.

What R-Squared tells us is the proportion of variation in the dependent (response) variable that has been explained by this model.

Remember, the total information in a variable is the amount of variation it contains.

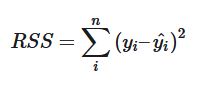

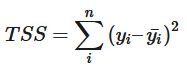

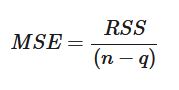

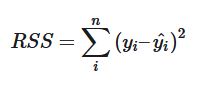

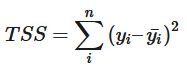

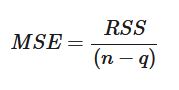

where, RSS is the Residual Sum of Squares given by

and the Sum of Squared Total is given by

Here, y-hat is the fitted value for observation i and y-bar is the mean of Y.

We don�t necessarily discard a model based on a low R-Squared value.

To compare the efficacy of two different regression models, it’s a good practice to use the validation sample to compare the AIC of the two models.

Besides AIC, other evaluation metrics like mean absolute percentage error (MAPE), Mean Squared Error (MSE) and Mean Absolute Error (MAE) can also be used.

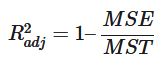

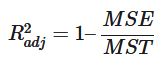

Thats about R-Squared. Now what about adjusted R-Squared?

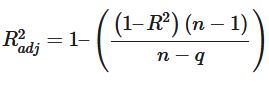

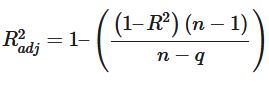

What is adjusted R-Squared?

As you add more X variables to your model, the R-Squared value of the new bigger model will always be greater than that of the smaller subset.

Can you imagine why?

This is because, since all the variables in the original model is also present, their contribution to explain the dependent variable will be present in the super-set as well.

Therefore, whatever new variable you add can only add (if not significantly) to the variation that was already explained.

It is here, the adjusted R-Squared value comes to help.

Adjusted R-Squared is formulated such that it penalises the number of terms (read predictors) in your model.

So unlike R-sq, as the number of predictors in the model increases, the adj-R-sq may not always increase.

Therefore when comparing nested models, it is a good practice to compare using adj-R-squared rather than just R-squared.

where, MSE is the mean squared error given by

where, n is the number of observations and q is the number of coefficients in the model.

Therefore, by moving around the numerators and denominators, the relationship between R2 and Radj2 becomes:

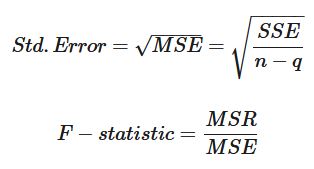

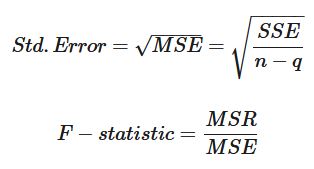

Standard Error and F-Statistic

Both standard errors and F-statistic are measures of goodness of fit.

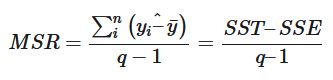

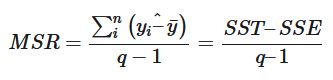

where, n is the number of observations, q is the number of coefficients and MSR is the mean square regression, calculated as,

The higher the F-Statistic the better it is.

What is AIC and BIC?

The Akaike�s information criterion – AIC (Akaike, 1974) and the Bayesian information criterion – BIC (Schwarz, 1978) are measures of the goodness of fit of the linear regression model and can also be used for model selection.

Both criteria depend on the maximised value of the likelihood function L for the estimated model.

The AIC is defined as:

AIC?=?(?2)?�?ln(L)?+?(2�k)

where, k is the number of model parameters and the BIC is defined as:

BIC?=?(?2)?�?ln(L)?+?k?�?ln(n)

where, n is the sample size.

For model comparison, the model with the lowest AIC and BIC score is preferred.

AIC(linearMod)

#=> 419.1569

BIC(linearMod)

#=> BIC => 424.89297. How to know which regression model is best fit for the data?

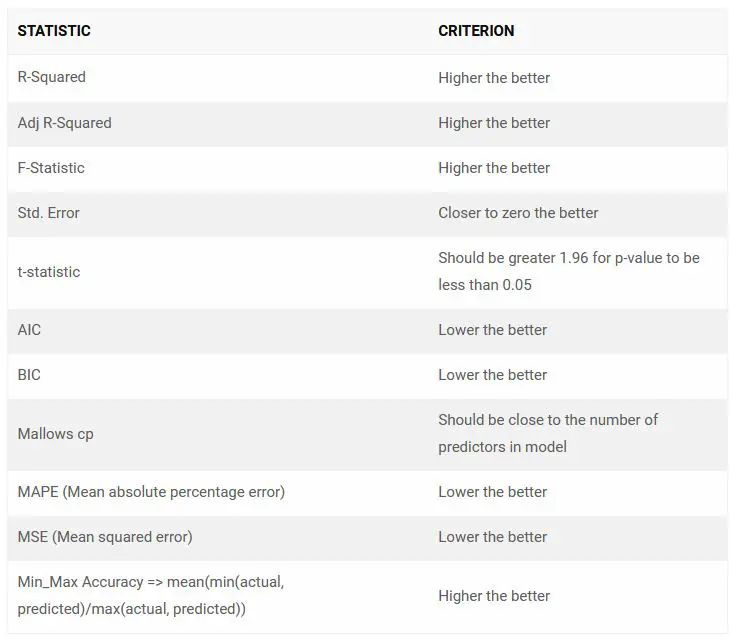

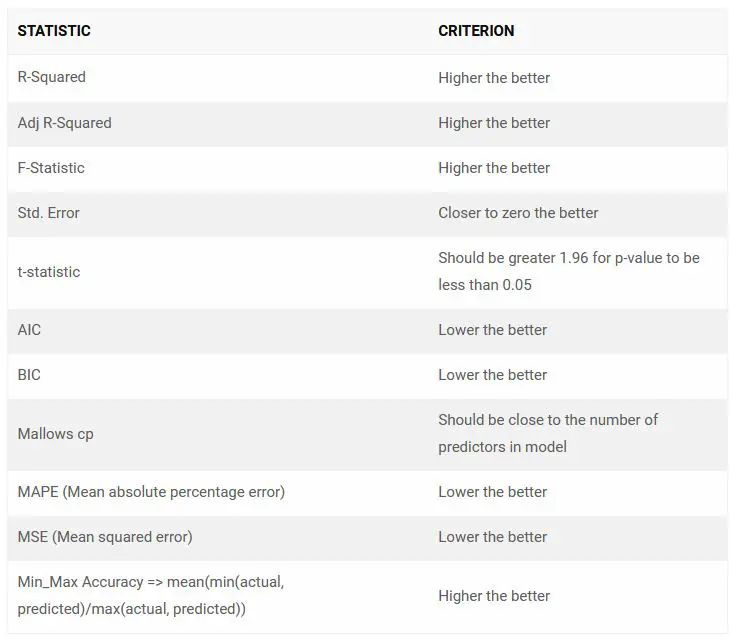

The most common metrics to look at while selecting the model are:

8. Predicting Linear Models

So far you have seen how to build a linear regression model using the whole dataset. If you build it that way, there is no way to tell how the model will perform with new data.

So the preferred practice is to split your dataset into a 80:20 sample (training:test), then, build the model on the 80% sample and then use the model thus built to predict the dependent variable on test data.

Doing it this way, we will have the model predicted values for the 20% data (test) as well as the actuals (from the original dataset).

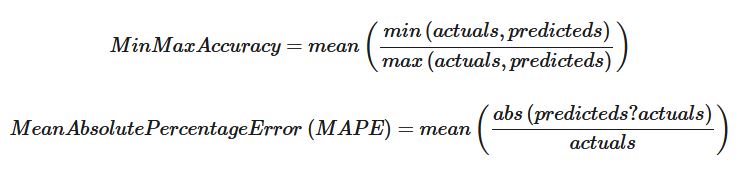

By calculating accuracy measures (like min_max accuracy) and error rates (MAPE or MSE), you can find out the prediction accuracy of the model.

Now, lets see how to actually do this.

Step 1: Create the training and test data

This can be done using the sample() function. Just make sure you set the seed using set.seed() so the samples can be recreated for future use.

# Create Training and Test data -

set.seed(100) # setting seed to reproduce results of random sampling

trainingRowIndex <- sample(1:nrow(cars), 0.8*nrow(cars)) # row indices for training data

trainingData <- cars[trainingRowIndex, ] # model training data

testData <- cars[-trainingRowIndex, ] # test dataStep 2: Fit the model on training data and predict dist on test data

# Build the model on training data

lmMod <- lm(dist ~ speed, data=trainingData) # build the model

distPred <- predict(lmMod, testData) # predict distanceStep 3: Review diagnostic measures.

summary (lmMod) # model summary

#>

#> Call:

#> lm(formula = dist ~ speed, data = trainingData)

#>

#> Residuals:

#> Min 1Q Median 3Q Max

#> -23.350 -10.771 -2.137 9.255 42.231

#>

#> Coefficients:

#> Estimate Std. Error t value Pr(>|t|)

#> (Intercept) -22.657 7.999 -2.833 0.00735 **

#> speed 4.316 0.487 8.863 8.73e-11 ***

#> ---

#> Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

#>

#> Residual standard error: 15.84 on 38 degrees of freedom

#> Multiple R-squared: 0.674, Adjusted R-squared: 0.6654

#> F-statistic: 78.56 on 1 and 38 DF, p-value: 8.734e-11

AIC (lmMod) # Calculate akaike information criterion

#> [1] 338.4489From the model summary, the model p value and predictors p value are less than the significance level.

So you have a statistically significant model.

Also, the R-Sq and Adj R-Sq are comparative to the original model built on full data.

Step 4: Calculate prediction accuracy and error rates

A simple correlation between the actuals and predicted values can be used as a form of accuracy measure.

A higher correlation accuracy implies that the actuals and predicted values have similar directional movement, i.e. when the actuals values increase the predicted values also increase and vice-versa.

actuals_preds <- data.frame(cbind(actuals=testData$dist, predicteds=distPred))# make actuals_predicteds dataframe.correlation_accuracy <- cor(actuals_preds)# 82.7%head(actuals_preds) #> actuals predicteds #> 1 2 -5.392776 #> 4 22 7.555787 #> 8 26 20.504349 #> 20 26 37.769100 #> 26 54 42.085287 #> 31 50 50.717663

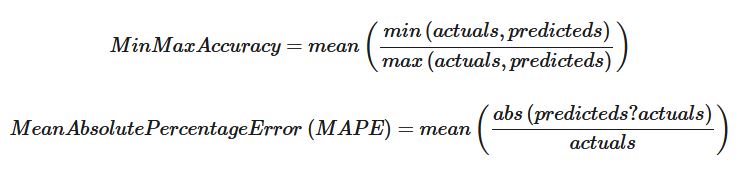

Now lets calculate the Min Max accuracy and MAPE:

# Min-Max Accuracy Calculation

min_max_accuracy <- mean(apply(actuals_preds, 1, min) / apply(actuals_preds, 1, max))

# => 38.00%, min_max accuracy

# MAPE Calculation

mape <- mean(abs((actuals_preds$predicteds - actuals_preds$actuals))/actuals_preds$actuals)

# => 69.95%, mean absolute percentage deviationAlternately, you can compute all the error metrics in one go using the regr.eval() function in DMwR package. You will have to install.packages('DMwR') for this if you are using it for the first time.

DMwR::regr.eval(actuals_preds$actuals, actuals_preds$predicteds)

#=> mae mse rmse mape

#=> 12.0082829 205.9652710 14.3514902 0.69950329. What is k- Fold Cross validation and its Purpose?

Suppose, the model predicts satisfactorily on the 20% split (test data), is that enough to believe that your model will perform equally well all the time?

What do I mean by this?

It is possible that few of the data points are not representative of the population on which the model was built.

For example, in cars dataset, let’s suppose concrete road was used for the road tests on the 80% training data while muddy road was used for the remaining 20% test data.

If the model was trained in such a setup, you cannot expect the model to predict the dist in test dataset with equal accuracy. Simply because, it has not learned the relationship between speed and dist in such a setting.

So, it is important to rigorously test the model�s performance as much as possible.

One way to do this rigorous testing, is to check if the model equation performs equally well, when trained and tested on different distinct chunks of data.

This is exactly what k-Fold cross validation does. Here is how it works:

- Split your data into �k� mutually exclusive random sample portions.

- Then iteratively build k models, keeping one of k-subsets as test data each time.

In each iteration, We build the model on the remaining (k-1 portion) data and calculate the mean squared error of the predictions on the k’th subset.

- Finally, the average of these mean squared errors (for �k� portions) is computed.

You can use this metric to compare different linear models.

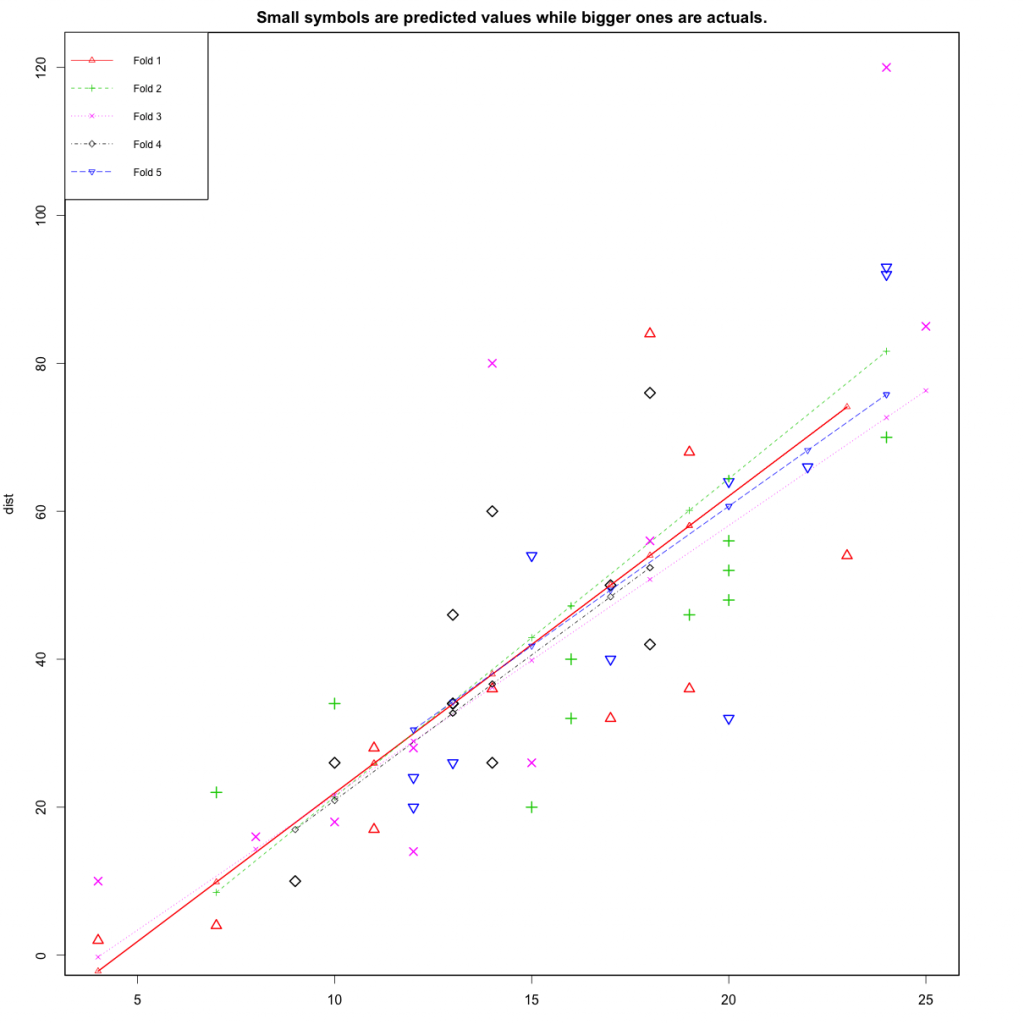

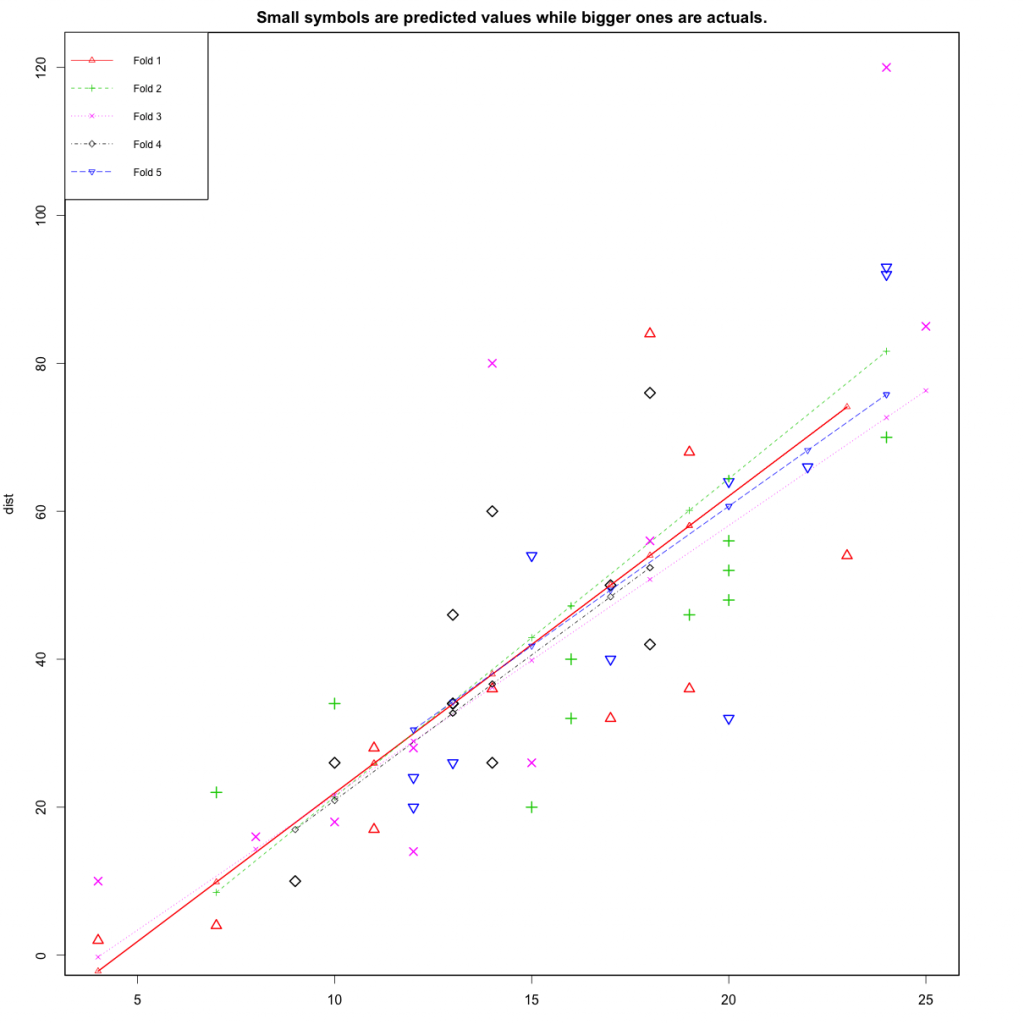

By doing this, you need to check two things from the k-fold predictions:

- If the each of the k-fold model�s prediction accuracy isn�t varying too much for any one particular sample, and

- If the lines of best fit from the k-folds don�t vary too much with respect the the slope and level.

In other words, they should be parallel and as close to each other as possible. This is what the k-fold cross validation plot (below) reveals.

library(DAAG)

cvResults <- suppressWarnings(CVlm(df=cars, form.lm=dist ~ speed, m=5, dots=FALSE, seed=29, legend.pos="topleft", printit=FALSE, main="Small symbols are predicted values while bigger ones are actuals.")); # performs the CV

attr(cvResults, 'ms')

# => 251.2783 mean squared errorIn the below plot, Are the dashed lines parallel? Are the small and big symbols are not over dispersed for one particular color?

10. Where to go from here?

We have covered the basic concepts about linear regression. Besides these, you need to understand that linear regression is based on certain underlying assumptions that must be taken care especially when working with multiple Xs. Once you are familiar with that, the advanced regression models will show you around the various special cases where a different form of regression would be more suitable.

Linear regression is used to predict the value of a continuous variable Y based on one or more input predictor variables X. The aim is to establish a mathematical formula between the the response variable (Y) and the predictor variables (Xs). You can use this formula to predict Y, when only X values are known.

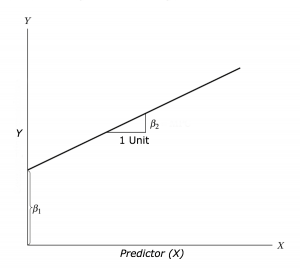

1. Introduction to Linear Regression

Linear regression is one of the most commonly used predictive modelling techniques. The aim of linear regression is to find a mathematical equation for a continuous response variable Y as a function of one or more X variable(s). So that you can use this regression model to predict the Y when only the X is known.

This mathematical equation can be generalised as follows:

Y=β1+β2X+ϵ

where, β1 is the intercept and β2 is the slope.

Collectively, they are called regression coefficients and ? is the error term, the part of Y the regression model is unable to explain.

2. Example Problem

For this analysis, we will use the cars dataset that comes with R by default.

cars is a standard built-in dataset, that makes it convenient to show linear regression in a simple and easy to understand fashion.

You can access this dataset by typing in cars in your R console.

You will find that it consists of 50 observations(rows) and 2 variables (columns) dist and speed. Lets print out the first six observations here.

head(cars) # display the first 6 observations

#> speed dist

#> 1 4 2

#> 2 4 10

#> 3 7 4

#> 4 7 22

#> 5 8 16

#> 6 9 10The goal here is to establish a mathematical equation for dist as a function of speed, so you can use it to predict dist when only the speed of the car is known.

So it is desirable to build a linear regression model with the response variable as dist and the predictor as speed.

Before we begin building the regression model, it is a good practice to analyse and understand the variables.

The graphical analysis and correlation study below will help with this.

3. Graphical Analysis

The aim of this exercise is to build a simple regression model that you can use to predict Distance (dist).

This is possible by establishing a mathematical formula between Distance (dist) and Speed (speed).

But before jumping in to the syntax, lets try to understand these variables graphically.

Typically, for each of the predictors, the following plots help visualise the patterns:

- Scatter plot: Visualise the linear relationship between the predictor and response

- Box plot: To spot any outlier observations in the variable. Having outliers in your predictor can drastically affect the predictions as they can affect the direction/slope of the line of best fit.

- Density plot: To see the distribution of the predictor variable. Ideally, a close to normal distribution (a bell shaped curve), without being skewed to the left or right is preferred.

Let us see how to make each one of them.

Using Scatter Plot To Visualise The Relationship

Scatter plots can help visualise linear relationships between the response and predictor variables.

Ideally, if you have many predictor variables, a scatter plot is drawn for each one of them against the response, along with the line of best fit as seen below.

scatter.smooth(x=cars$speed, y=cars$dist, main="Dist ~ Speed") # scatterplot

The scatter plot along with the smoothing line above suggests a linear and positive relationship between the dist and speed.

This is a good thing.

Because, one of the underlying assumptions of linear regression is, the relationship between the response and predictor variables is linear and additive.

Using BoxPlot To Check For Outliers

Generally, an outlier is any datapoint that lies outside the 1.5 * inter quartile range (IQR).

IQR is calculated as the distance between the 25th percentile and 75th percentile values for that variable.

par(mfrow=c(1, 2)) # divide graph area in 2 columns

boxplot(cars$speed, main="Speed", sub=paste("Outlier rows: ", boxplot.stats(cars$speed)$out)) # box plot for 'speed'

boxplot(cars$dist, main="Distance", sub=paste("Outlier rows: ", boxplot.stats(cars$dist)$out)) # box plot for 'distance'

Using Density Plot To Check If Response Variable Is Close To Normal

library(e1071) # for skewness function

par(mfrow=c(1, 2)) # divide graph area in 2 columns

plot(density(cars$speed), main="Density Plot: Speed", ylab="Frequency", sub=paste("Skewness:", round(e1071::skewness(cars$speed), 2))) # density plot for 'speed'

polygon(density(cars$speed), col="red")

plot(density(cars$dist), main="Density Plot: Distance", ylab="Frequency", sub=paste("Skewness:", round(e1071::skewness(cars$dist), 2))) # density plot for 'dist'

polygon(density(cars$dist), col="red")

4. What is Correlation Analysis?

Correlation analysis studies the strength of relationship between two continuous variables. It involves computing the correlation coefficient between the the two variables.

So what is correlation? And how is it helpful in linear regression?

Correlation is a statistical measure that shows the degree of linear dependence between two variables.

In order to compute correlation, the two variables must occur in pairs, just like what we have here with speed and dist.

Correlation can take values between -1 to +1.

If one variables consistently increases with increasing value of the other, then they have a strong positive correlation (value close to +1).

Similarly, if one consistently decreases when the other increase, they have a strong negative correlation (value close to -1).

A value closer to 0 suggests a weak relationship between the variables.

A low correlation (-0.2 < x < 0.2) probably suggests that much of variation of the response variable (Y) is unexplained by the predictor (X). In that case, you should probably look for better explanatory variables.

If you observe the cars dataset in the R console, for every instance where speed increases, the distance also increases along with it.

That means, there is a strong positive relationship between them. So, the correlation between them will be closer to 1.

However, correlation doesn’t imply causation.

In other words, if two variables have high correlation, it does not mean one variable ’causes’ the value of the other variable to increase.

Correlation is only an aid to understand the relationship. You can only rely on logic and business reasoning to make that judgement.

So, how to compute correlation in R?

Simply use the cor() function with the two numeric variables as arguments.

cor(cars$speed, cars$dist) # calculate correlation between speed and distance

#> [1] 0.80689495. Building the Linear Regression Model

Now that you have seen the linear relationship pictorially in the scatter plot and through correlation, let’s try building the linear regression model.

The function used for building linear models is lm().

The lm() function takes in two main arguments:

- Formula

- Data

The data is typically a data.frame object and the formula is a object of class formula.

But the most common convention is to write out the formula directly as written below.

linearMod <- lm(dist ~ speed, data=cars) # build linear regression model on full data

print(linearMod)

#> Call:

#> lm(formula = dist ~ speed, data = cars)

#>

#> Coefficients:

#> (Intercept) speed

#> -17.579 3.932By building the linear regression model, we have established the relationship between the predictor and response in the form of a mathematical formula.

That is Distance (dist) as a function for speed.

For the above output, you can notice the �Coefficients� part having two components: Intercept: -17.579, speed: 3.932.

These are also called the beta coefficients. In other words,

dist?=?Intercept?+?(????speed)

=> dist = ?17.579 + 3.932?speed

6. Linear Regression Diagnostics

Now the linear model is built and you have a formula that you can use to predict the dist value if a corresponding speed is known.

Is this enough to actually use this model? NO!

Because, before using a regression model to make predictions, you need to ensure that it is statistically significant. But How do you ensure this?

Lets begin by printing the summary statistics for linearMod.

summary(linearMod) # model summary

#> Call:

#> lm(formula = dist ~ speed, data = cars)

#>

#> Residuals:

#> Min 1Q Median 3Q Max

#> -29.069 -9.525 -2.272 9.215 43.201

#>

#> Coefficients:

#> Estimate Std. Error t value Pr(>|t|)

#> (Intercept) -17.5791 6.7584 -2.601 0.0123 *

#> speed 3.9324 0.4155 9.464 1.49e-12 ***

#> ---

#> Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

#>

#> Residual standard error: 15.38 on 48 degrees of freedom

#> Multiple R-squared: 0.6511, Adjusted R-squared: 0.6438

#> F-statistic: 89.57 on 1 and 48 DF, p-value: 1.49e-12Using p Value To Check For Statistical Significance

The summary statistics above tells us a number of things.

One of them is the model’s p-Value (in last line) and the p-Value of individual predictor variables (extreme right column under �Coefficients�).

The p-Values are very important.

Because, we can consider a linear model to be statistically significant only when both these p-Values are less than the pre-determined statistical significance level of 0.05.

This can visually interpreted by the significance stars at the end of the row against each X variable.

The more the stars beside the variable�s p-Value, the more significant the variable.

What is the Null and Alternate Hypothesis?

Whenever there is a p-value, there is always a Null and Alternate Hypothesis associated.

So what is the null hypothesis in this case?

In Linear Regression, the Null Hypothesis (H0) is that the beta coefficients associated with the variables is equal to zero.

The alternate hypothesis (H1) is that the coefficients are not equal to zero. (i.e. there exists a relationship between the independent variable in question and the dependent variable).

What is t-value?

We can interpret the t-value something like this. A larger t-value indicates that it is less likely that the coefficient is not equal to zero purely by chance. So, higher the t-value, the better.

Pr(>|t|) or p-value is the probability that you get a t-value as high or higher than the observed value when the Null Hypothesis (the ? coefficient is equal to zero or that there is no relationship) is true.

So if the Pr(>|t|) is low, the coefficients are significant (significantly different from zero). If the Pr(>|t|) is high, the coefficients are not significant.

What this means to us?

When p Value is less than significance level (< 0.05), you can safely reject the null hypothesis that the co-efficient ? of the predictor is zero.

In our case, linearMod, both these p-Values are well below the 0.05 threshold.

So, you can reject the null hypothesis and conclude the model is indeed statistically significant.

It is very important for the model to be statistically significant before you can go ahead and use it to predict the dependent variable. Otherwise, the confidence in predicted values from that model reduces and may be construed as an event of chance.

How to calculate the t Statistic and p-Values?

When the model co-efficients and standard error are known, the formula for calculating t Statistic and p-Value is as follows:

t?Statistic=??coefficientStd.Error

In case you want to compute some of the statistics by manual code, the below snippet shows how.

# capture model summary as an object

modelSummary <- summary(linearMod)

# model coefficients

modelCoeffs <- modelSummary$coefficients

# get beta estimate for speed

beta.estimate <- modelCoeffs["speed", "Estimate"]

# get std.error for speed

std.error <- modelCoeffs["speed", "Std. Error"]

# calc t statistic

t_value <- beta.estimate/std.error

# calc p Value

p_value <- 2*pt(-abs(t_value), df=nrow(cars)-ncol(cars))

# fstatistic

f_statistic <- linearMod$fstatistic[1]

# parameters for model p-value calc

f <- summary(linearMod)$fstatistic

model_p <- pf(f[1], f[2], f[3], lower=FALSE)

#> t Value: 9.46399

#> p Value: 1.489836e-12

#> Model F Statistic: 89.56711 1 48

#> Model p-Value: 1.489836e-12R-Squared and Adj R-Squared

The actual information in a data is the total variation it contains, remember?.

What R-Squared tells us is the proportion of variation in the dependent (response) variable that has been explained by this model.

Remember, the total information in a variable is the amount of variation it contains.

where, RSS is the Residual Sum of Squares given by

and the Sum of Squared Total is given by

Here, y-hat is the fitted value for observation i and y-bar is the mean of Y.

We don�t necessarily discard a model based on a low R-Squared value.

To compare the efficacy of two different regression models, it’s a good practice to use the validation sample to compare the AIC of the two models.

Besides AIC, other evaluation metrics like mean absolute percentage error (MAPE), Mean Squared Error (MSE) and Mean Absolute Error (MAE) can also be used.

Thats about R-Squared. Now what about adjusted R-Squared?

What is adjusted R-Squared?

As you add more X variables to your model, the R-Squared value of the new bigger model will always be greater than that of the smaller subset.

Can you imagine why?

This is because, since all the variables in the original model is also present, their contribution to explain the dependent variable will be present in the super-set as well.

Therefore, whatever new variable you add can only add (if not significantly) to the variation that was already explained.

It is here, the adjusted R-Squared value comes to help.

Adjusted R-Squared is formulated such that it penalises the number of terms (read predictors) in your model.

So unlike R-sq, as the number of predictors in the model increases, the adj-R-sq may not always increase.

Therefore when comparing nested models, it is a good practice to compare using adj-R-squared rather than just R-squared.

where, MSE is the mean squared error given by

where, n is the number of observations and q is the number of coefficients in the model.

Therefore, by moving around the numerators and denominators, the relationship between R2 and Radj2 becomes:

Standard Error and F-Statistic

Both standard errors and F-statistic are measures of goodness of fit.

where, n is the number of observations, q is the number of coefficients and MSR is the mean square regression, calculated as,

The higher the F-Statistic the better it is.

What is AIC and BIC?

The Akaike�s information criterion – AIC (Akaike, 1974) and the Bayesian information criterion – BIC (Schwarz, 1978) are measures of the goodness of fit of the linear regression model and can also be used for model selection.

Both criteria depend on the maximised value of the likelihood function L for the estimated model.

The AIC is defined as:

AIC?=?(?2)?�?ln(L)?+?(2�k)

where, k is the number of model parameters and the BIC is defined as:

BIC?=?(?2)?�?ln(L)?+?k?�?ln(n)

where, n is the sample size.

For model comparison, the model with the lowest AIC and BIC score is preferred.

AIC(linearMod)

#=> 419.1569

BIC(linearMod)

#=> BIC => 424.89297. How to know which regression model is best fit for the data?

The most common metrics to look at while selecting the model are:

8. Predicting Linear Models

So far you have seen how to build a linear regression model using the whole dataset. If you build it that way, there is no way to tell how the model will perform with new data.

So the preferred practice is to split your dataset into a 80:20 sample (training:test), then, build the model on the 80% sample and then use the model thus built to predict the dependent variable on test data.

Doing it this way, we will have the model predicted values for the 20% data (test) as well as the actuals (from the original dataset).

By calculating accuracy measures (like min_max accuracy) and error rates (MAPE or MSE), you can find out the prediction accuracy of the model.

Now, lets see how to actually do this.

Step 1: Create the training and test data

This can be done using the sample() function. Just make sure you set the seed using set.seed() so the samples can be recreated for future use.

# Create Training and Test data -

set.seed(100) # setting seed to reproduce results of random sampling

trainingRowIndex <- sample(1:nrow(cars), 0.8*nrow(cars)) # row indices for training data

trainingData <- cars[trainingRowIndex, ] # model training data

testData <- cars[-trainingRowIndex, ] # test dataStep 2: Fit the model on training data and predict dist on test data

# Build the model on training data

lmMod <- lm(dist ~ speed, data=trainingData) # build the model

distPred <- predict(lmMod, testData) # predict distanceStep 3: Review diagnostic measures.

summary (lmMod) # model summary

#>

#> Call:

#> lm(formula = dist ~ speed, data = trainingData)

#>

#> Residuals:

#> Min 1Q Median 3Q Max

#> -23.350 -10.771 -2.137 9.255 42.231

#>

#> Coefficients:

#> Estimate Std. Error t value Pr(>|t|)

#> (Intercept) -22.657 7.999 -2.833 0.00735 **

#> speed 4.316 0.487 8.863 8.73e-11 ***

#> ---

#> Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

#>

#> Residual standard error: 15.84 on 38 degrees of freedom

#> Multiple R-squared: 0.674, Adjusted R-squared: 0.6654

#> F-statistic: 78.56 on 1 and 38 DF, p-value: 8.734e-11

AIC (lmMod) # Calculate akaike information criterion

#> [1] 338.4489From the model summary, the model p value and predictors p value are less than the significance level.

So you have a statistically significant model.

Also, the R-Sq and Adj R-Sq are comparative to the original model built on full data.

Step 4: Calculate prediction accuracy and error rates

A simple correlation between the actuals and predicted values can be used as a form of accuracy measure.

A higher correlation accuracy implies that the actuals and predicted values have similar directional movement, i.e. when the actuals values increase the predicted values also increase and vice-versa.

actuals_preds <- data.frame(cbind(actuals=testData$dist, predicteds=distPred))# make actuals_predicteds dataframe.correlation_accuracy <- cor(actuals_preds)# 82.7%head(actuals_preds) #> actuals predicteds #> 1 2 -5.392776 #> 4 22 7.555787 #> 8 26 20.504349 #> 20 26 37.769100 #> 26 54 42.085287 #> 31 50 50.717663

Now lets calculate the Min Max accuracy and MAPE:

# Min-Max Accuracy Calculation

min_max_accuracy <- mean(apply(actuals_preds, 1, min) / apply(actuals_preds, 1, max))

# => 38.00%, min_max accuracy

# MAPE Calculation

mape <- mean(abs((actuals_preds$predicteds - actuals_preds$actuals))/actuals_preds$actuals)

# => 69.95%, mean absolute percentage deviationAlternately, you can compute all the error metrics in one go using the regr.eval() function in DMwR package. You will have to install.packages('DMwR') for this if you are using it for the first time.

DMwR::regr.eval(actuals_preds$actuals, actuals_preds$predicteds)

#=> mae mse rmse mape

#=> 12.0082829 205.9652710 14.3514902 0.69950329. What is k- Fold Cross validation and its Purpose?

Suppose, the model predicts satisfactorily on the 20% split (test data), is that enough to believe that your model will perform equally well all the time?

What do I mean by this?

It is possible that few of the data points are not representative of the population on which the model was built.

For example, in cars dataset, let’s suppose concrete road was used for the road tests on the 80% training data while muddy road was used for the remaining 20% test data.

If the model was trained in such a setup, you cannot expect the model to predict the dist in test dataset with equal accuracy. Simply because, it has not learned the relationship between speed and dist in such a setting.

So, it is important to rigorously test the model�s performance as much as possible.

One way to do this rigorous testing, is to check if the model equation performs equally well, when trained and tested on different distinct chunks of data.

This is exactly what k-Fold cross validation does. Here is how it works:

- Split your data into �k� mutually exclusive random sample portions.

- Then iteratively build k models, keeping one of k-subsets as test data each time.

In each iteration, We build the model on the remaining (k-1 portion) data and calculate the mean squared error of the predictions on the k’th subset.

- Finally, the average of these mean squared errors (for �k� portions) is computed.

You can use this metric to compare different linear models.

By doing this, you need to check two things from the k-fold predictions:

- If the each of the k-fold model�s prediction accuracy isn�t varying too much for any one particular sample, and

- If the lines of best fit from the k-folds don�t vary too much with respect the the slope and level.

In other words, they should be parallel and as close to each other as possible. This is what the k-fold cross validation plot (below) reveals.

library(DAAG)

cvResults <- suppressWarnings(CVlm(df=cars, form.lm=dist ~ speed, m=5, dots=FALSE, seed=29, legend.pos="topleft", printit=FALSE, main="Small symbols are predicted values while bigger ones are actuals.")); # performs the CV

attr(cvResults, 'ms')

# => 251.2783 mean squared errorIn the below plot, Are the dashed lines parallel? Are the small and big symbols are not over dispersed for one particular color?

10. Where to go from here?

We have covered the basic concepts about linear regression. Besides these, you need to understand that linear regression is based on certain underlying assumptions that must be taken care especially when working with multiple Xs. Once you are familiar with that, the advanced regression models will show you around the various special cases where a different form of regression would be more suitable.