Conversational AI systems have evolved rapidly over the past decade. Almost every company now faces the need to deploy a chatbot. Rasa provides a smooth and competitive way to build your own. This article guides you through developing your Bot step by step while explaining the concepts behind it.

Introduction

I’m sure each of us has interacted with a bot, sometimes without even realizing it! We are in the era of Conversational AI now. Many websites use a chatbot to interact with users and help them out, which can save a great deal of time and resources. At the same time, bots that keep replying “Sorry, I did not get you” just irritate us. You need to ensure that the performance is satisfactory too.

Rasa open source provides an advanced and smooth way to build your own chat bot that can provide satisfactory interaction. In this article, I shall guide you on how to build a Chat bot using Rasa with a real example.

Understanding the objective of our ChatBot

The first step is to be clear about what you want. You need an idea of the functions you expect your Bot to perform. This includes anticipating the possible user inputs and how the Bot should answer them. Here, we aim to build a Bot for a cafe. What should my Bot do?

Here are my expectations. The Bot should greet the user and find out what they want. It should provide a menu, take orders and handle enquiries. We may often receive complaints too, which have to be handled graciously. You should also think about the flow of conversation you desire. Rasa provides a smooth way to implement each part of this. In the next section, let’s learn more about how Rasa Open Source works.

How does Rasa work?

Rasa is an open-source tool that lets you create a whole range of Bots for different purposes. The best feature of Rasa is that it provides different frameworks to handle different tasks. This makes the process elegant and efficient.

Let’s divide the tasks of a chatbot into two main groups:

- Understand the user’s question and what answer they are expecting.

- Maintain the conversation flow, i.e. reply based on the question/query and converse further.

Rasa provides two amazing frameworks to handle these tasks separately, Rasa NLU and Rasa Core. In simple terms, Rasa NLU and Rasa Core are the two pillars of our ChatBot. For our case, I will be using both NLU and Core, though it is not compulsory. Let’s first understand and develop the NLU part and then proceed to the Core part.

What does Rasa NLU do?

NLU stands for Natural Language Understanding. As the name hints, it is responsible for understanding the user’s input messages. It should recognize what the user wants and also extract information such as names and places from the messages. Let’s look at some common terms:

- Intent : The purpose of the user’s message. For example, consider the input message “Can I order food now?”. The intent of this message is to place an order, and NLU has to identify this correctly. This is termed intent classification.

- Entity : Input messages may contain information such as a name or a place. These are details that need to be extracted. For example, consider “I want to book a table on the name of Sinduja”. You need to extract the name “Sinduja”, which is an entity.

Overall, Rasa NLU performs Intent Classification and Entity Extraction.
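To make the two tasks concrete, here is a toy sketch of what an NLU step returns for a message. It is not how Rasa works internally: Rasa learns intents from training data, whereas this sketch uses a hand-written keyword table. The names `INTENT_KEYWORDS` and `toy_parse`, and the `book_table` intent, are made up for illustration.

```python
import re

# Hypothetical keyword table; Rasa NLU learns this mapping from examples instead.
INTENT_KEYWORDS = {
    "order": ["order", "deliver"],
    "book_table": ["book"],
    "greet": ["hi", "hello", "hey"],
}


def toy_parse(message):
    """Return a dict with one intent and a list of extracted entities."""
    words = re.findall(r"[a-z']+", message.lower())

    # Intent classification: the first intent with a matching keyword wins.
    intent = None
    for name, keywords in INTENT_KEYWORDS.items():
        if any(word in keywords for word in words):
            intent = name
            break

    # Entity extraction: capture the word that follows "name of".
    entities = []
    match = re.search(r"name of (\w+)", message)
    if match:
        entities.append({"entity": "name", "value": match.group(1)})

    return {"text": message, "intent": intent, "entities": entities}


print(toy_parse("Can I order food now ?"))
print(toy_parse("I want to book a table on the name of Sinduja"))
```

The shape of the result (the text, an intent and a list of entities) is the part worth remembering; the matching logic is only a stand-in for a trained model.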

Now that we have an idea of what NLU does, let’s see how to code it.

The first step is to install and import the required packages, as shown below.

import sys

python = sys.executable

# In your environment run:

!{python} -m pip install -U rasa_core==0.9.6 rasa_nlu[spacy];

!{python} -m spacy download en_core_web_md

import rasa_nlu

import rasa_core

import spacy

The imports are done. Next, to perform NLU, you’ll have to train a model.

How to write the NLU training data?

As discussed in previous sections, NLU’s first task is intent classification. So, you need to tell your chatbot which intents to expect. We’ll define various intents and provide examples for each. This will be our training data.

In our case of the cafe Bot, the possible intents of the user will be to order food, choose an item from the menu, enquire about the order, register complaints, etc. The format is shown below:

## intent:name_of_the_intent

- example1

- example2

I have chosen 7 intents. You can add more as per your requirements! After writing the training data in the above format, save it to a file named nlu.md so that Rasa can load it. The code below demonstrates this.

# Writing various intents with examples to nlu.md file

nlu_md = """

## intent:greet

- hey

- hello there

- hi

- hello there

- good morning

- good evening

- moin

- hey there

- let's go

- hey dude

- goodmorning

- goodevening

- good afternoon

## intent:goodbye

- good by

- cee you later

- good night

- good afternoon

- bye

- goodbye

- have a nice day

- see you around

- bye bye

- see you later

## intent:order

- Are you open

- Can I order

- I want to order

- Will you deliver ?

- I want to place order

- Is food available now ?

- Can I order now ?

## intent:choosing_item

- 1

- 2

- 3

- 4

- 5

- 6

- 7

## intent:order_enquiry

- How long will it take ?

- How much time ?

- When can I expect the order

- Will it take long ?

- I am waiting for the delivery

- Who will deliver

- I am hungry

## intent:complain

- food was bad

- poor quality

- It was bad

- very bad taste

- poor taste

- there was a fly in it

- It was not hygienic

- Not clean

- poor packaging

- I won't order again

- bad

- terrible

- wrong food delivered

- I didn't order this

## intent:suggest

- Make it more spicy

- You can improve actually

- You can improve the taste

- you can expand the menu I guess

- Add more dishes to the menu

- speed delivery would be better

- try to reduce time

- Add continental dishes too!

- Hope you'll take less time hereafter

"""

%store nlu_md > nlu.md

Writing 'nlu_md' (str) to file 'nlu.md'.

The training data should now be stored in the nlu.md file in the same directory. Make sure of this before proceeding to the next part.
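Before training, it can help to sanity-check the file. The sketch below parses the `## intent:` format with plain Python, counts the examples per intent and flags any example that is listed under more than one intent. The helper names `parse_nlu_md` and `find_overlaps` are my own, not part of Rasa, and the sample is a shortened stand-in for the real file.

```python
def parse_nlu_md(text):
    """Parse '## intent:<name>' headers and '- example' lines into a dict."""
    intents = {}
    current = None
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if line.startswith("## intent:"):
            current = line[len("## intent:"):].strip()
            intents[current] = []
        elif line.startswith("-") and current is not None:
            intents[current].append(line[1:].strip())
    return intents


def find_overlaps(intents):
    """Return the examples that appear under more than one intent."""
    seen = {}
    for name, examples in intents.items():
        for example in set(examples):
            seen.setdefault(example, []).append(name)
    return {ex: names for ex, names in seen.items() if len(names) > 1}


# Shortened sample in the same format as nlu.md
sample = """
## intent:greet
- hi
- good afternoon

## intent:goodbye
- bye
- good afternoon
"""

parsed = parse_nlu_md(sample)
print({name: len(examples) for name, examples in parsed.items()})
print(find_overlaps(parsed))
```

In the training data above, “good afternoon” is listed under both greet and goodbye, which is exactly the kind of overlap this check would report. An example shared by two intents gives the classifier contradictory signals, so it is worth deciding which intent it belongs to.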

How to define NLU Configuration

When a user sends a message, it’s passed through Rasa’s NLU pipeline. The pipeline consists of a sequence of components that perform various tasks. The first component is usually the tokenizer, responsible for breaking the message into tokens. I have chosen tokenizer_spacy for that purpose here, as we are using a pretrained spaCy model.

Next, we use the ner_crf component, which extracts entities if any are present. After that, you need a vector representation of the input message; for this I have used intent_featurizer_spacy. The intent_classifier_sklearn component then classifies the intent from that vector. All these are components of the NLU pipeline, which need to be added to the configuration file.

The code below shows the format.

# Adding the NLU components to the pipeline in config.yml file

config = """

language: "en_core_web_md"

pipeline:

- name: "nlp_spacy" # loads the spacy language model

- name: "tokenizer_spacy" # splits the sentence into tokens

- name: "ner_crf" # extracts entities with a conditional random field trained on your data

- name: "intent_featurizer_spacy" # transform the sentence into a vector representation

- name: "intent_classifier_sklearn" # uses the vector representation to classify using SVM

- name: "ner_synonyms" # trains the synonyms

"""

%store config > config.yml

Writing 'config' (str) to file 'config.yml'.

At the end, the code has been stored to the config.yml using the %store command. Now the configuration is ready too!
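If the idea of a pipeline feels abstract, the following sketch may help. It is a conceptual stand-in, not Rasa code: each hypothetical component is a plain function that receives the message, adds its own result, and hands the message to the next component, in the order listed.

```python
def tokenize(message):
    """Stand-in for a tokenizer: split the text into lowercase tokens."""
    message["tokens"] = message["text"].lower().split()
    return message


def featurize(message):
    """Stand-in for a featurizer: turn the tokens into word counts."""
    counts = {}
    for token in message["tokens"]:
        counts[token] = counts.get(token, 0) + 1
    message["features"] = counts
    return message


def classify(message):
    """Stand-in for an intent classifier: a fixed rule instead of a trained model."""
    features = message["features"]
    is_greeting = "hi" in features or "hello" in features
    message["intent"] = "greet" if is_greeting else "unknown"
    return message


# Order matters: each component relies on what the previous ones added.
PIPELINE = [tokenize, featurize, classify]


def run_pipeline(text):
    message = {"text": text}
    for component in PIPELINE:
        message = component(message)
    return message


print(run_pipeline("Hello there"))
```

This is why the order of entries in config.yml matters: the featurizer needs tokens, and the classifier needs features.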

Training the NLU Model

Once the nlu.md and config.yml files are ready, it’s time to train the NLU model. You can import the load_data() function from the rasa_nlu.training_data module. Passing the nlu.md file to this function loads the training_data. Similarly, import the config module from rasa_nlu and use it to read the configuration settings into the trainer. After this, the trainer is trained on the loaded training_data to create an interpreter.

# Import modules for training

from rasa_nlu.training_data import load_data

from rasa_nlu.config import RasaNLUModelConfig

from rasa_nlu.model import Trainer

from rasa_nlu import config

# loading the nlu training samples

training_data = load_data("nlu.md")

trainer = Trainer(config.load("config.yml"))

# training the nlu

interpreter = trainer.train(training_data)

model_directory = trainer.persist("./models/nlu", fixed_model_name="current")

INFO:rasa_nlu.training_data.loading:Training data format of nlu.md is md

INFO:rasa_nlu.training_data.training_data:Training data stats:

- intent examples: 67 (7 distinct intents)

- Found intents: 'order_enquiry', 'goodbye', 'greet', 'complain', 'choosing_item', 'order', 'suggest'

- entity examples: 0 (0 distinct entities)

- found entities:

.

INFO:rasa_nlu.model:Starting to train component SpacyNLP

INFO:rasa_nlu.model:Finished training component.

INFO:rasa_nlu.model:Starting to train component SpacyTokenizer

INFO:rasa_nlu.model:Finished training component.

INFO:rasa_nlu.model:Starting to train component CRFEntityExtractor

INFO:rasa_nlu.model:Finished training component.

INFO:rasa_nlu.model:Starting to train component SpacyFeaturizer

INFO:rasa_nlu.model:Finished training component.

INFO:rasa_nlu.model:Starting to train component SklearnIntentClassifier

[Parallel(n_jobs=1)]: Done 12 out of 12 | elapsed: 0.1s finished

INFO:rasa_nlu.model:Finished training component.

INFO:rasa_nlu.model:Starting to train component EntitySynonymMapper

INFO:rasa_nlu.model:Finished training component.

INFO:rasa_nlu.model:Successfully saved model into '/content/models/nlu/default/current'

Fitting 2 folds for each of 6 candidates, totalling 12 fits

How to test the NLU

The training of the NLU model interpreter is complete now. Let’s check how the model finds the intent of any message of the user.

# Testing the NLU model with an input message

import json

def pprint(o):

    print(json.dumps(o, indent=2))

pprint(interpreter.parse("hi"))

{

"intent": {

"name": "greet",

"confidence": 0.7448749668923197

},

"entities": [],

"intent_ranking": [

{

"name": "greet",

"confidence": 0.7448749668923197

},

{

"name": "goodbye",

"confidence": 0.18327848890200757

},

{

"name": "suggest",

"confidence": 0.0384674336166317

},

{

"name": "order",

"confidence": 0.017664921215443368

},

{

"name": "order_enquiry",

"confidence": 0.009610814213655755

},

{

"name": "complain",

"confidence": 0.006103375159941932

}

],

"text": "hi"

}

Observe the above output. You can see the model reporting a confidence for each intent, which indicates how sure the model is that the particular intent is the right one. For example, for the input “hi”, the model predicts the intent “greet” with a confidence of 0.7448749668923197. This means the model is about 74.5% sure that the user’s intent is to greet.
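One practical use of the confidence value is to avoid acting on a weak guess. The sketch below works on a dictionary shaped like the parse output above; the function name `pick_intent`, the threshold of 0.5 and the `"fallback"` label are my own choices for illustration, not Rasa settings.

```python
def pick_intent(parse_result, threshold=0.5, fallback="fallback"):
    """Return the predicted intent name, or `fallback` if the model is unsure."""
    intent = parse_result["intent"]
    if intent["confidence"] >= threshold:
        return intent["name"]
    return fallback


# Same shape as the interpreter output shown above
result = {
    "intent": {"name": "greet", "confidence": 0.7448749668923197},
    "entities": [],
    "text": "hi",
}

print(pick_intent(result))                 # confident enough at the default threshold
print(pick_intent(result, threshold=0.8))  # a stricter threshold rejects the guess
```

A check like this is one way to send a polite clarification question instead of answering the wrong intent.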

We can see that the NLU has worked up to our expectations. Next, let’s move on to the Rasa Core part in the upcoming section.

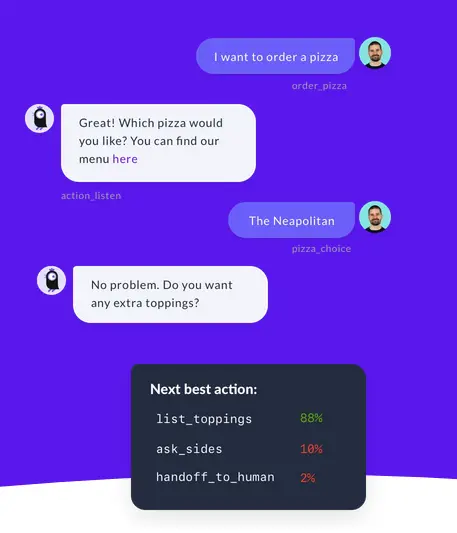

What does Rasa Core do?

The NLU has made sure that our Bot understands what the user wants. Next, the Bot should respond appropriately to the message. This is handled by Rasa Core, which maintains the conversation flow between the user and the Bot.

For example, if the user’s intent has been identified as order, then the Bot should send the menu to the user. It should wait for the user to choose and then confirm the order. In this way, the Bot should perform certain actions in sequence. Before plunging into details, let me introduce you to some important terms:

- actions : The responses the Bot is trained to perform are called actions. For every intent recognized by the Bot, there should be some defined action. It may be outputting a text or a link, or even running a whole function. For example, for the intent order, the action will be utter_menu.

- stories : A story is a path that defines a sequence of intents and actions. Stories provide sample interactions between the user and the Bot in terms of the intent and the action taken by the Bot. For example, if the user greets, the Bot should greet back and ask how it can help. This is a story.

- templates : Here, we define the text for each action. You’ll see the format and an example in the domain section.

- slots : They are the Bot’s memory. They store values that enable the Bot to keep track of the conversation.

Now that you have an understanding of Rasa Core, let’s get to the code in the next section.

How to write Stories?

In the previous section, we discussed what stories are. They should contain sample interactions between the user and the Bot. In our case, the possible story paths include greeting, taking the order, handling enquiries and registering complaints. The format of each story should be:

## Name of the story

* intent_name_1

- action_name_1

* intent_name_2

- action_name_2

After writing the stories, store them in the stories.md file using the %store command. The code below shows the stories for our cafe Bot.

# Writing stories and saving it in the stories.md file

stories_md = """

## Greeting path

* greet

- utter_greet

## Order path

* order

- utter_menu

* choosing_item

- utter_order_received

## Enquires path

* order_enquiry

- utter_enquiry

## complain path

* complain

- utter_receive_complaint

## suggestion path

* suggest

- utter_take_suggestion

## say goodbye

* goodbye

- utter_goodbye

"""

%store stories_md > stories.md

Writing 'stories_md' (str) to file 'stories.md'.
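To see the structure a story encodes, here is a small sketch that reads the story format into plain Python data: each story becomes a list of (intent, actions) steps. The function `parse_stories` is a hypothetical helper written for this article, not the loader Rasa Core uses.

```python
def parse_stories(text):
    """Parse story markdown into {story_name: [(intent, [actions]), ...]}."""
    stories = {}
    current = None
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if line.startswith("## "):
            # A new story starts with its name
            current = line[3:].strip()
            stories[current] = []
        elif line.startswith("*") and current is not None:
            # A user intent opens a new step
            stories[current].append((line[1:].strip(), []))
        elif line.startswith("-") and current is not None and stories[current]:
            # A bot action belongs to the most recent intent
            stories[current][-1][1].append(line[1:].strip())
    return stories


sample = """
## Order path
* order
- utter_menu
* choosing_item
- utter_order_received
"""

for name, steps in parse_stories(sample).items():
    print(name, steps)
```

Reading a story as “intent, then the actions that answer it” is a useful mental model when you later add longer paths with several turns.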

How to define the Domain

The domain is a file that lists all the intents, entities, actions, slots and templates. It is the concluding piece that links together everything written so far. Let’s see how to write the domain file for our cafe Bot in the code below. After writing, the content is stored in the domain.yml file.

# Writing all the intents,slots,entities,actions and templates to domain.yml

domain_yml = """

intents:

- greet

- goodbye

- order

- choosing_item

- order_enquiry

- complain

- suggest

slots:

  group:

    type: text

entities:

- group

actions:

- utter_greet

- utter_menu

- utter_order_received

- utter_enquiry

- utter_receive_complaint

- utter_take_suggestion

- utter_goodbye

templates:

  utter_greet:

  - text: "Hey! Welcome to Biker's cafe . How may I help you ?"

  utter_menu:

  - text: "Yes, we are open 24 X 7. Here's our menu. 1. Chicken popcorn 2. White sauce pasta 3. Macroni 4. Lasagna 5. Pizza 6. Taco 7. Garlic breadsticks 8. coke (500 ml). Enter 1 if you want popcorn, 5 for Pizza and so on"

  utter_order_received:

  - text: "Great choice ! Your order has been confirmed . Wait while our top chefs prepare your delicious food !"

  utter_goodbye:

  - text: " Thankyou for choosing us. Waiting for your next visit "

  utter_enquiry:

  - text: " Your food is being prepared. It usually takes 25-30 minutes "

  utter_receive_complaint:

  - text: " Sorry that you faced trouble. We have noted your issues and it shall not be repeated again"

  utter_take_suggestion:

  - text: " Sure , we shall take into account . Thanks for suggestion "

"""

%store domain_yml > domain.yml

Writing 'domain_yml' (str) to file 'domain.yml'.

The above file will be used in the next section for final training of the Bot.
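Since the domain has to agree with the stories, a quick consistency check can catch typos before training. The sketch below uses plain Python dictionaries and sets rather than reading the YAML file, so it runs without extra packages. `check_domain` is a hypothetical helper, and the tiny domain here is deliberately broken to show what gets reported.

```python
def check_domain(domain, story_intents, story_actions):
    """Return a list of problems; an empty list means everything lines up."""
    problems = []
    for intent in sorted(set(story_intents) - set(domain["intents"])):
        problems.append("intent '%s' is used in stories but missing from the domain" % intent)
    for action in sorted(set(story_actions) - set(domain["actions"])):
        problems.append("action '%s' is used in stories but missing from the domain" % action)
    for action in domain["actions"]:
        # Every utter_* action needs a template with its text
        if action.startswith("utter_") and action not in domain["templates"]:
            problems.append("action '%s' has no template text" % action)
    return problems


# A deliberately incomplete domain: no 'complain' intent, no text for utter_menu
domain = {
    "intents": ["greet", "order"],
    "actions": ["utter_greet", "utter_menu"],
    "templates": {"utter_greet": ["Hey!"]},
}

problems = check_domain(
    domain,
    story_intents={"greet", "order", "complain"},
    story_actions={"utter_greet", "utter_menu"},
)
for problem in problems:
    print(problem)
```

For the cafe Bot, the same idea applies: every intent and action named in stories.md should appear in domain.yml, and every utter_ action should have a template.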

Training the Dialogue Model

The time has come to train the dialogue model. For this, you can import various policies as per your requirements. For the purpose of predicting the next action, you can use MemoizationPolicy. It predicts the action with a confidence of either 1 or 0, depending on whether the conversation so far matches one it memorized from the training stories. Another policy for this purpose is KerasPolicy, which is based on an LSTM model. There are various other policies serving different purposes; to know more, see the policies section of the Rasa Core documentation.
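The “1 or 0” behaviour is easier to see in a toy version. The class below is a simplified illustration of the memoization idea, not Rasa’s implementation: it stores the last few events before each bot action as a lookup key and only answers when it has seen exactly that history. The class name `ToyMemoizationPolicy` and the `max_history` value are assumptions made for this sketch.

```python
class ToyMemoizationPolicy:
    """Memorize (recent history -> next action) pairs from training stories."""

    def __init__(self, max_history=2):
        self.max_history = max_history
        self.lookup = {}

    def train(self, stories):
        """`stories` is a list of event lists, e.g. ['order', 'utter_menu']."""
        for events in stories:
            for i in range(1, len(events)):
                if events[i].startswith("utter_"):
                    # Key: up to `max_history` events that came before this action
                    key = tuple(events[max(0, i - self.max_history):i])
                    self.lookup[key] = events[i]

    def predict(self, history):
        """Return (action, 1.0) for a memorized history, else (None, 0.0)."""
        key = tuple(history[-self.max_history:])
        if key in self.lookup:
            return self.lookup[key], 1.0
        return None, 0.0


policy = ToyMemoizationPolicy()
policy.train([
    ["greet", "utter_greet"],
    ["order", "utter_menu", "choosing_item", "utter_order_received"],
])

print(policy.predict(["order"]))
print(policy.predict(["order", "utter_menu", "choosing_item"]))
print(policy.predict(["complain"]))  # never seen in training
```

Because a pure lookup cannot generalize to unseen conversations, it is usually paired with a learned policy, which is the role KerasPolicy plays in this article.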

After importing the necessary policies, you need to import the Agent for loading the data and training. The domain.yml file has to be passed to Agent() along with the chosen policies. This returns the agent, which is then trained with the data available in stories.md. The code below shows the training process.

# Import the policies and agent

from rasa_core.policies import FallbackPolicy, MemoizationPolicy,KerasPolicy

from rasa_core.agent import Agent

# Initialize the model with `domain.yml`

agent = Agent('domain.yml', policies=[MemoizationPolicy(), KerasPolicy()])

# loading our training dialogues from `stories.md`

training_data = agent.load_data('stories.md')

# Training the model

agent.train(

training_data,

validation_split=0.0,

epochs=200

)

agent.persist('models/dialogue')

Processed Story Blocks: 100%|██████████| 6/6 [00:00<00:00, 771.82it/s, # trackers=1]

Processed Story Blocks: 100%|██████████| 6/6 [00:00<00:00, 446.46it/s, # trackers=6]

Processed Story Blocks: 100%|██████████| 6/6 [00:00<00:00, 225.80it/s, # trackers=20]

Processed Story Blocks: 100%|██████████| 6/6 [00:00<00:00, 269.85it/s, # trackers=20]

INFO:rasa_core.featurizers:Creating states and action examples from collected trackers (by MaxHistoryTrackerFeaturizer)...

Processed trackers: 100%|██████████| 258/258 [00:02<00:00, 128.95it/s, # actions=351]

INFO:rasa_core.featurizers:Created 351 action examples.

Processed actions: 351it [00:00, 1183.42it/s, # examples=351]

INFO:rasa_core.policies.memoization:Memorized 351 unique action examples.

INFO:rasa_core.agent:Model directory models/dialogue exists and contains old model files. All files will be overwritten.

INFO:rasa_core.agent:Persisted model to '/content/models/dialogue'

Talking to the Bot

Finally, here we are! The Bot is ready to be tested. Load the trained model from the directory as shown below.

#Starting the Bot

from rasa_core.agent import Agent

agent = Agent.load('models/dialogue', interpreter=model_directory)

INFO:rasa_nlu.components:Added 'SpacyNLP' to component cache. Key 'SpacyNLP-en_core_web_md'.

We write a loop that takes input messages from the user until it encounters “stop”. Each message is passed to the trained agent using the handle_message() function. It returns a list of response dictionaries, from which the text of each response is printed, as shown below.

print("Your bot is ready to talk! Type your messages here or send 'stop'")

while True:

    a = input()

    if a == 'stop':

        break

    responses = agent.handle_message(a)

    for response in responses:

        print(response["text"])

#> Your bot is ready to talk! Type your messages here or send 'stop'

#> hey

#> Hey! Welcome to Biker's cafe . How may I help you ?

#> place order

#> Yes, we are open 24 X 7. Here's our menu. 1. Chicken popcorn 2.White sauce pasta 3.Macroni 4.Lasagna 5.Pizza 6.Taco 7.Garlic breadsticks 8. coke (500 ml). Enter 1 if you want popcorn, 5 for Pizza and so on

#> 3

#> Great choice ! Your order has been confirmed. Wait while our top chefs prepare your delicious food !

#> i am hungry

#> Your food is being prepared. It usually takes 25-30 minutes

#> bad

#> Sorry that you faced trouble. We have noted your issues and it shall not be repeated again

#> add more dishes

#> Sure , we shall take into account . Thanks for suggestion

#> bye

#> Thankyou for choosing us. Waiting for your next visit

#> stop

Yipeeee!

We have successfully built a Bot for our cafe to handle orders and queries. I hope you understood how Rasa works and the steps to be followed. If you find the performance unsatisfactory, try providing more training examples. You can also try alternative components and policies. Stay tuned for more such posts!