spaCy is an advanced, modern library for Natural Language Processing developed by Matthew Honnibal and Ines Montani. This tutorial is a complete guide to using spaCy for various tasks.

Overview

1. Introduction

The Doc object

2. Tokenization with spaCy

3. Text-Preprocessing with spaCy

4. Lemmatization

5. Strings to Hashes

6. Lexical attributes of spaCy

7. Detecting Email Addresses

8. Part of Speech analysis with spaCy

9. How POS tagging helps you in dealing with text based problems.

10. Named Entity Recognition

11. NER Application 1: Extracting brand names with Named Entity Recognition

12. NER Application 2: Automatically Masking Entities

13. Rule based Matching

Token Matcher

Phrase Matcher

Entity Ruler

14. Word Vectors and similarity

15. Merging and Splitting Tokens with retokenize

16. spaCy pipelines

17. Methods for Efficient processing

18. Creating custom pipeline components


1. Introduction

spaCy is an advanced, modern library for Natural Language Processing developed by Matthew Honnibal and Ines Montani. It is open source and designed for industrial-strength, production use.

```python
# !pip install -U spacy

import spacy
```

spaCy comes with pretrained NLP models that can perform the most common NLP tasks, such as tokenization, part-of-speech (POS) tagging, named entity recognition (NER), lemmatization and mapping words to word vectors.

To work with a particular language, load a spaCy model trained for that language with the spacy.load() function.

```python
# Load the small English model: https://spacy.io/models
# (download it first with: python -m spacy download en_core_web_sm)
nlp = spacy.load("en_core_web_sm")

nlp
#> <spacy.lang.en.English at 0x7fd40c2eec50>
```

This returns a Language object that comes ready with multiple built-in capabilities.

It’s a pretty long list. Time to grab a cup of coffee!
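To see which components do the work, you can inspect `nlp.pipe_names`. The sketch below uses `spacy.blank("en")`, which builds an English pipeline with a tokenizer but no trained components, so it runs without downloading a model. The commented lines assume `en_core_web_sm` is installed.

```python
import spacy

# A blank English pipeline: a tokenizer only, no trained components
blank_nlp = spacy.blank("en")
print(type(blank_nlp).__name__)  # English
print(blank_nlp.pipe_names)      # []

# Assumes the trained small model is installed; its pipe_names lists the
# components that run after the tokenizer (tagger, parser, ner and so on):
# nlp = spacy.load("en_core_web_sm")
# print(nlp.pipe_names)
```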

The Doc object

Now, let us say you have your text data in a string. What can be done to understand the structure of the text?

First, call the loaded nlp object on the text. It should return a processed Doc object.

```python
# Parse text through the `nlp` model
my_text = """The economic situation of the country is on edge , as the stock
market crashed causing loss of millions. Citizens who had their main investment
in the share-market are facing a great loss. Many companies might lay off
thousands of people to reduce labor cost"""

my_doc = nlp(my_text)
type(my_doc)

#> spacy.tokens.doc.Doc
```

The output is a Doc object.

But what exactly is a Doc object?

It is a sequence of tokens that contains not just the original text but all the results produced by the spaCy model after processing the text. Useful information such as the lemma of each token, whether it is a stop word, the named entities and the word vectors is pre-computed and stored in the Doc object.

The good thing is that you have complete control over what information is pre-computed and how it is customized. We will see all of that shortly.

Also, although the text gets split into tokens, no information from the original text is lost: each token records the whitespace that followed it, so the original string can be reconstructed exactly.
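You can check the lossless claim yourself. This minimal sketch uses a blank English pipeline (only the tokenizer is needed) and rebuilds the input from each token's text plus its trailing whitespace (`token.text_with_ws`).

```python
import spacy

nlp = spacy.blank("en")  # the tokenizer alone is enough to show this

text = "The stock market crashed, causing a loss of millions."
doc = nlp(text)

# Each token remembers the whitespace that followed it (token.whitespace_),
# so concatenating text_with_ws rebuilds the input exactly.
rebuilt = "".join(token.text_with_ws for token in doc)
print(rebuilt == text)   # True
print(doc.text == text)  # True
print([(t.text, t.whitespace_) for t in doc[:4]])
#> [('The', ' '), ('stock', ' '), ('market', ' '), ('crashed', '')]
```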

What is a Token?

Tokens are the individual units that make up a text. Typically a token is a word or a punctuation mark; runs of extra whitespace, such as line breaks, also become tokens.

2. Tokenization with spaCy

What is Tokenization?

Tokenization is the process of splitting a text into smaller units based on predefined rules. For example, sentences are split into words (and, optionally, punctuation), and paragraphs into sentences, depending on the context.
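spaCy's tokenizer does more than split on spaces: it applies language-specific prefix, suffix and exception rules. A small sketch, assuming a blank English pipeline (`spacy.blank("en")`), which contains only the rule-based tokenizer. The contraction is split, while the abbreviation "N.Y." is kept whole by an exception rule.

```python
import spacy

nlp = spacy.blank("en")  # rule-based tokenizer only, no trained model needed
doc = nlp("Let's go to N.Y.!")
tokens = [token.text for token in doc]
print(tokens)
#> ['Let', "'s", 'go', 'to', 'N.Y.', '!']
```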

This is typically the first step for NLP tasks like text classification, sentiment analysis, etc.

Each token in spaCy has attributes that carry a great deal of information, such as whether the token is punctuation, what its part of speech (POS) is and what its lemma is. This article covers them from A to Z.

Let’s print the tokens of my_doc. The string a token represents is available through its token.text attribute.

```python
# Printing the tokens of a doc
for token in my_doc:
    print(token.text)

#> The
#> economic
#> situation
#> of
#> the
#> country
#> is
#> on
#> edge
#> ...
```

The tokens above include punctuation and very common words like “a”, “the”, “was”, etc. Such words usually add little to the meaning of your text. They are called stop words.

Let’s clean it up.

3. Text-Preprocessing with spaCy

As mentioned in the last section, there is ‘noise’ in the tokens. Words such as ‘the’, ‘was’ and ‘it’ are very common and are referred to as ‘stop words’.

Besides, there is punctuation like commas, brackets and full stops, and some extra whitespace too. The process of removing this noise from the doc is called text cleaning or preprocessing.

Why is text preprocessing needed?

Whatever NLP task you perform, be it classification, sentiment analysis or topic modeling, the quality of the output depends heavily on the quality of the input text.

Stop words and punctuation usually (though not always) add little to the meaning of the text and can skew the outcome. So it often makes sense to remove them and to clean the text of unwanted characters, which also reduces the size of the corpus.
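The "not always" matters in practice: spaCy's English stop-word list includes negations such as "not", so blind removal can flip the meaning of a review. A minimal sketch, assuming a blank English pipeline (the stop-word flags come from the language defaults, no model needed). It keeps negation by setting `is_stop` to False on the vocabulary entry (lexeme) for "not"; `content_words` is a hypothetical helper name.

```python
import spacy

nlp = spacy.blank("en")

def content_words(text):
    # Hypothetical helper: keep tokens that are neither stop words nor punctuation
    return [t.text for t in nlp(text) if not (t.is_stop or t.is_punct)]

before = content_words("The movie was not good.")
print(before)  # ['movie', 'good']

# Treat "not" as a regular word in this vocab so negation survives cleaning
nlp.vocab["not"].is_stop = False
after = content_words("The movie was not good.")
print(after)   # ['movie', 'not', 'good']
```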

How do you identify and remove stop words and punctuation?

spaCy tokens have attributes that tell you this. The token.is_stop attribute tells you whether a token is a stop word. Likewise, token.is_punct and token.is_space tell you whether a token is punctuation or whitespace, respectively.

```python
# Printing tokens and boolean values stored in different attributes
for token in my_doc:
    print(token.text, '--', token.is_stop, '---', token.is_punct)

#> The -- True --- False
#> economic -- False --- False
#> situation -- False --- False
#> of -- True --- False
#> the -- True --- False
#> country -- False --- False
#> is -- True --- False
#> on -- True --- False
#> edge -- False --- False
#> , -- False --- True
#> as -- True --- False
#> the -- True --- False
#> ...
```

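Using this information, let’s remove the stop words and punctuation. The filter below also drops whitespace tokens (such as the line breaks in my_text), which are neither stop words nor punctuation.

```python
# Removing stop words, punctuation and whitespace tokens
my_doc_cleaned = [token for token in my_doc
                  if not token.is_stop and not token.is_punct and not token.is_space]

for token in my_doc_cleaned:
    print(token.text)

#> economic
#> situation
#> country
#> edge
#> stock
#> market
#> crashed
#> causing
#> loss
#> millions
#> ...
```

The cleaned list now contains only tokens that contribute to the meaning in some way. Note that it is a plain Python list of Token objects, not a new Doc.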
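A common next step after cleaning is counting word frequencies. This sketch assumes a blank English pipeline (enough for the stop-word, punctuation and whitespace flags) and lowercases each token with `token.lower_` so that "The" and "the" count together. Note that "market", "markets", "crash" and "crashed" are still counted separately; lemmatization, covered in the next section, addresses that.

```python
from collections import Counter
import spacy

nlp = spacy.blank("en")
doc = nlp("The market crashed. Markets fell and the market may crash again!")

# Lowercased content words only
words = [t.lower_ for t in doc if not (t.is_stop or t.is_punct or t.is_space)]
word_counts = Counter(words)
print(words)
#> ['market', 'crashed', 'markets', 'fell', 'market', 'crash']
print(word_counts["market"])  # 2
```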

Removing these tokens also cuts the computational cost, since there are fewer tokens to process. To see the effect of preprocessing on a larger text, you can execute the code below.

# Reading a huge text data on robotics into a spacy doc

robotics_data= """Robotics is an interdisciplinary research area at the interface of computer science and engineering. Robotics involvesdesign, construction, operation, and use of robots. The goal of robotics is to design intelligent machines that can help and assist humans in their day-to-day lives and keep everyone safe. Robotics draws on the achievement of information engineering, computer engineering, mechanical engineering, electronic engineering and others.Robotics develops machines that can substitute for humans and replicate human actions. Robots can be used in many situations and for lots of purposes, but today many are used in dangerous environments(including inspection of radioactive materials, bomb detection and deactivation), manufacturing processes, or where humans cannot survive (e.g. in space, underwater, in high heat, and clean up and containment of hazardousmaterials and radiation). Robots can take on any form but some are made to resemble humans in appearance. This is said to help in the acceptance of a robot in

certain replicative behaviors usually performed by people. Such robots attempt to replicate walking, lifting, speech, cognition, or any other human activity. Many of todays robots are inspired by nature, contributing to the field of bio-inspired

robotics.The concept of creating machines that can operate autonomously dates back to classical times, but research into the functionality and potential uses of robots did not grow substantially until the 20th century. Throughout history, it has been frequently assumed by various scholars, inventors, engineers, and technicians that robots will one day be able to mimic human behavior and manage tasks in a human-like fashion. Today, robotics is a rapidly growing field, as technological advances continue; researching, designing, and building new robots serve various practical purposes, whether domestically, commercially, or militarily. Many robots are built to do jobs that are hazardous to people, such as defusing bombs, finding survivors in unstable ruins, and exploring mines and shipwrecks. Robotics is also used in STEM (science, technology, engineering, and mathematics) as a teaching aid. The advent of nanorobots, microscopic robots that can be injected into the human body, could revolutionize medicine and human health.Robotics is a branch of engineering that involves the conception, design, manufacture, and operation of robots. This field overlaps with computer engineering, computer science (especially artificial intelligence), electronics, mechatronics, nanotechnology and bioengineering.The word robotics was derived from the word robot, which was introduced to the public by Czech writer Karel Capek in his play R.U.R. (Rossums Universal Robots), whichwas published in 1920. The word robot comes from the Slavic word robota, which means slave/servant. The play begins in a factory that makes artificial people called robots, creatures who can be mistaken for humans – very similar to the modern ideas of androids. Karel Capek himself did not coin the word. He wrote a short letter in reference to an etymology in the

Oxford English Dictionary in which he named his brother Josef Capek as its actual

originator.According to the Oxford English Dictionary, the word robotics was first

used in print by Isaac Asimov, in his science fiction short story "Liar!",

published in May 1941 in Astounding Science Fiction. Asimov was unaware that he

was coining the term since the science and technology of electrical devices is

electronics, he assumed robotics already referred to the science and technology

of robots. In some of Asimovs other works, he states that the first use of the

word robotics was in his short story Runaround (Astounding Science Fiction, March

1942) where he introduced his concept of The Three Laws of Robotics. However,

the original publication of "Liar!" predates that of "Runaround" by ten months,

so the former is generally cited as the words origin.There are many types of robots;

they are used in many different environments and for many different uses. Although

being very diverse in application and form, they all share three basic similarities

when it comes to their construction:Robots all have some kind of mechanical construction, a frame, form or shape designed to achieve a particular task. For example, a robot designed to travel across heavy dirt or mud, might use caterpillar tracks. The mechanical aspect is mostly the creators solution to completing the assigned task and dealing with the physics of the environment around it. Form follows function.Robots have electrical components which power and control the machinery. For example, the robot with caterpillar tracks would need some kind of power to move the tracker treads. That power comes in the form of electricity, which will have to travel through a wire and originate from a battery, a basic electrical circuit. Even petrol powered machines that get their power mainly from petrol still require an electric current to start the combustion process which is why most petrol powered machines like cars, have batteries. The electrical aspect of robots is used for movement (through motors), sensing (where electrical signals are used to measure things like heat, sound, position, and energy status) and operation (robots need some level of electrical energy supplied to their motors and sensors in order to activate and perform basic operations) All robots contain some level of computer programming code. A program is how a robot decides when or how to do something. In the caterpillar track example, a robot that needs to move across a muddy road may have the correct mechanical construction and receive the correct amount of power from its battery, but would not go anywhere without a program telling it to move. Programs are the core essence of a robot, it could have excellent mechanical and electrical construction, but if its program is poorly constructed its performance will be very poor (or it may not perform at all). There are three different types of robotic programs: remote control, artificial intelligence and hybrid. 
A robot with remote control programing has a preexisting set of commands that it will only perform if and when it receives a signal from a control source, typically a human being with a remote control. It is perhaps more appropriate to view devices controlled primarily by human commands as falling in the discipline of automation rather than robotics. Robots that use artificial intelligence interact with their environment on their own without a control source, and can determine reactions to objects and problems they encounter using their preexisting programming. Hybrid is a form of programming that incorporates both AI and RC functions.As more and more robots are designed for specific tasks this method of classification becomes more relevant. For example, many robots are designed for assembly work, which may not be readily adaptable for other applications. They are termed as "assembly robots". For seam welding, some suppliers provide complete welding systems with the robot i.e. the welding equipment along with other material handling facilities like turntables, etc. as an integrated unit. Such an integrated robotic system is called a "welding robot" even though its discrete manipulator unit could be adapted to a variety of tasks. Some robots are specifically designed for heavy load manipulation, and are labeled as "heavy-duty robots".one or two wheels. 
These can have certain advantages such as greater efficiency and reduced parts, as well as allowing a robot to navigate in confined places that a four-wheeled robot would not be able to.Two-wheeled balancing robots Balancing robots generally use a gyroscope to detect how much a robot is falling and then drive the wheels proportionally in the same direction, to counterbalance the fall at hundreds of times per second, based on the dynamics of an inverted pendulum.[71] Many different balancing robots have been designed.[72] While the Segway is not commonly thought of as a robot, it can be thought of as a component of a robot, when used as such Segway refer to them as RMP (Robotic Mobility Platform). An example of this use has been as NASA Robonaut that has been mounted on a Segway.One-wheeled balancing robots Main article: Self-balancing unicycle A one-wheeled balancing robot is an extension of a two-wheeled balancing robot so that it can move in any 2D direction using a round ball as its only wheel. Several one-wheeled balancing robots have been designed recently, such as Carnegie Mellon Universitys "Ballbot" that is the approximate height and width of a person, and Tohoku Gakuin University BallIP Because of the long, thin shape and ability to maneuver in tight spaces, they have the potential to function better than other robots in environments with people

"""

# Pass the Text to Model

robotics_doc = nlp(robotics_data)

print('Before PreProcessing n_Tokens: ', len(robotics_doc))

# Removing stopwords and punctuation from the doc.

robotics_doc=[token for token in robotics_doc if not token.is_stop and not token.is_punct]

print('After PreProcessing n_Tokens: ', len(robotics_doc))

#> Before PreProcessing n_Tokens: 1667

#> After PreProcessing n_Tokens: 782

More than half of the tokens are removed, which makes further processing faster and more focused on meaningful words.

4. Lemmatization

Have a look at these words: “played”, “playing”, “plays”, “play”.

These words are not entirely distinct, as they all refer to the root word “play”. Very often, when interpreting the meaning of a text with NLP, you care about the root meaning and not the tense.

For algorithms that work on word counts, having multiple forms of the same word splits the count across those forms instead of crediting the root word, which is ‘play’ in this case.

Hence, counting “played” and “playing” as different tokens will not help.

Lemmatization is the method of converting a token to its root/base form, called its lemma.

Fortunately, spaCy makes this easy.

After you’ve created the Doc object (by calling nlp()), you can access the root form of every token through the Token.lemma_ attribute.

```python
# Lemmatizing the tokens of a doc
text = 'she played chess against rita she likes playing chess.'
doc = nlp(text)

for token in doc:
    print(token.lemma_)

#> -PRON-
#> play
#> chess
#> against
#> rita
#> -PRON-
#> like
#> play
#> chess
#> .
```

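With spaCy v2 models, the lemmatizer returns the placeholder -PRON- for every pronoun, as shown above, so you might have to handle it explicitly. From spaCy v3 onward, pronouns are lemmatized to the pronoun itself (for example, “she” instead of -PRON-).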

5. Strings to Hashes

Whenever you create a doc, the words of the doc are stored in the Vocab.

Now, consider that you have about 1000 text documents, each with information about various clothing items of different brands. Chances are, words like “shirt” and “pants” are going to be very common. If spaCy stored the full string every time “shirt” occurred, it would waste a lot of memory.

But this doesn’t happen. Why?

spaCy converts each string to a hash value, an integer ID, and stores the string itself only once, in the StringStore.

But what is the StringStore?

It’s a lookup table that maps hash values to strings and back, for example 10543432924755684266 <-> box.

You can get the hash value if you know the string, and vice versa. The StringStore is available as nlp.vocab.strings, as shown below.

```python
# Strings to Hashes and Back
doc = nlp("I love traveling")

# Look up the hash for the word "traveling"
word_hash = nlp.vocab.strings["traveling"]
print(word_hash)

# Look up the word_hash to get the string
word_string = nlp.vocab.strings[word_hash]
print(word_string)

#> 5902765392174988614
#> traveling
```

A word has the same hash value regardless of which document it occurs in or which spaCy model is used, because the hash is computed from the string itself.

So your results are reproducible even if you run your code on someone else’s machine.

```python
# Create two different docs with a common word
doc1 = nlp('Raymond shirts are famous')
doc2 = nlp('I washed my shirts ')

# Printing the hash value for each token in the doc
print('-------DOC 1-------')
for token in doc1:
    hash_value = nlp.vocab.strings[token.text]
    print(token.text, ' ', hash_value)

print('-------DOC 2-------')
for token in doc2:
    hash_value = nlp.vocab.strings[token.text]
    print(token.text, ' ', hash_value)

#> -------DOC 1-------
#> Raymond 5945540083247941101
#> shirts 9181315343169869855
#> are 5012629990875267006
#> famous 17809293829314912000
#> -------DOC 2-------
#> I 4690420944186131903
#> washed 5520327350569975027
#> my 227504873216781231
#> shirts 9181315343169869855
```

You can verify that ‘shirts’ has the same hash value in both documents, so spaCy only needs to store the string once. This saves memory.
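The hash is also what each token stores internally: `token.orth` is the hash of the token's text. The sketch below uses two independent blank English pipelines to show that they compute the same hash for "shirts" even though only one of them has processed it.

```python
import spacy

nlp_a = spacy.blank("en")
nlp_b = spacy.blank("en")  # a second, independent pipeline

doc = nlp_a("I washed my shirts")

# Looking up a string returns its hash, computed from the string itself
hash_a = nlp_a.vocab.strings["shirts"]
hash_b = nlp_b.vocab.strings["shirts"]
print(hash_a == hash_b)                   # True

# token.orth holds the same hash; the StringStore maps it back to text
print(doc[3].orth == hash_a)              # True
print(nlp_a.vocab.strings[doc[3].orth])   # shirts
```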

6. Lexical attributes of spaCy

Recall that we used the is_punct and is_space attributes in Text Preprocessing. These are called ‘lexical attributes’.

In this section, you will learn about a few more significant lexical attributes.

spaCy provides many useful lexical attributes. These are attributes of the Token object that give you information about the type of token, and they depend only on the token’s text, not on its context.

For example, you can use the like_num attribute of a token to check whether it looks like a number. Let’s print all the numbers in a text.

```python
# Printing the tokens which are like numbers
text = ' 2020 is far worse than 2009'
doc = nlp(text)

for token in doc:
    if token.like_num:
        print(token)

#> 2020
#> 2009
```
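like_num is broader than a digit check. For English it also matches number words, while is_digit only matches strings made of digits. A quick comparison on a blank pipeline (like_num is a lexical attribute, so no model is needed):

```python
import spacy

nlp = spacy.blank("en")
doc = nlp("I bought ten apples and 250 pears.")

numbers = [t.text for t in doc if t.like_num]
digits = [t.text for t in doc if t.is_digit]
print(numbers)  # ['ten', '250']
print(digits)   # ['250']
```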

Let us discuss some real-life applications of these features.

Say you have a text about the percentage of medicine production in various cities.

```python
production_text = ' Production in chennai is 87 %. In Kolkata, produce it as low as 43 %. In Bangalore, production is as good as 98 %.In mysore, production is average around 78 %'
```

What if you just want a list of the percentages in the text?

You can convert the text into a spaCy Doc object and check which tokens are numbers through the like_num attribute. If a token is a number, you can check whether the next token is “%”. The index of the next token is token.i + 1; make sure it is still inside the doc before you look it up.

```python
# Finding the tokens which are numbers followed by %
production_doc = nlp(production_text)

for token in production_doc:
    if token.like_num:
        index_of_next_token = token.i + 1
        # Guard against a number that is the last token of the doc
        if index_of_next_token < len(production_doc):
            next_token = production_doc[index_of_next_token]
            if next_token.text == '%':
                print(token.text)

#> 87
#> 43
#> 98
#> 78
```

There are other useful attributes too. Let’s discuss more.

7. Detecting Email Addresses

Suppose you have a text document with details of various employees.

What if you want to collect all the employees’ email addresses to send them a common email?

You can tokenize the document and check which tokens are email addresses through the like_email attribute, which returns True if the token looks like an email address.

```python
# Text containing employee details
employee_text = """ name : Koushiki age: 45 email : [email protected]
name : Gayathri age: 34 email: [email protected]
name : Ardra age: 60 email : [email protected]
name : pratham parmar age: 15 email : [email protected]
name : Shashank age: 54 email: [email protected]
name : Utkarsh age: 46 email :[email protected]"""

# Creating a spacy doc
employee_doc = nlp(employee_text)

# Printing the tokens which are emails through the `like_email` attribute
for token in employee_doc:
    if token.like_email:
        print(token.text)

#> [email protected]
#> [email protected]
#> [email protected]
#> [email protected]
#> [email protected]
#> [email protected]
```
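The addresses in the sample above are not shown in full, so here is a self-contained sketch with made-up example.com addresses (hypothetical placeholders) that shows like_email at work on a blank English pipeline:

```python
import spacy

nlp = spacy.blank("en")

# Hypothetical placeholder addresses, for illustration only
text = "Contact koushiki@example.com or gayathri@example.org for the meeting"
doc = nlp(text)

emails = [t.text for t in doc if t.like_email]
print(emails)  # ['koushiki@example.com', 'gayathri@example.org']
```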

Likewise, spaCy provides a variety of other token attributes. Below is a list of some of them and what they do:

- token.is_alpha: Returns True if the token consists of alphabetic characters
- token.is_ascii: Returns True if the token consists of ASCII characters
- token.is_digit: Returns True if the token consists of digits (0-9)
- token.is_upper: Returns True if the token is in uppercase
- token.is_lower: Returns True if the token is in lowercase
- token.is_space: Returns True if the token consists of whitespace characters
- token.is_bracket: Returns True if the token is a bracket
- token.is_quote: Returns True if the token is a quotation mark
- token.like_url: Returns True if the token resembles a URL (a link to a website)
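The attributes listed above can be checked on a single short text. This sketch assumes a blank English pipeline, since all of them are lexical attributes; the dictionary `tok` is only a convenience for looking tokens up by their text.

```python
import spacy

nlp = spacy.blank("en")
doc = nlp('Email NOW (urgent) "ok" 2024 https://example.com')

# Map token text to token for easy lookup (duplicate texts share one key)
tok = {t.text: t for t in doc}

print(tok["Email"].is_alpha, tok["NOW"].is_upper, tok["urgent"].is_lower)  # True True True
print(tok["("].is_bracket, tok['"'].is_quote, tok["2024"].is_digit)       # True True True
print(tok["https://example.com"].like_url)                                # True
print(all(t.is_ascii for t in doc))                                       # True
```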

Apart from lexical attributes, tokens have other attributes that depend on the surrounding context and are predicted by the model. You’ll learn about them in the next sections.

8. Part of Speech analysis with spaCy

Consider the sentence “Emily likes playing football”.

Here, “Emily” is a proper noun and “playing” is a verb. Likewise, each word of a text is a noun, pronoun, verb, conjunction, etc. These categories are called part-of-speech (POS) tags.

How do you identify the part of speech of the words in a text document?

spaCy stores the coarse-grained POS tag of each token in its pos_ attribute.

```python
# POS tagging using spaCy
my_text = 'John plays basketball,if time permits. He played in high school too.'
my_doc = nlp(my_text)

for token in my_doc:
    print(token.text, '---- ', token.pos_)

#> John ---- PROPN
#> plays ---- VERB
#> basketball ---- NOUN
#> , ---- PUNCT
#> if ---- SCONJ
#> time ---- NOUN
#> permits ---- VERB
#> . ---- PUNCT
#> He ---- PRON
#> played ---- VERB
#> in ---- ADP
#> high ---- ADJ
#> school ---- NOUN
#> too ---- ADV
#> . ---- PUNCT
```

From the output above, you can see the POS tag of each word, such as VERB, ADJ, etc.

What if you don’t know what the tag SCONJ means?

The spacy.explain() function returns a short description of a tag or label.

```python
spacy.explain('SCONJ')
#> 'subordinating conjunction'
```
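spacy.explain() works without loading a model, so you can build a quick reference for several tags at once. Terms missing from spaCy's glossary return None instead of raising an error.

```python
import spacy

# spacy.explain looks a term up in spaCy's built-in glossary; no model is needed
tags = ["PROPN", "ADP", "SCONJ", "AUX", "X"]
explanations = {tag: spacy.explain(tag) for tag in tags}
for tag, meaning in explanations.items():
    print(tag, "->", meaning)

# Unknown terms return None
print(spacy.explain("NOT_A_TAG"))  # None
```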

9. How POS tagging helps you in dealing with text based problems.

Suppose you have a text document of reviews or comments on a post. Apart from genuine words, there will be some junk, like “etc”, that carries no meaning. How can you remove it?

spaCy assigns the POS tag X (“other”) to tokens that don’t fit any other category, such as abbreviations like “etc”. So you can find junk tokens through token.pos_ == 'X' and remove them. Since the tags are model predictions, check the results on your own data. The code below demonstrates this.

```python
# Raw text document
raw_text = """I liked the movies etc The movie had good direction The movie was amazing i.e.
The movie was average direction was not bad The cinematography was nice. i.e.
The movie was a bit lengthy otherwise fantastic etc etc"""

# Creating a spacy object
raw_doc = nlp(raw_text)

# Checking if POS tag is X and printing them
print('The junk values are..')
for token in raw_doc:
    if token.pos_ == 'X':
        print(token.text)

print('After removing junk')
# Removing the tokens whose POS tag is X (junk)
clean_doc = [token for token in raw_doc if not token.pos_ == 'X']
print(clean_doc)

#> The junk values are..
#> etc
#> i.e.
#> i.e.
#> etc
#> etc
#> After removing junk
#> [I, liked, the, movies, The, movie, had, good, direction, , The, movie, was, amazing,
#> , The, movie, was, average, direction, was, not, bad, The, cinematography, was, nice, .,
#> , The, movie, was, a, bit, lengthy, , otherwise, fantastic, ]
```

You can also see which types of tokens are present in your text by building a dictionary that maps each POS ID (token.pos) to its name (token.pos_), as shown below.

```python
# Creating a dictionary of POS IDs and the corresponding tag names
all_tags = {token.pos: token.pos_ for token in raw_doc}
print(all_tags)

#> {95: 'PRON', 100: 'VERB', 90: 'DET', 92: 'NOUN', 101: 'X', 87: 'AUX', 84: 'ADJ', 103: 'SPACE', 94: 'PART', 97: 'PUNCT', 86: 'ADV'}
```

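To better understand the structure of a sentence, you can use spaCy’s visualizer, displacy. With style='dep' it draws the dependency parse, with the POS tag under each word.

```python
# Importing displacy
from spacy import displacy

my_text = 'She never like playing , reading was her hobby'
my_doc = nlp(my_text)

# Displaying tokens with their POS tags and dependencies
# (jupyter=True renders inline in a notebook; outside one, use displacy.serve)
displacy.render(my_doc, style='dep', jupyter=True)
```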

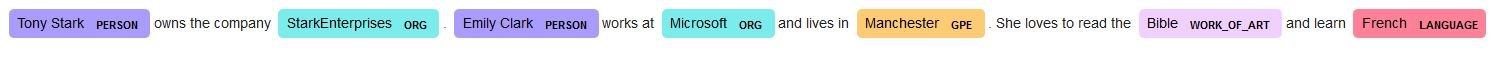

10. Named Entity Recognition

Have a look at this text: “John works at Google”. Here, “John” and “Google” are the names of a person and a company. Such words are referred to as named entities: real-world objects such as people, companies and places.

How can you find all the named entities in a text?

The ents attribute of a Doc gives you all the named entities found in the text.

```python
# Preparing the spaCy document
text = 'Tony Stark owns the company StarkEnterprises . Emily Clark works at Microsoft and lives in Manchester. She loves to read the Bible and learn French'
doc = nlp(text)

# Printing the named entities
print(doc.ents)

#> (Tony Stark, StarkEnterprises, Emily Clark, Microsoft, Manchester, Bible, French)
```

You can see all the named entities printed.

But is this the complete information? No.

Each named entity belongs to a category, such as a person, an organization or a city. Some common named entity categories supported by spaCy’s English models are:

- PERSON: names of people
- GPE: geopolitical entities such as countries, cities and states
- ORG: organizations and companies
- WORK_OF_ART: titles of books, films, songs and other works of art
- PRODUCT: products such as vehicles, food items, furniture and so on
- EVENT: named historical events, such as wars and disasters
- LANGUAGE: any named language
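spacy.explain() also covers entity labels, which is handy when a model returns a label you don't recognize. The commented lines assume `en_core_web_sm` is installed and show how to list every label its NER component can predict, including ones not covered above.

```python
import spacy

labels = ["PERSON", "GPE", "ORG", "WORK_OF_ART", "LANGUAGE"]
for label in labels:
    print(label, "->", spacy.explain(label))

# Assumes en_core_web_sm is installed: the NER component lists every label
# it can predict (DATE, MONEY and others as well)
# nlp = spacy.load("en_core_web_sm")
# print(nlp.get_pipe("ner").labels)
```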

How can you find out which category a named entity belongs to?

Each entity has a label_ attribute that holds its category as a string, as shown below.

```python
# Printing labels of entities
for entity in doc.ents:
    print(entity.text, '--- ', entity.label_)

#> Tony Stark --- PERSON
#> StarkEnterprises --- ORG
#> Emily Clark --- PERSON
#> Microsoft --- ORG
#> Manchester --- GPE
#> Bible --- WORK_OF_ART
#> French --- LANGUAGE
```
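Besides label_, each entity is a Span that knows where it sits in the original string (start_char, end_char), which is what makes tasks like masking entities possible. To keep the sketch runnable without a trained model, it sets doc.ents by hand on a blank pipeline. The hand-labelled spans are an assumption standing in for the ner component's predictions.

```python
import spacy
from spacy.tokens import Span

nlp = spacy.blank("en")
doc = nlp("Emily Clark works at Microsoft")

# Hand-labelled spans (token start, token end) standing in for NER predictions
doc.ents = [Span(doc, 0, 2, label="PERSON"), Span(doc, 4, 5, label="ORG")]

# start_char and end_char index into the original string
for ent in doc.ents:
    print(ent.text, ent.label_, ent.start_char, ent.end_char)

#> Emily Clark PERSON 0 11
#> Microsoft ORG 21 30
```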

spaCy’s displacy also provides a dedicated visualization for named entities: call displacy.render() with style='ent'.

```python
# Using displacy for visualizing NER
from spacy import displacy

displacy.render(doc, style='ent', jupyter=True)
```

11. NER Application 1: Extracting brand names with Named Entity Recognition

Now that you have a grasp of the basic terms and process, let’s see how named entity recognition is useful in practice.

Consider this article about competition in the mobile industry.

mobile_industry_article=""" 30 Major mobile phone brands Compete in India – A Case Study of Success and Failures

Is the Indian mobile market a terrible War Zone? We have more than 30 brands competing with each other. Let’s find out some insights about the world second-largest mobile bazaar.There is a massive invasion by Chinese mobile brands in India in the last four years. Some of the brands have been able to make a mark while others like Meizu, Coolpad, ZTE, and LeEco are a failure.On one side, there are brands like Sony or HTC that have quit from the Indian market on the other side we have new brands like Realme or iQOO entering the marketing in recent months.The mobile market is so competitive that some of the brands like Micromax, which had over 18% share back in 2014, now have less than 5%. Even the market leader Samsung with a 34% market share in 2014, now has a 21% share whereas Xiaomi has become a market leader. The battle is fierce and to sustain and scale-up is going to be very difficult for any new entrant.new comers in Indian Mobile MarketiQOO –They have recently (March 2020) launched the iQOO 3 in India with its first 5G phone – iQOO 3. The new brand is part of the Vivo or the BBK electronics group that also owns several other brands like Oppo, Oneplus and Realme.Realme – Realme launched the first-ever phone – Realme 1 in November 2018 and has quickly became a popular brand in India. The brand is one of the highest sellers in online space and even reached a 16% market share threatening Xiaomi’s dominance.iVoomi – In 2017, we have seen the entry of some new Chinese mobile brands likeiVoomi which focuses on the sub 10k price range, and is a popular online player. They have an association with Flipkart.Techno & Infinix – Transsion Group’s Tecno and Infinix brands debuted in India in mid-2017 and are focusing on the low end and mid-range phones in the price range of Rs. 5000 to Rs. 12000.10.OR & Lephone – 10.OR has a partnership with Amazon India and is an exclusive online brand with phones like 10.OR D, G and E. 
However, the brand is not very aggressive currently.Kult – Kult is another player who launched a very aggressively priced Kult Beyond mobile in 2017 and followed up by launching 2-3 more models.However, most of these new brands are finding it difficult to strengthen their footing in India. As big brands like Xiaomi leave no stone unturned to make things difficult.Also, it is worth noting that there is less Chinese players coming to India now. As either all the big brands have already set shop or burnt their hands and retreated to the homeland China.Chinese/ Global Brands Which failed or are at the Verge of Failing in India?

There are a lot more failures in the market than the success stories. Let’s first look at the failures and then we will also discuss why some brands were able to succeed in India.HTC – The biggest surprise this year for me was the failure of HTC in India. The brand has been in the country for many years, in fact, they were the first brand to launch Android mobiles. Finally HTC decided to call it a day in July 2018.LeEco – LeEco looked promising and even threatening to Xiaomi when it came to India. The company launched a series of new phones and smart TVs at affordable rates. Unfortunately, poor financial planning back home caused the brand to fail in India too.LG – The company seems to have lost focus and are doing poorly in all segments. While the budget and mid-range offering are uncompetitive, the high-end models are not preferred by buyers.Sony – Absurd pricing and lack of ability to understand the Indian buyers have caused Sony to shrink mobile operations in India. In the last 2 years, there are far fewer launches and hardly any promotions or hype around the new products.Meizu – Meizu is also a struggling brand in India and is going nowhere with the current strategy. There are hardly any popular mobiles nor a retail presence.ZTE – The company was aggressive till last year with several new phones launching under the Nubia banner, but with recent issues in the US, they have even lost the plot in India.Coolpad – I still remember the first meeting with Coolpad CEO in Mumbai when the brand started operations. There were big dreams and ambitions, but the company has not been able to deliver and keep up with the rivals in the last 1 year.Gionee – Gionee was doing well in the retail, but the infighting in the company and loss of focus from the Chinese parent company has made it a failure. The company is planning a comeback. However, we will have to wait and see when that happens."""

What if you want to know all the companies that are mentioned in this article?

This is where Named Entity Recognition helps. You can check which tokens are organizations using label_ attribute as shown in below code.

# Creating the spaCy doc
mobile_doc = nlp(mobile_industry_article)

# List to store the names of mobile companies
list_of_org = []

# Append entities which have the label 'ORG' to the list
for entity in mobile_doc.ents:
    if entity.label_ == 'ORG':
        list_of_org.append(entity.text)

print(list_of_org)

#> ['Meizu', 'ZTE', 'LeEco', 'Sony', 'HTC', 'Xiaomi', 'Xiaomi', 'iVoomi', 'Techno & Infinix – Transsion Group',
#>  'Lephone', 'Amazon India', 'Kult', 'Kult', 'Kult Beyond', 'HTC', 'Android', 'Sony', 'Sony', 'Meizu', 'Meizu', 'ZTE', 'Nubia']

You have successfully extracted the list of companies mentioned in the article.
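The list above repeats some names, such as Xiaomi, Kult and Sony. It also includes 'Android', which is an operating system rather than a company. In practice you may want to count and deduplicate the mentions.

Below is a minimal sketch. It assumes you already have the entities as (text, label) pairs, which is what [(ent.text, ent.label_) for ent in mobile_doc.ents] gives you. The helper name count_orgs and the sample pairs are hypothetical.

```python
from collections import Counter

def count_orgs(entities):
    """Count how often each ORG entity text occurs.

    `entities` is an iterable of (text, label) pairs, e.g. built from
    [(ent.text, ent.label_) for ent in doc.ents] in spaCy.
    """
    return Counter(text for text, label in entities if label == "ORG")

# Hypothetical sample pairs, shaped like spaCy's (ent.text, ent.label_) output
sample = [("Xiaomi", "ORG"), ("India", "GPE"), ("Xiaomi", "ORG"),
          ("Kult", "ORG"), ("2017", "DATE")]
counts = count_orgs(sample)
print(counts.most_common())  # [('Xiaomi', 2), ('Kult', 1)]
```

sorted(counts) then gives a deduplicated, alphabetical list of the organization names.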

12. NER Application 2: Automatically Masking Entities

Let us also discuss another application. You probably come across many news articles about theft and other crimes, like the one below.

# Creating a doc on news articles

news_text="""Indian man has allegedly duped nearly 50 businessmen in the UAE of USD 1.6 million and fled the country in the most unlikely way -- on a repatriation flight to Hyderabad, according to a media report on Saturday.Yogesh Ashok Yariava, the prime accused in the fraud, flew from Abu Dhabi to Hyderabad on a Vande Bharat repatriation flight on May 11 with around 170 evacuees, the Gulf News reported.Yariava, the 36-year-old owner of the fraudulent Royal Luck Foodstuff Trading, made bulk purchases worth 6 million dirhams (USD 1.6 million) against post-dated cheques from unsuspecting traders before fleeing to India, the daily said.

The bought goods included facemasks, hand sanitisers, medical gloves (worth nearly 5,00,000 dirhams), rice and nuts (3,93,000 dirhams), tuna, pistachios and saffron (3,00,725 dirhams), French fries and mozzarella cheese (2,29,000 dirhams), frozen Indian beef (2,07,000 dirhams) and halwa and tahina (52,812 dirhams).

The list of items and defrauded persons keeps getting longer as more and more victims come forward, the report said.

The aggrieved traders have filed a case with the Bur Dubai police station.

The traders said when the dud cheques started bouncing they rushed to the Royal Luck's office in Dubai but the shutters were down, even the fraudulent company's warehouses were empty."""

news_doc=nlp(news_text)

If you use an article like this for a case study, you might need to avoid using the original names of people, companies and places. How can you do it?

Write a function that scans the text for named entities with the labels PERSON, ORG and GPE and replaces them with “UNKNOWN”.

I suggest trying it out in your Jupyter notebook if you have access to one. The answer is below.

# Function to check whether a token is a PERSON, ORG or GPE entity and replace it with UNKNOWN
def remove_details(word):
    if word.ent_type_ in ('PERSON', 'ORG', 'GPE'):
        return ' UNKNOWN '
    # text_with_ws is the token text plus its trailing whitespace (token.string in spaCy v2)
    return word.text_with_ws

# Function where each token of the spaCy doc is passed through remove_details()
def update_article(doc):
    # Merge each multi-word entity into a single token that keeps its entity label
    # (spaCy v2 used ent.merge(), which was removed in spaCy v3)
    with doc.retokenize() as retokenizer:
        for ent in doc.ents:
            retokenizer.merge(ent, attrs={"ent_type": ent.label})
    # Passing each token through the remove_details() function
    tokens = map(remove_details, doc)
    return ''.join(tokens)

# Passing our news_doc to the function update_article()
update_article(news_doc)

#> "Indian man has allegedly duped nearly 50 businessmen in the UNKNOWN of USD 1.6 million and fled the country in the most unlikely way -- on a repatriation flight to UNKNOWN , according to a media report on Saturday.

#> UNKNOWN , the prime accused in the fraud, flew from UNKNOWN to UNKNOWN on a Vande Bharat repatriation flight on May 11 with around 170 evacuees, UNKNOWN reported.

#> UNKNOWN , the 36-year-old owner of the fraudulent UNKNOWN , made bulk purchases worth 6 million dirhams (USD 1.6 million) against post-dated cheques from unsuspecting traders before fleeing to UNKNOWN , the daily said.\n\nThe bought goods included facemasks, hand sanitisers, medical gloves (worth nearly 5,00,000 dirhams), rice and nuts (3,93,000 dirhams), tuna, pistachios and saffron (3,00,725 dirhams), French fries and mozzarella cheese (2,29,000 dirhams), frozen Indian beef (2,07,000 dirhams) and halwa and UNKNOWN (52,812 dirhams).\n\nThe list of items and defrauded persons keeps getting longer as more and more victims come forward, the report said.\n\nThe aggrieved traders have filed a case with the Bur Dubai police station.\n\nThe traders said when the UNKNOWN cheques started bouncing they rushed to UNKNOWN office in UNKNOWN but the shutters were down, even the fraudulent company's warehouses were empty."

You can see that the article has been updated and many names are now hidden. These are a few real-world applications of NER.
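Another approach avoids merging tokens altogether and works with character offsets instead. Every entity Span in spaCy exposes start_char, end_char and label_.

The sketch below is plain Python. It assumes the entities have already been extracted as non-overlapping (start_char, end_char, label) tuples, as doc.ents provides. The function names mask_entities and find_entity, and the sample sentence, are illustrative. Spans are replaced from right to left so that earlier offsets stay valid.

```python
def mask_entities(text, entities, labels=("PERSON", "ORG", "GPE"), mask="UNKNOWN"):
    """Replace entity spans whose label is in `labels` with `mask`.

    `entities` holds non-overlapping (start_char, end_char, label) tuples
    with an exclusive end offset, like spaCy's Span.start_char / end_char.
    """
    # Work from the end of the string backwards so that replacing one span
    # does not shift the offsets of the spans that come before it.
    for start, end, label in sorted(entities, reverse=True):
        if label in labels:
            text = text[:start] + mask + text[end:]
    return text

def find_entity(text, substring, label):
    """Build a (start_char, end_char, label) tuple for the first occurrence."""
    start = text.index(substring)
    return (start, start + len(substring), label)

text = "Yariava flew from Abu Dhabi to Hyderabad on May 11."
ents = [find_entity(text, "Yariava", "PERSON"),
        find_entity(text, "Abu Dhabi", "GPE"),
        find_entity(text, "Hyderabad", "GPE"),
        find_entity(text, "May 11", "DATE")]
print(mask_entities(text, ents))
# UNKNOWN flew from UNKNOWN to UNKNOWN on May 11.
```

With a real Doc you would build the list as [(e.start_char, e.end_char, e.label_) for e in doc.ents]. The DATE entity is left alone because its label is not in labels.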

13. Rule based Matching

Consider the sentence “Windows 8.0 has become outdated and slow. It’s better to update to Windows 10”. What if you want to extract all the versions of Windows mentioned in the text?

There will be situations like these, where you need to extract phrases that follow a specific pattern from the text. This is called rule-based matching.

Rule-based matching in spaCy allows you to write your own rules to find or extract words and phrases in a text. spaCy supports three kinds of matching methods:

- Token Matcher

- Phrase Matcher

- Entity Ruler

Token Matcher

spaCy provides a rule-based matching engine, Matcher, which operates over individual tokens to find the desired phrases.

You can import spaCy’s Rule based Matcher as shown below.

from spacy.matcher import Matcher

The procedure to implement a token matcher is:

- Initialize a Matcher object
- Define the pattern you want to match
- Add the pattern to the matcher
- Pass the text to the matcher to extract the matching positions.

Let’s see how to implement the above steps.

Token Matcher Example 1

First step: Initialize the Matcher with the vocabulary of your spacy model nlp

# Initializing the matcher with vocab

matcher = Matcher(nlp.vocab)

matcher

#> <spacy.matcher.matcher.Matcher at 0x7ff4e3a943c8>

You need to store the pattern you want in a list of dictionaries, where each dictionary represents one token. The rules for a token can refer to token attributes and annotations, e.g. IS_DIGIT, IS_ALPHA, TEXT (token.text), POS (token.pos_), etc.

Let’s see how to create the pattern for identifying phrases like “version : 11”, “version : 5” and so on.

First, create a list of dictionaries that represents the pattern you want to capture.

# Define the matching pattern

my_pattern=[{"LOWER": "version"}, {"IS_PUNCT": True}, {"LIKE_NUM": True}]

Now you can add the pattern to your Matcher through the matcher.add() method.

In spaCy v3, the input parameters are:

- key: a custom ID for your matcher. In this case I use "VersionFinder".
- patterns: a list of patterns, where each pattern is a list of dicts describing tokens.
- on_match: an optional keyword argument, a callback function that is called when a match is found.

In spaCy v2 the signature was matcher.add(match_id, on_match, *patterns), which is why older code passes None as the second argument.

# Define the token matcher (spaCy v3: the patterns are passed as a list)
matcher.add('VersionFinder', [my_pattern])

You can now use matcher on your text document.

# Run the Token Matcher

my_text = 'The version : 6 of the app was released about a year back and was not very successful. As a comeback, six months ago, version : 7 was released and it took the stage. After that , the app has had the limelight till now. On interviewing some sources, we get to know that they have outlined visions till version : 12 ,the Ultimate.'

my_doc = nlp(my_text)

desired_matches = matcher(my_doc)

desired_matches

#> [(6950581368505071052, 2, 5),

#> (6950581368505071052, 28, 31),

#> (6950581368505071052, 66, 69)]

Passing the Doc to matcher() returns a list of tuples, as shown above. Each tuple has the structure (match_id, start, end).

match_id is the hash value of the key you passed to matcher.add() (here "VersionFinder"), which is why all three tuples share the same ID. You can find the string corresponding to the ID in nlp.vocab.strings. start and end are the token indices of the match within the document, with end being exclusive.

How do you extract the matching phrases from this list of tuples?

A slice of a Doc object is called a Span. If you have your spaCy doc and the start and end indices, you can extract a slice (span) of the text through span = doc[start:end].

The code below uses this to extract the matching phrases from the list of tuples desired_matches.

# Extract the matches
for match_id, start, end in desired_matches:
    string_id = nlp.vocab.strings[match_id]  # the key, 'VersionFinder'
    span = my_doc[start:end]
    print(span.text)

#> version : 6

#> version : 7

#> version : 12

The code above has successfully performed rule-based matching and printed all the versions mentioned in the text.
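To make the (start, end) results concrete, here is a toy pure-Python sketch of what a token matcher does conceptually. It slides over the tokens and, at each position, checks every dict in the pattern against the corresponding token's attributes.

This is not spaCy's implementation, which also supports operators such as OP, regular expressions and many more attributes. The tokens here are hand-made attribute dicts, LIKE_NUM is crudely approximated with str.isdigit, and toy_match and make_token are hypothetical names.

```python
def toy_match(pattern, tokens):
    """Return (start, end) pairs where `pattern` matches consecutive tokens.

    `pattern` is a list of dicts mapping an attribute name to a required
    value; `tokens` is a list of dicts holding each token's attributes.
    `end` is exclusive, like the indices returned by spaCy's Matcher.
    """
    n = len(pattern)
    matches = []
    for start in range(len(tokens) - n + 1):
        window = tokens[start:start + n]
        # Every pattern dict must agree with the token at the same position.
        if all(
            all(tok.get(attr) == value for attr, value in spec.items())
            for spec, tok in zip(pattern, window)
        ):
            matches.append((start, start + n))
    return matches

def make_token(text):
    # Rough stand-ins for a few spaCy lexical attributes (illustration only).
    return {
        "TEXT": text,
        "LOWER": text.lower(),
        "IS_PUNCT": all(not ch.isalnum() for ch in text),
        "LIKE_NUM": text.isdigit(),
    }

words = "The version : 6 of the app and version : 7".split()
tokens = [make_token(w) for w in words]
my_pattern = [{"LOWER": "version"}, {"IS_PUNCT": True}, {"LIKE_NUM": True}]
for start, end in toy_match(my_pattern, tokens):
    print(start, end, " ".join(words[start:end]))
# 1 4 version : 6
# 8 11 version : 7
```

Because end is exclusive, words[start:end] returns exactly the matched tokens, just as my_doc[start:end] does for a spaCy Doc.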

This is how rule-based matching works. Let’s dive deeper and look at a few more implementations!

Token Matcher Example 2

Consider a text document containing queries on a travel website. You wish to extract phrases from the text that mention visiting various places.

# Parse text
text = """I visited Manali last time. Around same budget trips ?
I was visiting Ladakh this summer
I have planned visiting NewYork and other abroad places for next year
Have you ever visited Kodaikanal? """
doc = nlp(text)

Your desired pattern is a combination of 2 tokens. The first token is a form of the verb “visit”, such as “visited” or “visiting”. The LEMMA attribute handles this, because all these forms share the lemma “visit”. The second token is the place or location, so you can require its POS tag to be “PROPN” (proper noun).

The below code demonstrates how to write and add this pattern to the matcher

# Initialize the matcher
matcher = Matcher(nlp.vocab)

# Write a pattern that matches a form of "visit" + place
my_pattern = [{"LEMMA": "visit"}, {"POS": "PROPN"}]

# Add the pattern to the matcher and apply the matcher to the doc
matcher.add("Visiting_places", [my_pattern])
matches = matcher(doc)

# Counting the number of matches
print("Matches found:", len(matches))

# Iterate over the matches and print the span text
for match_id, start, end in matches:
    print("Match found:", doc[start:end].text)

#> Matches found: 4
#> Match found: visited Manali
#> Match found: visiting Ladakh
#> Match found: visiting NewYork
#> Match found: visited Kodaikanal

The above output is just as desired.

Token Matcher Example 3

Let’s see a slightly more involved example.

Sometimes you need to match a token that may fall under any one of several POS categories. Let us consider an example of this case.

# Parse text

engineering_text = """If you study aeronautical engineering, you could specialize in aerodynamics, aeroelasticity,

composites analysis, avionics, propulsion and structures and materials. If you choose to study chemical engineering, you may like to

specialize in chemical reaction engineering, plant design, process engineering, process design or transport phenomena. Civil engineering is the professional practice of designing and developing infrastructure projects. This can be on a huge scale, such as the development of

nationwide transport systems or water supply networks, or on a smaller scale, such as the development of single roads or buildings.

specializations of civil engineering include structural engineering, architectural engineering, transportation engineering, geotechnical engineering,

environmental engineering and hydraulic engineering. Computer engineering concerns the design and prototyping of computing hardware and software.

This subject merges electrical engineering with computer science, oldest and broadest types of engineering, mechanical engineering is concerned with the design,

manufacturing and maintenance of mechanical systems. You’ll study statics and dynamics, thermodynamics, fluid dynamics, stress analysis, mechanical design and

technical drawing"""

doc = nlp(engineering_text)

Above, you have a text document about different career choices.

Let’s say you wish to extract a list of all the engineering courses mentioned in it. The desired pattern is: _ engineering. The first token is usually an ADJ (e.g. civil, chemical), but sometimes it is a NOUN (e.g. computer, transportation).

So you need to write a pattern with the condition that the first token's POS tag is either NOUN or ADJ.

How do you do that?

The IN predicate helps here. You can use the dictionary {"POS": {"IN": ["NOUN", "ADJ"]}} to represent the first token.

# Initializing the matcher
matcher = Matcher(nlp.vocab)

# Write a pattern that matches "noun/adjective" + "engineering"
my_pattern = [{"POS": {"IN": ["NOUN", "ADJ"]}}, {"LOWER": "engineering"}]

# Add the pattern to the matcher and apply the matcher to the doc
matcher.add("identify_courses", [my_pattern])
matches = matcher(doc)
print("Total matches found:", len(matches))

# Iterate over the matches and print the matching text
for match_id, start, end in matches:
    print("Match found:", doc[start:end].text)

#> Total matches found: 15
#> Match found: aeronautical engineering
#> Match found: chemical engineering
#> Match found: reaction engineering
#> Match found: process engineering
#> Match found: Civil engineering
#> Match found: civil engineering
#> Match found: structural engineering
#> Match found: architectural engineering
#> Match found: transportation engineering
#> Match found: geotechnical engineering
#> Match found: environmental engineering
#> Match found: hydraulic engineering
#> Match found: Computer engineering
#> Match found: electrical engineering
#> Match found: mechanical engineering

You have neatly extracted the desired phrases with the Token matcher.

Note that IN, used in the code above, is part of spaCy's extended pattern syntax, along with NOT_IN. NOT_IN does the exact opposite: it matches tokens whose attribute value is not in the given list.
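Here is a rough pure-Python sketch of how a single token spec with these predicates could be evaluated. spec_matches is a hypothetical helper, and the tokens are plain attribute dicts. Only plain equality, IN and NOT_IN are covered; spaCy's extended syntax has more, such as REGEX.

```python
def spec_matches(token, spec):
    """Check one token (a dict of attributes) against one pattern dict.

    Each value in `spec` is either a plain value (must be equal),
    {"IN": [...]} (must be one of the listed values) or
    {"NOT_IN": [...]} (must be none of the listed values).
    """
    for attr, condition in spec.items():
        value = token.get(attr)
        if isinstance(condition, dict):
            if "IN" in condition and value not in condition["IN"]:
                return False
            if "NOT_IN" in condition and value in condition["NOT_IN"]:
                return False
        elif value != condition:
            return False
    return True

first = {"POS": {"IN": ["NOUN", "ADJ"]}}
print(spec_matches({"POS": "ADJ"}, first))   # True, e.g. "civil"
print(spec_matches({"POS": "VERB"}, first))  # False
print(spec_matches({"POS": "VERB"}, {"POS": {"NOT_IN": ["NOUN", "ADJ"]}}))  # True
```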

This is all about Token Matcher, let’s look at the Phrase Matcher next.

Phrase Matcher

With spaCy's Matcher you can identify token patterns, as seen above. But when you need to match exact phrases, writing a token pattern for each one takes a lot of time and is inefficient.

spaCy provides PhraseMatcher, which can be used when you have a large number of terms (single or multi-token) to be matched in a text document. Writing Matcher patterns for all of them would be very tedious. PhraseMatcher solves this problem, as you can pass Doc patterns rather than token patterns.

The procedure to use PhraseMatcher is very similar to Matcher.

- Initialize a PhraseMatcher object with a vocab.
- Define the terms you want to match
- Add the pattern to the matcher
- Run the text through the matcher to extract the matching positions.

from spacy.matcher import PhraseMatcher

After importing, first initialize the PhraseMatcher with the vocab, as shown below:

# PhraseMatcher

matcher = PhraseMatcher(nlp.vocab)

Since PhraseMatcher is generally used with a long list of terms, it’s best to first store the terms in a list, as shown below:

# Terms to match

terms_list = ['Bruce Wayne', 'Tony Stark', 'Batman', 'Harry Potter', 'Severus Snape']

You can convert each phrase into a Doc object with the nlp.make_doc() method. make_doc() only runs the tokenizer, not the whole pipeline, so it is much faster than calling nlp() on every term.

# Make a list of docs

patterns = [nlp.make_doc(text) for text in terms_list]

You can add the patterns to your matcher through the matcher.add() method.

In spaCy v3, the inputs are a custom ID for your matcher and the list of Doc patterns. An optional callback can be passed as the on_match keyword argument. (In spaCy v2 this was written matcher.add("phrase_matcher", None, *patterns).)

matcher.add("phrase_matcher", patterns)

Now you can apply your matcher to your spacy text document. Below, you have a text article on prominent fictional characters and their creators.

# Text document about fictional characters

fictional_char_doc = nlp("""Superman (first appearance: 1938) Created by Jerry Siegal and Joe Shuster for Action Comics #1 (DC Comics).Mickey Mouse (1928) Created by Walt Disney and Ub Iworks for Steamboat Willie.Bugs Bunny (1940) Created by Warner Bros and originally voiced by Mel Blanc.Batman (1939) Created by Bill Finger and Bob Kane for Detective Comics #27 (DC Comics).

Dorothy Gale (1900) Created by L. Frank Baum for novel The Wonderful Wizard of Oz. Later portrayed by Judy Garland in the 1939 film adaptation.Darth Vader (1977) Created by George Lucas for Star Wars IV: A New Hope.The Tramp (1914) Created and portrayed by Charlie Chaplin for Kid Auto Races at Venice.Peter Pan (1902) Created by J.M. Barrie for novel The Little White Bird.

Indiana Jones (1981) Created by George Lucas for Raiders of the Lost Ark. Portrayed by Harrison Ford.Rocky Balboa (1976) Created and portrayed by Sylvester Stallone for Rocky.Vito Corleone (1969) Created by Mario Puzo for novel The Godfather. Later portrayed by Marlon Brando and Robert DeNiro in Coppola’s film adaptation.Han Solo (1977) Created by George Lucas for Star Wars IV: A New Hope.

Portrayed most famously by Harrison Ford.Homer Simpson (1987) Created by Matt Groening for The Tracey Ullman Show, later The Simpsons as voiced by Dan Castellaneta.Archie Bunker (1971) Created by Norman Lear for All in the Family. Portrayed by Carroll O’Connor.Norman Bates (1959) Created by Robert Bloch for novel Psycho. Later portrayed by Anthony Perkins in Hitchcock’s film adaptation.King Kong (1933)

Created by Edgar Wallace and Merian C Cooper for the film King Kong.Lucy Ricardo (1951) Portrayed by Lucille Ball for I Love Lucy.Spiderman (1962) Created by Stan Lee and Steve Ditko for Amazing Fantasy #15 (Marvel Comics).Barbie (1959) Created by Ruth Handler for the toy company Mattel Spock (1964) Created by Gene Roddenberry for Star Trek. Portrayed most famously by Leonard Nimoy.

Godzilla (1954) Created by Tomoyuki Tanaka, Ishiro Honda, and Eiji Tsubaraya for the film Godzilla.The Joker (1940) Created by Jerry Robinson, Bill Finger, and Bob Kane for Batman #1 (DC Comics)Winnie-the-Pooh (1924) Created by A.A. Milne for verse book When We Were Young.Popeye (1929) Created by E.C. Segar for comic strip Thimble Theater (King Features).Tarzan (1912) Created by Edgar Rice Burroughs for the novel Tarzan of the Apes.Forrest Gump (1986) Created by Winston Groom for novel Forrest Gump. Later portrayed by Tom Hanks in Zemeckis’ film adaptation.Hannibal Lector (1981) Created by Thomas Harris for the novel Red Dragon. Portrayed most famously by Anthony Hopkins in the 1991 Jonathan Demme film The Silence of the Lambs.

Big Bird (1969) Created by Jim Henson and portrayed by Carroll Spinney for Sesame Street.Holden Caulfield (1945) Created by J.D. Salinger for the Collier’s story “I’m Crazy.” Reworked into the novel The Catcher in the Rye in 1951.Tony Montana (1983) Created by Oliver Stone for film Scarface. Portrayed by Al Pacino.Tony Soprano (1999) Created by David Chase for The Sopranos. Portrayed by James Gandolfini.

The Terminator (1984) Created by James Cameron and Gale Anne Hurd for The Terminator. Portrayed by Arnold Schwarzenegger.Jon Snow (1996) Created by George RR Martin for the novel The Game of Thrones. Portrayed by Kit Harrington.Charles Foster Kane (1941) Created and portrayed by Orson Welles for Citizen Kane.Scarlett O’Hara (1936) Created by Margaret Mitchell for the novel Gone With the Wind. Portrayed most famously by Vivien Leigh

for the 1939 Victor Fleming film adaptation.Marty McFly (1985) Created by Robert Zemeckis and Bob Gale for Back to the Future. Portrayed by Michael J. Fox.Rick Blaine (1940) Created by Murray Burnett and Joan Alison for the unproduced stage play Everybody Comes to Rick’s. Later portrayed by Humphrey Bogart in Michael Curtiz’s film adaptation Casablanca.Man With No Name (1964) Created by Sergio Leone for A Fistful of Dollars, which was adapted from a ronin character in Kurosawa’s Yojimbo (1961). Portrayed by Clint Eastwood.Charlie Brown (1948) Created by Charles M. Shultz for the comic strip L’il Folks; popularized two years later in Peanuts.E.T. (1982) Created by Melissa Mathison for the film E.T.: the Extra-Terrestrial.Arthur Fonzarelli (1974) Created by Bob Brunner for the show Happy Days. Portrayed by Henry Winkler.)Phillip Marlowe (1939) Created by Raymond Chandler for the novel The Big Sleep.Jay Gatsby (1925) Created by F. Scott Fitzgerald for the novel The Great Gatsby.Lassie (1938) Created by Eric Knight for a Saturday Evening Post story, later turned into the novel Lassie Come-Home in 1940, film adaptation in 1943, and long-running television show in 1954. Most famously portrayed by the dog Pal.

Fred Flintstone (1959) Created by William Hanna and Joseph Barbera for The Flintstones. Voiced most notably by Alan Reed. Rooster Cogburn (1968) Created by Charles Portis for the novel True Grit. Most famously portrayed by John Wayne in the 1969 film adaptation. Atticus Finch (1960) Created by Harper Lee for the novel To Kill a Mockingbird. (Appeared in the earlier work Go Set A Watchman, though this was not published until 2015) Portrayed most famously by Gregory Peck in the Robert Mulligan film adaptation. Kermit the Frog (1955) Created and performed by Jim Henson for the show Sam and Friends. Later popularized in Sesame Street (1969) and The Muppet Show (1976) George Bailey (1943) Created by Phillip Van Doren Stern (then as George Pratt) for the short story The Greatest Gift. Later adapted into Capra’s It’s A Wonderful Life, starring James Stewart as the renamed George Bailey. Yoda (1980) Created by George Lucas for The Empire Strikes Back. Sam Malone (1982) Created by Glen and Les Charles for the show Cheers. Portrayed by Ted Danson. Zorro (1919) Created by Johnston McCulley for the All-Story Weekly pulp magazine story The Curse of Capistrano.Later adapted to the Douglas Fairbanks’ film The Mark of Zorro (1920).Moe, Larry, and Curly (1928) Created by Ted Healy for the vaudeville act Ted Healy and his Stooges. Mary Poppins (1934) Created by P.L. Travers for the children’s book Mary Poppins. Ron Burgundy (2004) Created by Will Ferrell and Adam McKay for the film Anchorman: The Legend of Ron Burgundy. Portrayed by Will Ferrell. Mario (1981) Created by Shigeru Miyamoto for the video game Donkey Kong. Harry Potter (1997) Created by J.K. Rowling for the novel Harry Potter and the Philosopher’s Stone. The Dude (1998) Created by Ethan and Joel Coen for the film The Big Lebowski. Portrayed by Jeff Bridges.

Gandalf (1937) Created by J.R.R. Tolkien for the novel The Hobbit. The Grinch (1957) Created by Dr. Seuss for the story How the Grinch Stole Christmas! Willy Wonka (1964) Created by Roald Dahl for the children’s novel Charlie and the Chocolate Factory. The Hulk (1962) Created by Stan Lee and Jack Kirby for The Incredible Hulk #1 (Marvel Comics) Scooby-Doo (1969) Created by Joe Ruby and Ken Spears for the show Scooby-Doo, Where Are You! George Costanza (1989) Created by Larry David and Jerry Seinfeld for the show Seinfeld. Portrayed by Jason Alexander.Jules Winfield (1994) Created by Quentin Tarantino for the film Pulp Fiction. Portrayed by Samuel L. Jackson. John McClane (1988) Based on the character Detective Joe Leland, who was created by Roderick Thorp for the novel Nothing Lasts Forever. Later adapted into the John McTernan film Die Hard, starring Bruce Willis as McClane. Ellen Ripley (1979) Created by Don O’cannon and Ronald Shusett for the film Alien. Portrayed by Sigourney Weaver. Ralph Kramden (1951) Created and portrayed by Jackie Gleason for “The Honeymooners,” which became its own show in 1955.Edward Scissorhands (1990) Created by Tim Burton for the film Edward Scissorhands. Portrayed by Johnny Depp.Eric Cartman (1992) Created by Trey Parker and Matt Stone for the animated short Jesus vs Frosty. Later developed into the show South Park, which premiered in 1997. Voiced by Trey Parker.

Walter White (2008) Created by Vince Gilligan for Breaking Bad. Portrayed by Bryan Cranston. Cosmo Kramer (1989) Created by Larry David and Jerry Seinfeld for Seinfeld. Portrayed by Michael Richards.Pikachu (1996) Created by Atsuko Nishida and Ken Sugimori for the Pokemon video game and anime franchise.Michael Scott (2005) Based on a character from the British series The Office, created by Ricky Gervais and Steven Merchant. Portrayed by Steve Carell.Freddy Krueger (1984) Created by Wes Craven for the film A Nightmare on Elm Street. Most famously portrayed by Robert Englund.

Captain America (1941) Created by Joe Simon and Jack Kirby for Captain America Comics #1 (Marvel Comics)Goku (1984) Created by Akira Toriyama for the manga series Dragon Ball Z.Bambi (1923) Created by Felix Salten for the children’s book Bambi, a Life in the Woods. Later adapted into the Disney film Bambi in 1942.Ronald McDonald (1963) Created by Williard Scott for a series of television spots.Waldo/Wally (1987) Created by Martin Hanford for the children’s book Where’s Wally? (Waldo in US edition) Frasier Crane (1984) Created by Glen and Les Charles for Cheers. Portrayed by Kelsey Grammar.Omar Little (2002) Created by David Simon for The Wire.Portrayed by Michael K. Williams.

Wolverine (1974) Created by Roy Thomas, Len Wein, and John Romita Sr for The Incredible Hulk #180 (Marvel Comics) Jason Voorhees (1980) Created by Victor Miller for the film Friday the 13th. Betty Boop (1930) Created by Max Fleischer and the Grim Network for the cartoon Dizzy Dishes. Bilbo Baggins (1937) Created by J.R.R. Tolkien for the novel The Hobbit. Tom Joad (1939) Created by John Steinbeck for the novel The Grapes of Wrath. Later adapted into the 1940 John Ford film and portrayed by Henry Fonda.Tony Stark (Iron Man) (1963) Created by Stan Lee, Larry Lieber, Don Heck and Jack Kirby for Tales of Suspense #39 (Marvel Comics)Porky Pig (1935) Created by Friz Freleng for the animated short film I Haven’t Got a Hat. Voiced most famously by Mel Blanc.Travis Bickle (1976) Created by Paul Schrader for the film Taxi Driver. Portrayed by Robert De Niro.

Hawkeye Pierce (1968) Created by Richard Hooker for the novel MASH: A Novel About Three Army Doctors. Famously portrayed by both Alan Alda and Donald Sutherland. Don Draper (2007) Created by Matthew Weiner for the show Mad Men. Portrayed by Jon Hamm. Cliff Huxtable (1984) Created and portrayed by Bill Cosby for The Cosby Show. Jack Torrance (1977) Created by Stephen King for the novel The Shining. Later adapted into the 1980 Stanley Kubrick film and portrayed by Jack Nicholson. Holly Golightly (1958) Created by Truman Capote for the novella Breakfast at Tiffany’s. Later adapted into the 1961 Blake Edwards films starring Audrey Hepburn as Holly. Shrek (1990) Created by William Steig for the children’s book Shrek! Later adapted into the 2001 film starring Mike Myers as the titular character. Optimus Prime (1984) Created by Dennis O’Neil for the Transformers toy line.Sonic the Hedgehog (1991) Created by Naoto Ohshima and Yuji Uekawa for the Sega Genesis game of the same name.Harry Callahan (1971) Created by Harry Julian Fink and R.M. Fink for the movie Dirty Harry. Portrayed by Clint Eastwood.Bubble: Hercule Poirot, Tyrion Lannister, Ron Swanson, Cercei Lannister, J.R. Ewing, Tyler Durden, Spongebob Squarepants, The Genie from Aladdin, Pac-Man, Axel Foley, Terry Malloy, Patrick Bateman

Pre-20th Century: Santa Claus, Dracula, Robin Hood, Cinderella, Huckleberry Finn, Odysseus, Sherlock Holmes, Romeo and Juliet, Frankenstein, Prince Hamlet, Uncle Sam, Paul Bunyan, Tom Sawyer, Pinocchio, Oliver Twist, Snow White, Don Quixote, Rip Van Winkle, Ebenezer Scrooge, Anna Karenina, Ichabod Crane, John Henry, The Tooth Fairy,

Br’er Rabbit, Long John Silver, The Mad Hatter, Quasimodo """)

character_matches = matcher(fictional_char_doc)

The PhraseMatcher returns a list of (match_id, start, end) tuples describing the matches. Each match tuple describes the span doc[start:end].

The match_id is the hash of the string ID (the key) of the match pattern, here "phrase_matcher".

# Matching positions

character_matches

#> [(520014689628841516, 1366, 1368),

#> (520014689628841516, 1379, 1381),

#> (520014689628841516, 2113, 2115)]

These tuples tell you where the terms were found in the text, but not what they are. To find out, extract the Span using start and end, as shown below.

# Matched items

for match_id, start, end in character_matches:

span = fictional_char_doc[start:end]

print(span.text)

#> Batman

#> Batman

#> Harry Potter

#> Harry Potter

#> Tony Stark

You can see that ‘Harry Potter’ and ‘Batman’ were mentioned twice and ‘Tony Stark’ once, but the other terms didn’t match.
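Each match is just a token range, so you can tally how often each term was found by turning the ranges back into text. Here is a small sketch in plain Python, assuming a list of token strings and (match_id, start, end) tuples. The token list and the match_id value 42 are made up for illustration. With a real Doc you would use doc[start:end].text instead of joining strings, which keeps the original whitespace.

```python
from collections import Counter

def count_matched_terms(tokens, matches):
    """Count matched phrases, given (match_id, start, end) tuples."""
    return Counter(" ".join(tokens[start:end]) for _match_id, start, end in matches)

# Made-up tokens and matches, shaped like PhraseMatcher output
tokens = ["Batman", "(", "1939", ")", "...", "Harry", "Potter", "...", "Batman", "#", "1"]
matches = [(42, 0, 1), (42, 5, 7), (42, 8, 9)]
print(count_matched_terms(tokens, matches))
# Counter({'Batman': 2, 'Harry Potter': 1})
```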

Another useful feature of PhraseMatcher is the attr parameter, which you can set while initializing the matcher to control how matching happens.

How do you use attr?

Setting attr changes which token attribute is compared to determine a match. For example, if you use attr='LOWER', matching is case-insensitive.

The example below demonstrates this.

# Using the attr parameter as 'LOWER'
case_insensitive_matcher = PhraseMatcher(nlp.vocab, attr="LOWER")

# Creating doc & pattern
my_doc = nlp('I wish to visit new york city')
terms = ['New York']
# make_doc() is enough here, because LOWER only needs the tokenizer
pattern = [nlp.make_doc(term) for term in terms]

# Adding the pattern to the matcher (spaCy v3 signature)
case_insensitive_matcher.add("matcher", pattern)

# Applying the matcher to the doc
my_matches = case_insensitive_matcher(my_doc)
for match_id, start, end in my_matches:
    span = my_doc[start:end]
    print(span.text)

#> new york

You can see that the phrase was matched despite the difference in case.
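Conceptually, attr decides which token attribute is compared. The pure-Python sketch below imitates that with a normalize function applied to both the pattern tokens and the text tokens. Passing str.lower plays the role of attr="LOWER", and leaving the text unchanged plays the role of the default exact-text matching.

The sketch assumes pre-split tokens, and phrase_match is a hypothetical name. It is an illustration of the idea, not how spaCy implements PhraseMatcher internally.

```python
def phrase_match(phrases, tokens, normalize=lambda t: t):
    """Find token ranges matching any phrase, comparing normalized tokens.

    `phrases` is a list of token lists and `tokens` is the text as a token
    list. Returns (start, end) pairs sorted by start, with an exclusive end.
    """
    # Store each phrase as a tuple of normalized tokens for fast set lookup.
    lookup = {tuple(normalize(t) for t in phrase) for phrase in phrases}
    lengths = sorted({len(key) for key in lookup})
    norm = [normalize(t) for t in tokens]
    matches = []
    for start in range(len(tokens)):
        for n in lengths:
            # Only consider windows that fit entirely inside the text.
            if start + n <= len(tokens) and tuple(norm[start:start + n]) in lookup:
                matches.append((start, start + n))
    return matches

tokens = "I wish to visit new york city".split()
terms = [["New", "York"]]
print(phrase_match(terms, tokens))                       # [] because the case differs
print(phrase_match(terms, tokens, normalize=str.lower))  # [(4, 6)]
```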

Let’s see a more useful case.

If you set attr='SHAPE', matching is based on the shape of the tokens in the pattern. The shape replaces letters with x or X and digits with d, so “154.6” has the shape “ddd.d”.

This can be used to match strings with a fixed format, such as dates, times or codes, where every instance has the same shape. Let us consider a text with information about various radio channels.

You want to extract the channels, which have the form ddd.d.

my_doc = nlp('From 8 am , Mr.X will be speaking on your favorite channel 191.1. Afterward there shall be an exclusive interview with actor Vijay on channel 194.1 . Hope you are having a great day. Call us on 666666')

Let us create the pattern. You need to pass an example radio channel of the desired shape as pattern to the matcher.

pattern=nlp('154.6')

Your pattern is ready. Now initialize the PhraseMatcher with attr set to "SHAPE", then add the pattern to the matcher.

# Initializing the matcher and adding pattern

pincode_matcher= PhraseMatcher(nlp.vocab,attr="SHAPE")

pincode_matcher.add("pincode_matching", [pattern])

You can apply the matcher to your doc as usual and print the matching phrases.

# Applying matcher on doc

matches = pincode_matcher(my_doc)

# Printing the matched phrases

for match_id, start, end in matches:

span = my_doc[start:end]

print(span.text)

#> 191.1

#> 194.1

The output above shows both radio channels mentioned in the text.
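To see why “191.1” and “194.1” match the pattern “154.6”, it helps to compute token shapes. The function below is a pure-Python approximation modeled on the rules spaCy documents for Token.shape_:

- letters become x or X
- digits become d
- other characters are kept
- runs of the same shape character are cut off after four

Treat it as an illustration rather than spaCy's exact code. The names word_shape and shape_matches are hypothetical.

```python
def word_shape(text):
    """Approximate spaCy's token shape: X/x for letters, d for digits."""
    shape = []
    last = ""
    run = 0
    for char in text:
        if char.isalpha():
            shape_char = "X" if char.isupper() else "x"
        elif char.isdigit():
            shape_char = "d"
        else:
            shape_char = char
        # Count how many times in a row this shape character has repeated.
        run = run + 1 if shape_char == last else 0
        last = shape_char
        if run < 4:  # keep at most four consecutive copies
            shape.append(shape_char)
    return "".join(shape)

def shape_matches(example, tokens):
    """Return the tokens whose shape equals the shape of `example`."""
    target = word_shape(example)
    return [tok for tok in tokens if word_shape(tok) == target]

tokens = ["channel", "191.1", ".", "interview", "194.1", "666666"]
print(word_shape("154.6"))             # ddd.d
print(shape_matches("154.6", tokens))  # ['191.1', '194.1']
```

“666666” is not matched because its shape is “dddd”, which has no dot.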

Entity Ruler

Entity Ruler is interesting and very useful.

When detecting entities, certain names or organizations are sometimes not recognized by default. This might be because they are small-scale or rare. Wouldn’t it be better to improve the accuracy of doc.ents?

spaCy provides a more advanced component, EntityRuler, that lets you match named entities based on pattern dictionaries. This lets you add rule-based entities on top of the statistical named entity recognizer.

It is a built-in pipeline component. In spaCy v3 you add it to the pipeline by its registered name, "entity_ruler", and add_pipe() creates the component and returns it. (In spaCy v2 you would instead write ruler = EntityRuler(nlp) and later nlp.add_pipe(ruler).)

# Add the entity ruler to the NLP pipeline.
# The NLP pipeline is a sequence of NLP tasks that spaCy performs for a given text.
# More on pipelines in a later section of this post.
# before="ner" places the ruler ahead of the statistical NER, which then respects its matches.
ruler = nlp.add_pipe("entity_ruler", before="ner")

What type of patterns do you pass to the EntityRuler?

You pass a list of dictionaries, where each dictionary represents one pattern to be matched. Each dictionary has two keys, "label" and "pattern":

- label: holds the entity type, e.g. PERSON, GPE, etc.
- pattern: holds the pattern to match, either an exact phrase string such as "John" or "Calcutta", or a list of token dicts like the Matcher patterns above.

For example, let us consider a situation where you want to add certain book names under the entity label WORK_OF_ART.

What will your pattern be?

The label will be WORK_OF_ART and the pattern will contain the book names you wish to add. The code below demonstrates this.

pattern = [{"label": "WORK_OF_ART", "pattern": "My guide to statistics"}]

You can add patterns to the ruler through the add_patterns() method:

ruler.add_patterns(pattern)

Now the EntityRuler is part of nlp. You can pass the text document to nlp to create a spaCy Doc. Because the ruler has been added, “My guide to statistics” will be recognized as a named entity with the label WORK_OF_ART.

You can verify this with the code below.

# Extract the custom entity type

doc = nlp(" I recently published my work fanfiction by Dr.X . Right now I'm studying the book of my friend .You should try My guide to statistics for clear concepts.")

print([(ent.text, ent.label_) for ent in doc.ents])

#> [('My guide to statistics', 'WORK_OF_ART')]

You have successfully enhanced named entity recognition. It is also possible to train spaCy to detect new entity types it has not seen before.

The EntityRuler has many more useful features. You'll run into some of them later in this article.
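Patterns do not have to be plain strings. Below is a minimal sketch that assumes spaCy v3 is installed. It uses a blank English pipeline (spacy.blank("en"), so no model download is needed and there is no statistical NER) to show a phrase pattern next to a token pattern. The token pattern uses LOWER to match case-insensitively. The sample sentence and the GPE pattern are made up for illustration.

```python
import spacy

# Blank English pipeline: tokenizer only, no statistical NER.
nlp = spacy.blank("en")

# add_pipe creates the EntityRuler, appends it to the pipeline and returns it.
ruler = nlp.add_pipe("entity_ruler")

patterns = [
    # Phrase pattern: an exact string, matched on the token texts.
    {"label": "WORK_OF_ART", "pattern": "My guide to statistics"},
    # Token pattern: one dict per token, matched on the lowercase form.
    {"label": "GPE", "pattern": [{"LOWER": "san"}, {"LOWER": "francisco"}]},
]
ruler.add_patterns(patterns)

doc = nlp("I read My guide to statistics while visiting san francisco")
print([(ent.text, ent.label_) for ent in doc.ents])
# [('My guide to statistics', 'WORK_OF_ART'), ('san francisco', 'GPE')]
```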

14. Word Vectors and similarity

Word vectors are numerical vector representations of words and documents. The numeric form captures the semantics of a word and can be used for NLP tasks such as classification.

This works because words that are similar in meaning and context have vectors that lie closer together.

spaCy's medium and large models ship with built-in word vectors, which you can access directly through the attributes of Token and Doc. How can you check whether the model provides a vector for a token?

First, load a spaCy model of your choice. Here, I am using the medium English model, en_core_web_md. Next, process your text document with the model's nlp object.

You can check whether a token has a built-in vector through the Token.has_vector attribute.

!python -m spacy download en_core_web_md

# Check if word vector is available

import spacy

# Loading a spacy model

nlp = spacy.load("en_core_web_md")

tokens = nlp("I am an excellent cook")

for token in tokens:

    print(token.text, ' ', token.has_vector)

#> I True

#> am True

#> an True

#> excellent True

#> cook True

You can see that all tokens in the text above have a vector. This is because these words are part of the model's vocabulary. Let's see what happens when the text contains a non-existent or made-up word.

# Check if word vector is available

tokens=nlp("I wish to go to hogwarts lolXD ")

for token in tokens:

    print(token.text, ' ', token.has_vector)

#> I True

#> wish True

#> to True

#> go True

#> to True

#> hogwarts True

#> lolXD False

The word “lolXD” is not a part of the model’s vocabulary, hence it does not have a vector.

How do you access the vector of a token?

You can access it through the token.vector attribute. The token.vector_norm attribute stores the L2 norm of the token's vector representation.

# Print the L2 norm of each token's vector

tokens = nlp("I wish to go to hogwarts lolXD ")

for token in tokens:

    print(token.text, ' ', token.vector_norm)

#> I 6.4231944

#> wish 5.1652417

#> to 4.74484

#> go 5.05723

#> to 4.74484

#> hogwarts 7.4110312

#> lolXD 0.0

Notice that when a token has no vector, its vector_norm is 0.
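vector_norm is the Euclidean (L2) length of the vector: the square root of the sum of its squared components. The minimal pure-Python sketch below uses made-up 4-dimensional vectors; real en_core_web_md vectors have 300 dimensions. It shows why a token without a vector, whose vector is all zeros, ends up with a norm of 0. The helper `l2_norm` and the values in `toy_vectors` are hypothetical.

```python
import math
from typing import Dict, List


def l2_norm(vector: List[float]) -> float:
    """Square root of the sum of squared components."""
    return math.sqrt(sum(x * x for x in vector))


# Hypothetical toy vectors; the values are made up for illustration.
toy_vectors: Dict[str, List[float]] = {
    "wish": [3.0, 4.0, 0.0, 0.0],
    "lolXD": [0.0, 0.0, 0.0, 0.0],  # no vector available: all zeros
}

for word, vec in toy_vectors.items():
    print(word, l2_norm(vec))
# wish 5.0
# lolXD 0.0
```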

Identifying the similarity of two words or tokens is crucial. It is the basis of many everyday NLP tasks, such as text classification and recommendation systems. For example, knowing how similar two sentences are lets you group them into the same category or into different ones.

How to find similarity of two tokens?

Every Doc and Token object has a similarity() method, which you can use to compare it with another Doc or Token.

By default, it computes the cosine similarity between the two objects' vectors. For a Doc, the vector is the average of its token vectors.

It returns a float value: the higher the value, the more similar the two tokens or documents.
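Cosine similarity is the dot product of two vectors divided by the product of their L2 norms. Its value ranges from -1 to 1, and it ignores vector length. The pure-Python sketch below uses hypothetical 2-dimensional vectors. It averages token vectors into a document vector, mirroring spaCy's default Doc.vector, and then compares the results with cosine similarity. Like spaCy, which returns 0.0 with a warning when either vector is all zeros, the sketch returns 0.0 in that case. `cosine` and `doc_vector` are illustrative helpers, not spaCy APIs.

```python
import math
from typing import List


def cosine(u: List[float], v: List[float]) -> float:
    """Dot product divided by the product of the L2 norms."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0  # no vector information: report no similarity
    return dot / (norm_u * norm_v)


def doc_vector(token_vectors: List[List[float]]) -> List[float]:
    """Average the token vectors column by column."""
    n = len(token_vectors)
    return [sum(column) / n for column in zip(*token_vectors)]


# Hypothetical token vectors for two tiny "documents".
doc_a = doc_vector([[1.0, 3.0], [3.0, 1.0]])  # [2.0, 2.0]
doc_b = doc_vector([[4.0, 4.0]])              # [4.0, 4.0]

print(round(cosine(doc_a, doc_b), 4))        # 1.0 (same direction)
print(cosine([1.0, 0.0], [0.0, 1.0]))        # 0.0 (orthogonal)
print(cosine([1.0, 0.0], [-1.0, 0.0]))       # -1.0 (opposite)
```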

# Compute Similarity

token_1=nlp("bad")

token_2=nlp("terrible")

similarity_score=token_1.similarity(token_2)

print(similarity_score)

#> 0.7739191815858104

That is how you use the similarity function.

Let me show you an example of how similarity() function on docs can help in text categorization.

review_1=nlp(' The food was amazing')

review_2=nlp('The food was excellent')

review_3=nlp('I did not like the food')

review_4=nlp('It was very bad experience')

score_1=review_1.similarity(review_2)

print('Similarity between review 1 and 2',score_1)

score_2=review_3.similarity(review_4)

print('Similarity between review 3 and 4',score_2)

#> Similarity between review 1 and 2 0.9566212627033192

#> Similarity between review 3 and 4 0.8461898618188776

You can see that the first two reviews have a high similarity score and hence belong in the same category (positive). Reviews 3 and 4, which are both negative, also score fairly high.
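The same idea can be turned into a simple nearest-neighbour categorizer: label a new review with the category of the most similar labeled review. With spaCy you would compare Doc objects via new_review.similarity(example). The pure-Python sketch below swaps in cosine similarity on made-up 3-dimensional vectors so that it runs without a model. The function `categorize` and all vectors are hypothetical.

```python
import math
from typing import List, Tuple


def cosine(u: List[float], v: List[float]) -> float:
    """Cosine similarity; 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def categorize(vector: List[float], examples: List[Tuple[List[float], str]]) -> str:
    """Return the label of the labeled example most similar to `vector`."""
    _, best_label = max(examples, key=lambda ex: cosine(vector, ex[0]))
    return best_label


# Hypothetical review vectors with known labels.
examples = [
    ([0.9, 0.1, 0.0], "positive"),
    ([0.1, 0.9, 0.2], "negative"),
]

print(categorize([0.8, 0.2, 0.1], examples))  # positive
print(categorize([0.2, 0.7, 0.3], examples))  # negative
```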

You can also check whether two tokens or docs are related (whether similar or opposite in meaning) or completely unrelated.

# Compute Similarity between texts

pizza=nlp('pizza')

burger=nlp('burger')

chair=nlp('chair')

print('Pizza and burger ',pizza.similarity(burger))

print('Pizza and chair ',pizza.similarity(chair))

#> Pizza and burger 0.7269758865234512

#> Pizza and chair 0.1917966191121549

You can observe that pizza and burger, which are both food items, have a good similarity score.

In contrast, pizza and chair are unrelated, and their score is very low.

15. Merging and Splitting Tokens with retokenize

When the nlp object is called on a text document, spaCy first tokenizes the text to produce a Doc object. The Tokenizer is responsible for segmenting the text into tokens.

Sometimes tokenization splits what is really a single unit, such as a name, into several tokens.

Consider the case below: you have a text about the film ‘John Wick’.

# Printing tokens of a text

text="John Wick is a 2014 American action thriller film directed by Chad Stahelski"

doc=nlp(text)

for token in doc:

    print(token.text)

#> John

#> Wick

#> is

#> a

#> 2014

#> American

#> action

#> thriller

#> film

#> directed

#> by

#> Chad

#> Stahelski

You can see from the output that ‘John’ and ‘Wick’ have been recognized as separate tokens. The same goes for the director’s name, “Chad Stahelski”.

In this case, it would be easier if “John Wick” were treated as a single token.

So, how do you combine the tokens?

spaCy provides Doc.retokenize, a context manager that allows you to merge and split tokens. To merge two or more tokens, you can use the retokenizer.merge() method.

How do you use retokenizer.merge()?

The input arguments are:

span: the slice of the doc that you want treated as a single token. In this case, “John Wick” is stored in a span and passed as input: span=doc[0:2]

attrs: a dictionary of attributes to set on the merged token. Here, I want to set the POS (part-of-speech) tag of “John Wick” to PROPN (proper noun). You can use attrs={"POS": "PROPN"} to achieve this.

# Using retokenizer.merge()

with doc.retokenize() as retokenizer:

    attrs = {"POS": "PROPN"}

    retokenizer.merge(doc[0:2], attrs=attrs)

# The merge is applied when the with block exits

for token in doc:

    print(token.text)

#> John Wick

#> is

#> a

#> 2014

#> American

#> action

#> thriller

#> film

#> directed

#> by

#> Chad

#> Stahelski

You can also verify that “John Wick” has been assigned the ‘PROPN’ POS tag with the code below.

# Printing tokens after merging

for token in doc:

    print(token.text, token.pos_)

#> John Wick PROPN

#> is AUX

#> a DET

#> 2014 NUM

#> American ADJ

#> action NOUN

#> thriller NOUN

#> film NOUN

#> directed VERB

#> by ADP

#> Chad PROPN

#> Stahelski PROPN

The attribute has been added correctly.
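The retokenizer only applies changes when the with block exits. Because of that, every span you pass refers to the original token indices, and several merges can be queued in one block. Below is a minimal sketch, assuming spaCy v3 and a blank English pipeline (no model download needed). It merges both names from the John Wick sentence at once and sets the POS tag on each merged token.

```python
import spacy

nlp = spacy.blank("en")
doc = nlp("John Wick is a 2014 American action thriller film directed by Chad Stahelski")

with doc.retokenize() as retokenizer:
    # Indices refer to the original doc; both merges are applied together on exit.
    retokenizer.merge(doc[0:2], attrs={"POS": "PROPN"})    # "John Wick"
    retokenizer.merge(doc[11:13], attrs={"POS": "PROPN"})  # "Chad Stahelski"

print([token.text for token in doc])
print(doc[0].pos_, doc[-1].pos_)
```

Merging inside a single block avoids recomputing indices after each merge, which you would otherwise have to do if you merged "John Wick" first and then looked up "Chad Stahelski" in the shortened doc.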

You have seen how to merge tokens. Now, let us look at how to split tokens. Consider the text below.

text = 'I purchased the trendy OnePlus7'

What if you want to store the brand ‘OnePlus’ and the version ‘7’ as separate tokens? How can you split the token?

spaCy provides the retokenizer.split() method for this purpose.

The input parameters are:

token: the token of the doc that has to be split.

orths: a list of texts matching the original token. This tells the retokenizer how to split the token; joined together, the texts must equal the original token text.

heads: a list of tokens or (token, subtoken) tuples specifying which token each newly split subtoken attaches to.

attrs: an optional dictionary of attributes to set on the split tokens, mapping each attribute name to a list of per-subtoken values.

# Splitting tokens using retokenizer.split()

doc = nlp('I purchased the trendy OnePlus7 ')

with doc.retokenize() as retokenizer:

    # "OnePlus" attaches to subtoken 1 of doc[4] (the new "7"); "7" attaches to "purchased"

    heads = [(doc[4], 1), doc[1]]

    retokenizer.split(doc[4], ["OnePlus", "7"], heads=heads)

for token in doc:

    print(token.text)

#> I

#> purchased

#> the

#> trendy

#> OnePlus

#> 7
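The attrs parameter takes one value per new subtoken. Below is a minimal sketch, assuming spaCy v3 and a blank English pipeline (no model download needed). It repeats the split above and also tags the pieces. The POS values PROPN and NUM are chosen here for illustration.

```python
import spacy

nlp = spacy.blank("en")
doc = nlp("I purchased the trendy OnePlus7")

with doc.retokenize() as retokenizer:
    # "OnePlus" attaches to subtoken 1 of doc[4] (the new "7"),
    # and "7" attaches to "purchased" (doc[1]).
    heads = [(doc[4], 1), doc[1]]
    # Each attribute maps to a list with one value per new subtoken.
    attrs = {"POS": ["PROPN", "NUM"]}
    retokenizer.split(doc[4], ["OnePlus", "7"], heads=heads, attrs=attrs)

print([(token.text, token.pos_) for token in doc])
```

If the texts in the orths list do not join up to exactly "OnePlus7", spaCy raises an error instead of splitting.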

16. spaCy pipelines

You have used tokens and docs in many ways so far. In this section, let’s dive deeper and understand the basic pipeline behind them.

When you call the nlp object on a text, spaCy first segments the text into tokens to create a Doc object. After that, various processes run on the Doc to add attributes such as POS tags, lemmas, dependency labels, etc.

This sequence of steps is referred to as the processing pipeline.

What are pipeline components?

The processing pipeline consists of components: each component performs its task and passes the processed Doc on to the next component. These are called pipeline components.
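Conceptually, each component is a step that receives a Doc, adds some annotation and hands it on. The pure-Python sketch below models that flow, with a dictionary standing in for spaCy's Doc. `tokenize`, `add_lengths`, `flag_digits` and `run_pipeline` are hypothetical toy functions, not spaCy APIs, although custom components in spaCy are likewise callables that take a Doc and return it.

```python
from typing import Callable, Dict, List

# Hypothetical stand-in for spaCy's Doc: token texts plus annotation layers.
ToyDoc = Dict[str, list]
Component = Callable[[ToyDoc], ToyDoc]


def tokenize(text: str) -> ToyDoc:
    """First step: split the text into tokens (spaCy's tokenizer is far smarter)."""
    return {"tokens": text.split()}


def add_lengths(doc: ToyDoc) -> ToyDoc:
    """Toy component: annotate each token with its length."""
    doc["lengths"] = [len(tok) for tok in doc["tokens"]]
    return doc


def flag_digits(doc: ToyDoc) -> ToyDoc:
    """Toy component: mark tokens that consist only of digits."""
    doc["is_digit"] = [tok.isdigit() for tok in doc["tokens"]]
    return doc


def run_pipeline(text: str, components: List[Component]) -> ToyDoc:
    """Tokenize, then pass the doc through each component in order."""
    doc = tokenize(text)
    for component in components:
        doc = component(doc)
    return doc


doc = run_pipeline("I bought 2 phones", [add_lengths, flag_digits])
print(doc)
```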

spaCy provides certain built-in pipeline components. Let’s look at them.

The built-in pipeline components of spaCy are: