The logging module lets you track events when your code runs so that when the code crashes you can check the logs and identify what caused it. Log messages have a built-in hierarchy – starting from debugging, informational, warnings, error and critical messages. You can include traceback information as well. It is designed for small to large python projects with multiple modules and is highly recommended for any modular python programming. This post is a simple and clear explanation of how to use the logging module.

Logging in Python – Simplified Guide with Full Code and Examples. Photo by Andrea Reiman.

Logging in Python – Simplified Guide with Full Code and Examples. Photo by Andrea Reiman.

Content

- Why logging?

- A Basic logging Example

- The 5 levels of logging

- How to log to a file instead of the console

- How to change the logging format

- Why working with the root logger for all modules isn’t the best idea

- How to create a new logger?

- What is and How to setup a File Handler and Formatter?

- How to include traceback information in logged messages

- Exercises

- Conclusion

1. Why logging?

When you run a python script, you want to know what part of the script is getting executed and inspect what values the variables hold.

Usually, you may just ‘print()‘ out meaningful messages so you can see them in the console. And this probably all you need when you are developing small programs.

The problem is, when you use this approach on larger projects with multiple modules you want a more flexible approach.

Why?

Because, the code could go through different stages as in development, debugging, review, testing or in production.

The type of messages you want to print out during development can be very different from want you to see once it goes into production. Depending on the purpose, you want the code to print out different types of messages.

This can get cumbersome with if else and print statements. Besides, you want a certain hierarchy when it comes to printing messages.

What I mean by that is, during a certain ‘testing’ run, you want to see only warnings and error messages. Whereas during ‘debugging’, you not only want to see the warnings and error messages but also the debugging-related messages. Imagine doing this with ‘if else‘ statements on a multi-module project.

If you want to print out which module and at what time the codes were run, your code could easily get messier.

There is good news. All these issues are nicely addressed by the logging module.

Using logging, you can:

- Control message level to log only required ones

- Control where to show or save the logs

- Control how to format the logs with built-in message templates

- Know which module the messages is coming from

You might say ‘I see that logging can be useful but it seems too technical and seems to be a bit difficult to grasp‘. Well, yes, logging requires a bit of learning curve but that’s what this post is here for: make logging easy to learn.

Without further delay, let’s get right into it.

2. A Basic logging Example

Python provides an in-built logging module which is part of the python standard library. So you don’t need to install anything.

To use logging, all you need to do is setup the basic configuration using logging.basicConfig(). Actually, this is also optional. We will see about that soon.

Then, instead of print(), you call logging.{level}(message) to show the message in console.

import logging

logging.basicConfig(level=logging.INFO)

def hypotenuse(a, b):

"""Compute the hypotenuse"""

return (a**2 + b**2)**0.5

logging.info("Hypotenuse of {a}, {b} is {c}".format(a=3, b=4, c=hypotenuse(a,b)))

#> INFO:root:Hypotenuse of 3, 4 is 5.0

The printed log message has the following default format: {LEVEL}:{LOGGER}:{MESSAGE}.

In the above case, the level is info, because, I called logging.info().

The logger is called root, because that is the default logger and I did not create a new one, yet.

But what is a logger anyway?

A logger is like an entity you can create and configure to log different type and format of messages.

You can configure a logger that prints to the console and another logger that sends the logs to a file, has a different logging level and is specific to a given module. More explanations and examples coming up on this.

Finally, the message is the string I passed to logging.info().

Now, what would have happened had you not setup logging.basicConfig(level=logging.INFO)?

Answer: The log would not have been printed.

Why?

To know that let’s understand the levels of logging.

3. The 5 levels of logging

logging has 5 different hierarchical levels of logs that a given logger may be configured to.

Let’s see what the python docs has to say about each level:

- DEBUG: Detailed information, for diagnosing problems. Value=10.

- INFO: Confirm things are working as expected. Value=20.

- WARNING: Something unexpected happened, or indicative of some problem. But the software is still working as expected. Value=30.

- ERROR: More serious problem, the software is not able to perform some function. Value=40

- CRITICAL: A serious error, the program itself may be unable to continue running. Value=50

Now, coming back to the previous question of what would have happened had you not setup logging.basicConfig(level=logging.INFO) in the previous example.

The answer is: the log would not have been printed because, the default logger is the ‘root’ and its default basicConfig level is ‘WARNING’. That means, only messages from logging.warning() and higher levels will get logged.

So, the message of logging.info() would not be printed. And that is why the basic config was set as INFO initially (in logging.basicConfig(level=logging.INFO)).

Had I set the level as logging.ERROR instead, only message from logging.error and logging.critical will be logged. Clear?

import logging

logging.basicConfig(level=logging.WARNING)

def hypotenuse(a, b):

"""Compute the hypotenuse"""

return (a**2 + b**2)**0.5

kwargs = {'a':3, 'b':4, 'c':hypotenuse(3, 4)}

logging.debug("a = {a}, b = {b}".format(**kwargs))

logging.info("Hypotenuse of {a}, {b} is {c}".format(**kwargs))

logging.warning("a={a} and b={b} are equal".format(**kwargs))

logging.error("a={a} and b={b} cannot be negative".format(**kwargs))

logging.critical("Hypotenuse of {a}, {b} is {c}".format(**kwargs))

#> WARNING:root:a=3 and b=3 are equal

#> ERROR:root:a=-1 and b=4 cannot be negative

#> CRITICAL:root:Hypotenuse of a, b is 5.0

4. How to log to a file instead of the console

To send the log messages to a file from the root logger, you need to set the file argument in logging.basicConfig()

import logging

logging.basicConfig(level=logging.INFO, file='sample.log')

Now all subsequent log messages will go straight to the file ‘sample.log’ in your current working directory. If you want to send it to a file in a different directory, give the full file path.

5. How to change the logging format

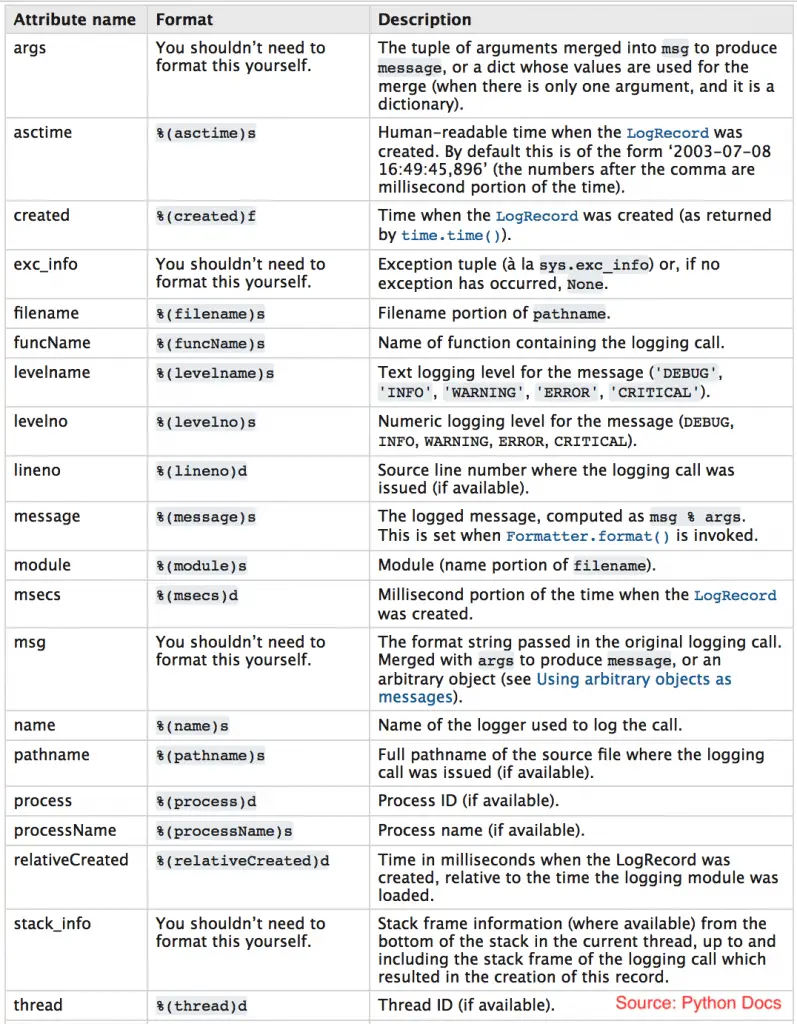

The logging module provides shorthands to add various details to the logged messages. The below image from Python docs shows that list.

Let’s change the log message format to show the TIME, LEVEL and the MESSAGE. To do that just add the format to logging.basiconfig()‘s format argument.

import logging

logging.basicConfig(level=logging.INFO, format='%(asctime)s :: %(levelname)s :: %(message)s')

logging.info("Just like that!")

#> 2019-02-17 11:40:38,254 :: INFO :: Just like that!

6. Why working with the root logger for all modules isn’t the best idea

Because they all will share the same ‘root’ logger.

But why is that bad?

Let’s look at the below code:

# 1. code inside myprojectmodule.py

import logging

logging.basicConfig(file='module.log')

#-----------------------------

# 2. code inside main.py (imports the code from myprojectmodule.py)

import logging

import myprojectmodule # This runs the code in myprojectmodule.py

logging.basicConfig(file='main.log') # No effect, because!

Imagine you have one or more modules in your project. And these modules use the basic root module. Then, when importing the module (‘myprojectmodule.py‘), all of that module’s code will run and logger gets configured.

Once configured, the root logger in the main file (that imported the ‘myprojectmodule‘ module) will no longer be able to change the root logger settings. Because, the logging.basicConfig() once set cannot be changed.

That means, if you want to log the messages from myprojectmodule to one file and the logs from the main module in another file, root logger can’t that.

To do that you need to create a new logger.

7. How to create a new logger?

You can create a new logger using the ‘logger.getLogger(name)‘ method. If a logger with the same name exists, then that logger will be used.

While you can give pretty much any name to the logger, the convention is to use the __name__ variable like this:

logger = logging.getLogger(__name__)

logger.info('my logging message')

But, why use __name__ as the name of the logger, instead of hardcoding a name?

Because the __name__ variable will hold the name of the module (python file) that called the code. So, when used inside a module, it will create a logger bearing the value provided by the module’s __name__ attribute.

By doing this, if you end up changing module name (file name) in future, you don’t have to modify the internal code.

Now, once you’ve created a new logger, you should remember to log all your messages using the new logger.info() instead of the root’s logging.info() method.

Another aspect to note is, all the loggers have a built-in hierarchy to it.

What do I mean by that?

For example, if you have configured the root logger to log messages to a particular file. You also have a custom logger for which you have not configured the file handler to send messages to console or another log file.

In this case, the custom logger will fallback and write to the file set by the root logger itself. Until and unless you configure the log file of your custom logger.

So what is a file handler and how to set one up?

8. What is and How to set up a File Handler and Formatter?

The FileHandler() and Formatter() classes are used to setup the output file and the format of messages for loggers other than the root logger.

Do you remember how we setup the filename and the format of the message in the root logger (inside logging.basicConfig()) earlier?

We just specified the filename and format parameters in logging.basicConfig() and all subsequent logs went to that file.

However, when you create a separate logger, you need to set them up individually using the logging.FileHandler() and logging.Formatter() objects.

A FileHandler is used to make your custom logger to log in to a different file. Likewise, a Formatter is used to change the format of your logged messages.

import logging

# Gets or creates a logger

logger = logging.getLogger(__name__)

# set log level

logger.setLevel(logging.WARNING)

# define file handler and set formatter

file_handler = logging.FileHandler('logfile.log')

formatter = logging.Formatter('%(asctime)s : %(levelname)s : %(name)s : %(message)s')

file_handler.setFormatter(formatter)

# add file handler to logger

logger.addHandler(file_handler)

# Logs

logger.debug('A debug message')

logger.info('An info message')

logger.warning('Something is not right.')

logger.error('A Major error has happened.')

logger.critical('Fatal error. Cannot continue')

Notice how we set the formatter on the ‘file_handler‘ and not the ‘logger‘ directly.

Assuming the above code is run from the main program, if you look inside the working directory, a file named logfile.log will be created if it doesn’t exist and would contain the below messages.

#> 2019-02-17 12:40:14,797 : WARNING : __main__ : Something is not right.

#> 2019-02-17 12:40:14,798 : ERROR : __main__ : A Major error has happened.

#> 2019-02-17 12:40:14,798 : CRITICAL : __main__ : Fatal error. Cannot continue

Note again, the Formatter is set on the FileHandler object and not directly on the logger. Something you may want to get used to.

9. How to include traceback information in logged messages

Besides ‘debug‘, ‘info‘, ‘warning‘, ‘error‘, and ‘critical‘ messages, you can log exceptions that will include any associated traceback information.

With logger.exception, you can log traceback information should the code encounter any error. logger.exception will log the message provided in its arguments as well as the error message traceback info.

Below is a nice example.

import logging

# Create or get the logger

logger = logging.getLogger(__name__)

# set log level

logger.setLevel(logging.INFO)

def divide(x, y):

try:

out = x / y

except ZeroDivisionError:

logger.exception("Division by zero problem")

else:

return out

# Logs

logger.error("Divide {x} / {y} = {c}".format(x=10, y=0, c=divide(10,0)))

#> ERROR:__main__:Division by zero problem

#> Traceback (most recent call last):

#> File "<ipython-input-16-a010a44fdc0a>", line 12, in divide

#> out = x / y

#> ZeroDivisionError: division by zero

#> ERROR:__main__:None

10. Exercises

- Create a new project directory and a new python file named ‘

example.py‘. Import the logging module and configure the root logger to the level of ‘debug’ messages. Log an ‘info’ message with the text: “This is root logger’s logging message!”. -

Configure the root logger to format the message “This is root logger’s logging message!” as the following:

#> 2019-03-03 17:18:32,703 :: INFO :: Module <stdin> :: Line No 1 :: This is root logger's logging message!

import logging

logging.basicConfig(level=logging.INFO, format='%(asctime)s :: %(levelname)s :: Module %(module)s :: Line No %(lineno)s :: %(message)s')

logging.info("This is root logger's logging mesage!")

- Create another python file in the same directory called ‘

mymod.py‘ and create a new logger bearing the module’s name. Configure it to the level of ‘error’ messages and make it send the log outputs to a file called “mymod_{current_date}.log”. -

From ‘mymod’ logger created above, log the following ‘critical’ message to the said log file: “This is a critical message!. Don’t ignore it”.

11. Conclusion

Many congratulations if you were able to solve the exercises!

That was quite useful and straightforward wasn’t it?

logging is a great tool but is not popular is Data science workflows as it should be. I hope the logging concepts are clear and the next time you work on a python based project, my kind request for you is to remember to give the logging module a shot.

Happy logging!