Nebullvm is an open-source library that takes a deep learning model as input and outputs an optimized version that runs 5-20 times faster on your machine.

Nebullvm tests multiple deep learning compilers to identify the fastest way to execute your model on your specific hardware, without impacting the accuracy of your model (see the GitHub repository).
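To make the selection idea concrete, here is a minimal sketch of "try several backends and keep the fastest". It is not nebullvm's actual API or implementation: the function names `average_latency_ms` and `pick_fastest`, the `candidates` dictionary, and the default of 20 runs are all assumptions made for illustration. Each candidate is just a zero-argument callable standing in for the same model compiled by a different backend.

```python
import time
from statistics import mean
from typing import Callable, Dict, Tuple


def average_latency_ms(run: Callable[[], object], n_runs: int = 20) -> float:
    """Average wall-clock time of `run` in milliseconds over `n_runs` calls."""
    timings = []
    for _ in range(n_runs):
        start = time.perf_counter()
        run()
        timings.append((time.perf_counter() - start) * 1000.0)
    return mean(timings)


def pick_fastest(
    candidates: Dict[str, Callable[[], object]], n_runs: int = 20
) -> Tuple[str, Dict[str, float]]:
    """Benchmark every candidate and return the fastest name plus all latencies.

    `candidates` maps a hypothetical backend name to a callable that runs
    one inference with the model built by that backend.
    """
    latencies = {
        name: average_latency_ms(run, n_runs) for name, run in candidates.items()
    }
    best = min(latencies, key=latencies.get)
    return best, latencies
```

In practice each callable would wrap one inference call on a fixed input, and a real tool would also need to check that every candidate's outputs match the original model before comparing speed, a step this sketch leaves out.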

The goal of nebullvm is to let any developer benefit from deep learning compilers without having to spend countless hours understanding, installing, testing and debugging this powerful technology.

Testing nebullvm on your models

Below you can find three notebooks for testing the library with the most popular AI frameworks: TensorFlow, PyTorch and Hugging Face.

The notebooks will run locally on your hardware so you can get an idea of the performance you would achieve with nebullvm on your AI models.

Note that it may take a few minutes to install the library the first time, as the library also installs the deep learning compilers responsible for optimization.

Benchmarks

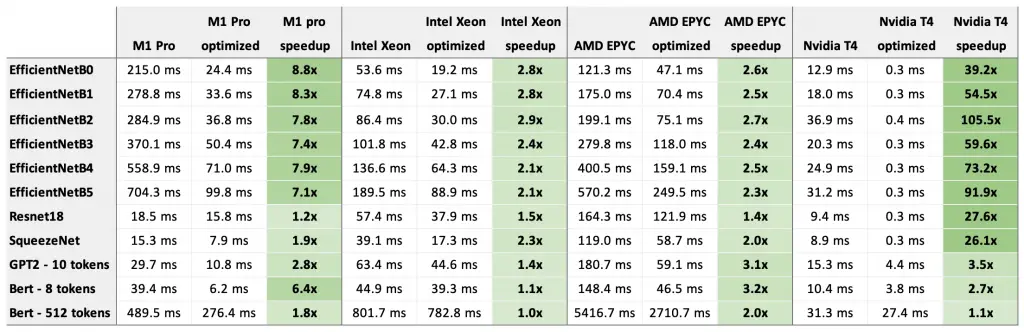

We have tested nebullvm on popular AI models and hardware from leading vendors.

- Hardware: M1 Pro, NVIDIA T4, Intel Xeon, AMD EPYC

- AI Models: EfficientNet, ResNet, SqueezeNet, BERT, GPT-2

The benchmark table shows the response time in milliseconds (ms) of the non-optimized model and the optimized model for each model-hardware pairing, averaged over 100 experiments. It also reports the speedup provided by nebullvm, where speedup is defined as the response time of the non-optimized model divided by the response time of the optimized model, so a value above 1 means the optimized model is faster.
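As a small worked example of the metric, the sketch below computes a speedup from two lists of measured response times, taking speedup as the average non-optimized time divided by the average optimized time. The function name `speedup` and the sample latencies are hypothetical and are not taken from the benchmark results.

```python
from statistics import mean
from typing import Sequence


def speedup(baseline_ms: Sequence[float], optimized_ms: Sequence[float]) -> float:
    """Ratio of average non-optimized latency to average optimized latency.

    A result above 1.0 means the optimized model responds faster.
    """
    return mean(baseline_ms) / mean(optimized_ms)


# Illustrative numbers only (not benchmark data): the baseline averages
# 11 ms and the optimized model averages 5.5 ms.
example = speedup([10.0, 12.0], [5.0, 6.0])
```

With these made-up inputs, `example` is 2.0, which would be reported as a 2x speedup.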

At first glance, the speedup varies greatly across hardware-model pairings. Overall, the results are strongly positive, with most speedups ranging from 2x to more than 30x.

To summarize, the results are:

- Nebullvm provides positive acceleration to non-optimized AI models

- These early results show smaller (yet still positive) speedups on Hugging Face models. Support for Hugging Face has just been released and improvements will be included in future versions

- The library provides a ~2–3x boost on Intel and AMD hardware. These results are most likely related to an already highly optimized implementation of PyTorch for x86 devices

- Nebullvm delivers extremely good performance on NVIDIA machines

- The library also performs very well on Apple M1 chips

Across all scenarios, nebullvm proves to be very useful due to its ease of use.

More about nebullvm

Nebullvm is an open-source library that can be found on GitHub. It was developed by Nebuly and received 1,000 stars on GitHub in the first month after its launch, along with more than 2,500 downloads. The main contributor to the library is Diego Fiori, the CTO of Nebuly.

The library is continuously expanding with new features and capabilities, all aimed at enabling developers to deploy optimized AI models.